The Most Expensive Problem No One Budgeted For

Companies are investing billions in GPUs, accelerators, and high-performance AI clusters. But the hidden cost isn’t in the silicon; it’s in managing the extreme heat that silicon produces.

Once a background function in enterprise data centers, cooling is now moving to the forefront.

In the AI era, cooling is no longer optional; it’s what sustains the entire system at scale.

With AI models growing larger and GPUs getting denser, racks now regularly reach or exceed the 50 to 80 kW range, and the economics of cooling are becoming impossible to ignore.

Efficiency metrics like PUE still matter, but they no longer tell the whole story.

The real question for operators now is simple: how much are we spending just to keep every watt of AI compute running within thermal limits?

At the Uptime Institute briefing in 2024, Executive Director of Research Martin McCarthy emphasized:

“AI workloads are changing the economic profile of data centers. Cooling is no longer a secondary consideration; it’s a limiting factor.”

That shift in physical infrastructure spend is now being captured by a once-overlooked metric: the power-to-cooling cost ratio.

Understanding the Power-to-Cooling Cost Ratio

At the center of this transformation lies a metric few executives tracked until recently: the power-to-cooling cost ratio. It poses a simple but critical question: for every dollar spent powering compute, how much must be spent cooling it?

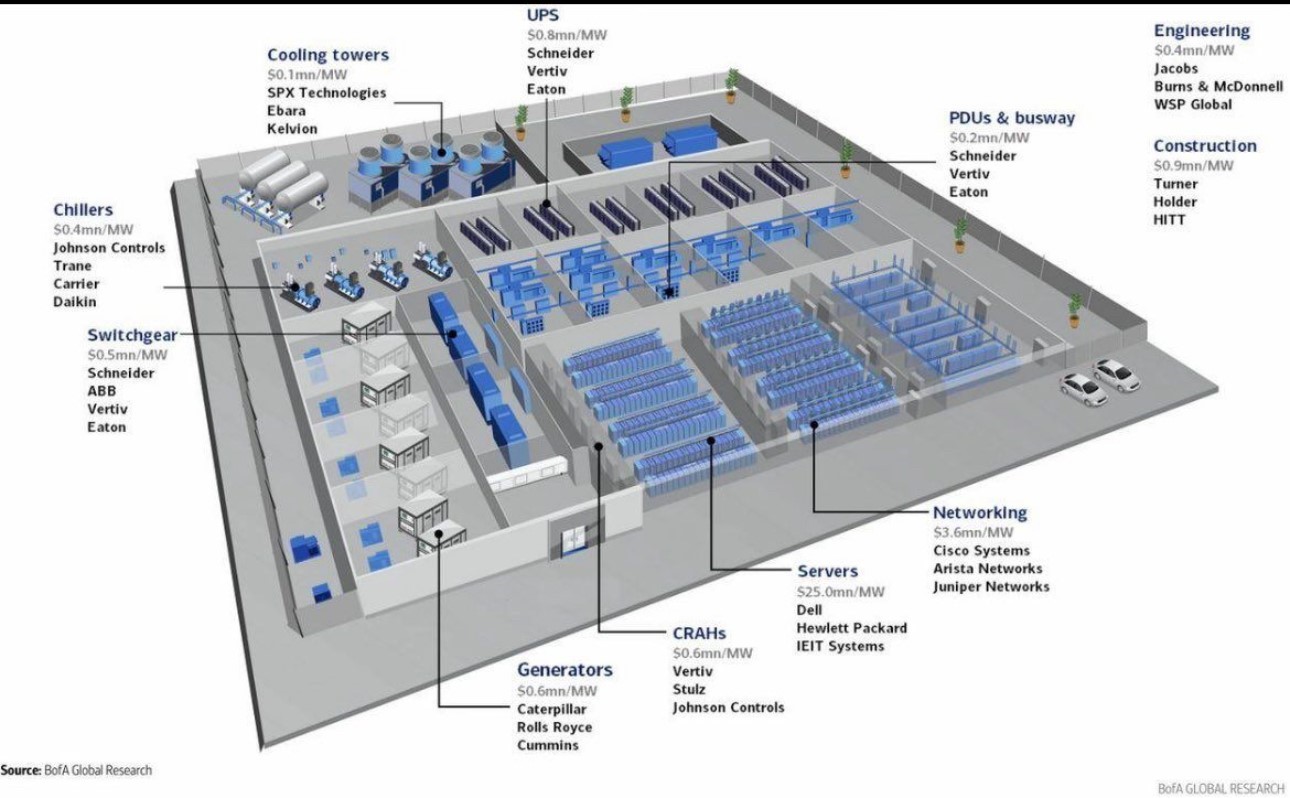

According to the BofA Global Research infrastructure model, servers now account for USD 25 million per megawatt (MW), the largest share of a data center’s capital expenditure. Cooling systems, including CRAHs, chillers, and cooling towers, add over USD 1.1 million per MW.

Including UPS at USD 0.8 million per MW, generators at USD 0.6 million per MW, switchgear at USD 0.5 million per MW, and PDUs and busway at USD 0.2 million per MW, the total power infrastructure costs climb to roughly USD 4.5 million per MW, which is about 15 percent of overall capex.

If rack densities continue to climb as they have, cooling expenditure in ultra-dense AI facilities could approach USD 2 million per MW, nearly double the USD 1.1 million per MW baseline identified in the BofA Global Research infrastructure model.
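To make the capex figures concrete, here is a minimal Python sketch that expresses cooling spend as a fraction of server spend. It assumes one possible capex-based reading of the power-to-cooling cost ratio (cooling capex divided by server capex, both per MW); the article does not give a formula, and the function and constant names are illustrative only.

```python
# Figures quoted in the text, in USD millions per MW.
SERVER_CAPEX = 25.0            # servers
COOLING_CAPEX_BASELINE = 1.1   # cooling baseline
COOLING_CAPEX_DENSE = 2.0      # projected for ultra-dense AI facilities


def cooling_to_compute_ratio(cooling_capex: float, compute_capex: float) -> float:
    """Dollars of cooling capex per dollar of compute capex.

    Assumption: this capex-only definition is one way to read the
    ratio; an opex-based version would use energy costs instead.
    """
    if compute_capex <= 0:
        raise ValueError("compute_capex must be positive")
    return cooling_capex / compute_capex


baseline = cooling_to_compute_ratio(COOLING_CAPEX_BASELINE, SERVER_CAPEX)
dense = cooling_to_compute_ratio(COOLING_CAPEX_DENSE, SERVER_CAPEX)

print(f"baseline: {baseline:.3f} per dollar of server capex")
print(f"dense:    {dense:.3f} per dollar of server capex")
print(f"increase: {dense / baseline:.2f}x")
```

On these figures, cooling rises from roughly 4 cents to 8 cents per dollar of server capex. The absolute share stays small, but the near-doubling is what shifts the ROI model.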

These numbers underline two realities: cooling has graduated from a back-office duty to a boardroom priority, and any credible ROI model for AI infrastructure must account for the full cost of compute and cooling at scale.

PUE and the Disappearing Efficiency Margins

The most widely used metric for thermal efficiency is Power Usage Effectiveness (PUE), which compares the total power consumed by a data center, including cooling, lighting, and power distribution, to the power used solely by its IT equipment.

A PUE of 1.0 represents perfect efficiency, where every watt of power is used exclusively for IT equipment, with none lost to cooling or any other overhead systems.

Hyperscalers have long optimized for low PUEs, but the demands of AI workloads are beginning to test the limits of that efficiency model. Meta, for instance, reported an average global PUE of 1.09 across its data centers in 2023, highlighting just how challenging further efficiency gains have become.
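The PUE definition above reduces to a single division. The short Python sketch below shows it, along with the overhead a given PUE implies. The 1,000 kW IT load is a hypothetical figure chosen only to make the arithmetic easy to follow, and the function names are illustrative.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("it_kw must be positive")
    if total_facility_kw < it_kw:
        raise ValueError("total facility power cannot be below IT power")
    return total_facility_kw / it_kw


def overhead_kw(pue_value: float, it_kw: float) -> float:
    """Non-IT power (cooling, lighting, distribution losses) implied by a PUE."""
    return (pue_value - 1.0) * it_kw


# Hypothetical facility: 1,000 kW of IT load drawing 1,090 kW in total.
it_load_kw = 1000.0
facility_pue = pue(total_facility_kw=1090.0, it_kw=it_load_kw)

print(f"PUE: {facility_pue:.2f}")
print(f"Overhead: {overhead_kw(facility_pue, it_load_kw):.0f} kW")
```

At a PUE of 1.09, only about 9 percent of the IT load is left as overhead to optimize, which is why further gains are hard to find and why PUE alone says little about what that overhead costs.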

“PUE served data centers well during the rise of cloud computing…and it will continue to be useful,” says Jeremy Rodriguez, Senior Director of Data Center Engineering at NVIDIA. “But it’s insufficient in today’s generative AI era, when workloads and the systems running them have changed dramatically.”

AI workloads are becoming more complex, making PUE increasingly insufficient. The power-to-cooling cost ratio offers a clearer measure of the thermal and financial strain modern computing places on infrastructure.

Why Air Cooling Is Losing Ground

Most enterprise data centers still rely on air-based cooling, but at 50 kW per rack and beyond, air simply can’t manage heat loads efficiently. Fans become louder, CRAHs consume more power, and thermal stress shortens hardware lifespan.

This is why more operators are now shifting to liquid cooling, including immersion and direct-to-chip (D2C) systems.

A 2025 vendor-neutral TCO study by The Datacenter Economist shows that immersion cooling initially costs 20–30 percent more in capital expenditure than air systems, but delivers up to 40 percent savings in operating expenditure in dense AI environments.
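A rough way to weigh that trade-off is a simple payback calculation: the extra capex divided by the annual opex saved. The Python sketch below is an illustration under stated assumptions, not the study’s model. The air-cooling capex and annual opex inputs are hypothetical placeholders, and the 25 percent premium and 40 percent saving are picked from the quoted ranges (the 40 percent is the upper bound, so the result is a best case).

```python
def payback_years(
    air_capex: float,
    air_annual_opex: float,
    capex_premium: float,
    opex_saving: float,
) -> float:
    """Years for immersion's opex savings to recover its extra capex.

    capex_premium and opex_saving are fractions (0.25 means 25 percent).
    Ignores discounting, maintenance differences, and fluid costs.
    """
    if air_annual_opex <= 0 or opex_saving <= 0:
        raise ValueError("opex and opex_saving must be positive")
    extra_capex = air_capex * capex_premium
    annual_saving = air_annual_opex * opex_saving
    return extra_capex / annual_saving


# Hypothetical inputs, USD millions per MW (NOT from the study).
years = payback_years(
    air_capex=1.1,         # placeholder air-cooling capex
    air_annual_opex=0.5,   # placeholder annual cooling opex
    capex_premium=0.25,    # midpoint of the quoted 20-30 percent range
    opex_saving=0.40,      # upper bound of the quoted savings
)
print(f"Payback: {years:.2f} years")
```

Swapping in a facility’s own capex and opex figures, and a more conservative savings rate, gives a quick sense of how sensitive the payback period is to rack density and energy prices.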

“By removing unnecessary components and creating an obstruction-free environment, the fluid can flow freely over the hardware, improving heat dissipation and thermal performance. This design ensures optimal cooling across all components, extending hardware lifespan by avoiding the thermal stress caused by fluctuating fan speeds in air-cooled systems,” explains Darcy Letemplier, Vice President of Global Engineering at Hypertec.

Layered Workloads Need Layered Cooling

AI workloads are not uniform. Each layer, from training to inference to fine-tuning, carries a unique power and heat profile. That makes one-size-fits-all cooling not just inefficient but expensive.

A smarter approach is to match thermal systems to workload intensity, immersing high-power training clusters, using D2C for steady inference loads, and reserving air cooling for lighter or legacy workloads.

| AI Layer | Power Demand | Heat Load | Recommended Cooling Strategy |
| --- | --- | --- | --- |
| Model Training | Very High | Sustained | Immersion / Direct-to-Chip (D2C) |
| Inference | Moderate | Continuous | Direct-to-Chip |
| Edge / Light Tuning | Variable | Burst | Air or Hybrid |

Note: This table is illustrative; it pairs each workload type with a cooling method based on power intensity and duty cycle.
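For teams that want to encode this mapping in capacity-planning tooling, the table can be expressed as a small lookup. The Python sketch below simply mirrors the rows above. The `CoolingProfile` type and `recommend_cooling` function are hypothetical names, and a real planner would also weigh rack density, site constraints, and cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoolingProfile:
    power_demand: str
    heat_load: str
    strategy: str


# Rows copied from the table above; keys are lowercased layer names.
COOLING_BY_LAYER = {
    "model training": CoolingProfile(
        "Very High", "Sustained", "Immersion / Direct-to-Chip (D2C)"
    ),
    "inference": CoolingProfile("Moderate", "Continuous", "Direct-to-Chip"),
    "edge / light tuning": CoolingProfile("Variable", "Burst", "Air or Hybrid"),
}


def recommend_cooling(layer: str) -> str:
    """Return the table's recommended cooling strategy for an AI layer."""
    key = layer.strip().lower()
    if key not in COOLING_BY_LAYER:
        raise ValueError(f"unknown AI layer: {layer!r}")
    return COOLING_BY_LAYER[key].strategy


for name in ("Model Training", "Inference", "Edge / Light Tuning"):
    print(f"{name}: {recommend_cooling(name)}")
```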

This alignment isn’t theoretical; it’s quickly becoming standard practice. In their 2025 guide to building AI “factories,” NextDC notes:

“Direct chip cooling, two-phase immersion baths, and rear-door heat exchangers are deployed to dissipate heat that air cooling simply cannot handle.”

Vertiv makes a similar observation, noting that rack densities of 50 kW and above are “pushing the capabilities of traditional room cooling methods to their limits.”

Cooling Is Now an ESG Flashpoint

Cooling is no longer just an operational expense; it is becoming a climate liability and a regulatory priority.

In Singapore, multiple outlets report that the government imposed a moratorium on new data centre approvals, citing concerns over energy and water usage. In Ireland, EirGrid, the state-owned electric power transmission operator, has halted new connections in the Dublin region until 2028 to prevent power shortages, as data centres have come to consume over a fifth of the country’s electricity.

In both cases, cooling systems are in the spotlight.

Tech giants are already responding. Google treats data center sustainability as non-negotiable: what began as an environmental initiative is now a foundational mandate.

Urs Hölzle, Google’s Senior Vice President of Technical Infrastructure, explains, “24/7 carbon-free energy (CFE) is far more complex and technically challenging than annually matching our energy use with renewable energy sources… No company of our size has achieved 24/7 CFE before.”

Microsoft also reports that its latest AI-optimized data centers are engineered to use zero water for cooling, with the explicit goal of minimizing freshwater use as compute demands grow. As stated in its 2025 Environmental Sustainability Report:

“Our new datacenters are designed and optimized to support AI workloads and will consume zero water for cooling. This initiative aims to further reduce our global reliance on freshwater resources as AI compute demands increase.”

For enterprises, power-to-cooling ratios are no longer just technical or TCO metrics; they’re fast becoming ESG disclosures in disguise, subject to audits, regulatory oversight, and growing public scrutiny.

Final Take

Cooling is no longer a backend operational detail; it’s a front-line constraint shaping the economics, sustainability, and scalability of enterprise AI infrastructure. As workloads intensify and rack densities rise, the power-to-cooling cost ratio has emerged as a more telling metric than legacy efficiency benchmarks like PUE.

This shift carries real financial and environmental weight. Whether it’s immersion systems keeping training clusters stable, or regulatory caps in Singapore and Dublin forcing design pivots, the message is clear: cooling is now a strategic design variable, not a downstream consideration.

For enterprises investing in AI at scale, this means recalibrating infrastructure plans, not just around performance and cost, but around heat.

Because in this new AI era, the bottleneck isn’t compute; it’s what you do with the heat it leaves behind.