The line between theoretical and real computing power is beginning to blur. Quantum machines, once seemingly unimaginable, are now close to achieving in seconds, for certain problems, what classical supercomputers would need millennia to process, a shift that will redefine how data centres are built and run.

As this shift accelerates, quantum computing is moving from a laboratory curiosity to a technology with tangible impact. It is forcing data centre operators to confront a new class of workloads and infrastructure demands. Classical architectures, built for sequential processing, now struggle to keep pace with the demands of AI training, optimization, and large-scale simulations. Quantum systems approach these problems differently, using qubits that exist in multiple states at once to explore many possibilities simultaneously, a capability that allows them to tackle certain computations far beyond the reach of classical machines.

For operators, the urgency is clear. Quantum hardware brings new physical demands, from cryogenic cooling and electromagnetic shielding to redesigned power and spatial layouts. But the shift runs deeper than infrastructure. It will reshape how workloads are orchestrated, how facilities operate, and even how revenue models evolve. Quantum-as-a-service platforms, specialized compute zones, and accelerated research ecosystems are already emerging as signs of what’s ahead. Preparing for this transition is no longer a technical choice; it is a strategic imperative for competitiveness in the next era of computing.

What is Quantum Computing and How Does It Differ from Classical Computing?

Quantum computing represents a fundamental shift in how information is processed, offering capabilities far beyond those of classical systems. Unlike traditional computers, which rely on bits that exist as either 0 or 1, quantum computers use qubits. Through superposition, a qubit can represent multiple states simultaneously, allowing quantum machines to explore numerous computational paths at once.

Combined with entanglement, where qubits become interdependent regardless of distance, this enables operations that classical architectures cannot perform efficiently.
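As a rough illustration of superposition and entanglement, the sketch below simulates one and two qubits as plain lists of amplitudes. It is a toy classical simulation, not how a real quantum device is programmed; the helper names (`apply_gate`, `tensor`, `probabilities`) are made up for this example.

```python
import math

def apply_gate(gate, state):
    """Multiply a gate matrix by a state vector (both plain Python lists)."""
    return [sum(g * amp for g, amp in zip(row, state)) for row in gate]

def tensor(a, b):
    """Combine two single-qubit states into one two-qubit state."""
    return [x * y for x in a for y in b]

def probabilities(state):
    """Born rule: each outcome's probability is the squared amplitude magnitude."""
    return [abs(amp) ** 2 for amp in state]

S = 1 / math.sqrt(2)
HADAMARD = [[S, S], [S, -S]]   # puts |0> into an equal superposition
CNOT = [                        # flips the second qubit when the first is 1
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
]

zero = [1.0, 0.0]                             # a classical-like "0" state
plus = apply_gate(HADAMARD, zero)             # superposition of 0 and 1
bell = apply_gate(CNOT, tensor(plus, zero))   # entangled pair

plus_probs = probabilities(plus)   # outcomes: 0, 1
bell_probs = probabilities(bell)   # outcomes: 00, 01, 10, 11

print("single qubit:", [round(p, 3) for p in plus_probs])
print("entangled pair:", [round(p, 3) for p in bell_probs])
```

The single qubit is equally likely to be read as 0 or 1, while the entangled pair only ever yields 00 or 11: measuring one qubit fixes the outcome of the other.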


Purpose: Show how a qubit can exist in multiple states (superposition) compared to a classical bit.

Classical Bit vs Quantum Bit (a Qubit in Superposition)

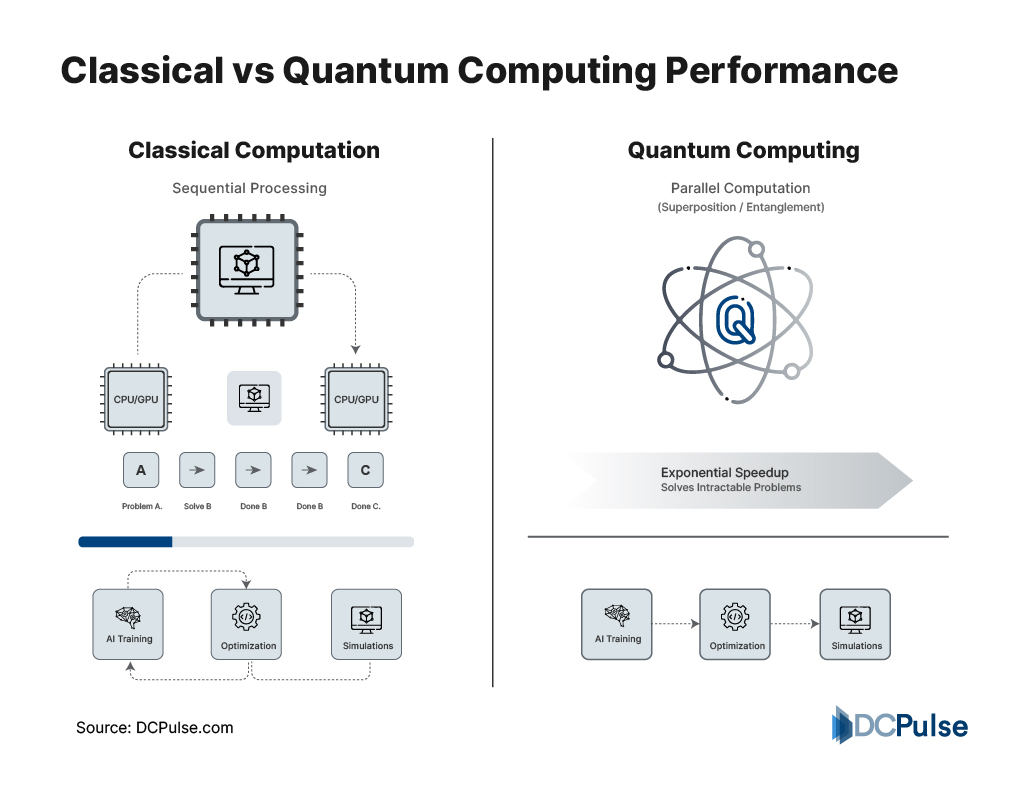

Classical computers execute operations sequentially, which is sufficient for many everyday tasks, but they struggle with AI training, optimization problems, and large-scale simulations. Quantum computers, by processing information in parallel, can in principle approach certain of these workloads more efficiently.

Purpose: Illustrate how classical sequential computing differs from quantum parallel computation, highlighting workloads like AI training, optimization, and large-scale simulations.

Classical vs Quantum Computing Performance

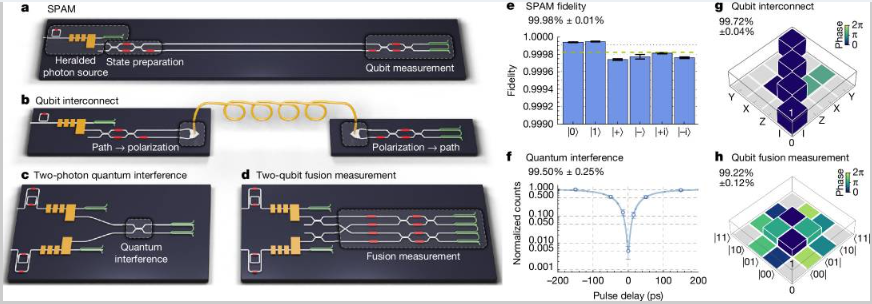

Quantum computing isn’t a single, uniform technology; it comes in several distinct forms. Gate-based systems are the most common, using quantum logic gates to control qubits and perform a wide range of computations, much like the processors in classical computers, but on a quantum level. Quantum annealing, on the other hand, is built for solving optimization problems by guiding the system toward its lowest energy state, essentially finding the most efficient solution among countless possibilities. Then there’s photonic quantum computing, which encodes qubits in light particles, or photons. This approach is especially promising because it could scale more easily and offer greater resistance to errors.
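To make the "lowest energy state" idea behind quantum annealing concrete, here is a minimal classical sketch that scores every configuration of a tiny three-spin problem and picks the one with the lowest energy. The couplings `J` and fields `h` are invented values, and the exhaustive search is only a stand-in: an annealer is meant to reach the low-energy configuration physically, without enumerating all possibilities.

```python
from itertools import product

# Hypothetical 3-spin problem; couplings and local fields are made-up values.
J = {(0, 1): -1.0, (1, 2): -1.0, (0, 2): 1.0}
h = [0.5, 0.5, -0.5]

def ising_energy(spins):
    """Energy of one configuration: pairwise couplings plus local fields."""
    coupling = sum(j * spins[a] * spins[b] for (a, b), j in J.items())
    field = sum(hi * si for hi, si in zip(h, spins))
    return coupling + field

# Brute force: 2**n candidates, which is only feasible for very small n.
candidates = list(product([-1, 1], repeat=len(h)))
best = min(candidates, key=ising_energy)

print("configurations checked:", len(candidates))
print("lowest-energy configuration:", best, "energy:", ising_energy(best))
```

The number of candidates doubles with every added spin, which is why optimization problems of realistic size are attractive targets for annealing hardware.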

Understanding these principles is essential for data centre operators and technology strategists: quantum computing will reshape workloads, infrastructure requirements, and operational strategies, and preparing early could provide a competitive advantage. Emerging commercial deployments already hint at its transformative potential.

How Must Data Centres Evolve to Handle Quantum Computing and Its Workloads?

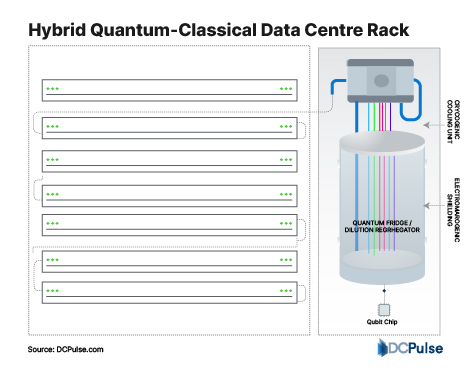

Quantum computing introduces a new class of computational demands that data centres cannot meet with traditional infrastructure alone. Qubits, unlike classical bits, require extremely stable environments, precise temperature control, and protection from electromagnetic interference. As a result, operators must rethink not only cooling and shielding but also power distribution, spatial layout, and hybrid integration with classical systems.

Hybrid Quantum-Classical Data Centre Rack

These physical adaptations are inseparable from operational transformations. Quantum workloads often run many tasks at once, rely on probability rather than fixed outcomes, and require low-latency connectivity and sophisticated orchestration between quantum and classical systems. Hybrid scheduling helps both types of machines work together smoothly, keeping performance high and operations stable.

Orchestration Between Classical and Quantum Workloads

(Hybrid workflow diagram showing orchestration between classical and quantum workloads, highlighting the interplay between classical processors and quantum co-processors.)
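The interplay between classical and quantum systems can be sketched as a simple loop: a classical optimizer proposes a parameter, a quantum job returns a noisy, probability-based estimate, and the classical side updates the parameter. In the sketch below, `run_quantum_job` is a hypothetical stand-in that simulates a one-qubit measurement with random sampling; it does not call any real quantum service, and the step count, shot count, and learning rate are arbitrary choices.

```python
import math
import random

def run_quantum_job(theta, shots, rng):
    """Stand-in for a QPU call: simulate measuring Ry(theta)|0> in the Z basis."""
    p_zero = math.cos(theta / 2) ** 2
    zeros = sum(1 for _ in range(shots) if rng.random() < p_zero)
    # Estimate <Z> = P(0) - P(1) from the sampled counts.
    return (2 * zeros - shots) / shots

def hybrid_minimize(steps=60, shots=2000, learning_rate=0.4, seed=7):
    """Classical gradient descent driven by noisy 'quantum' estimates."""
    rng = random.Random(seed)
    theta = 0.3
    for _ in range(steps):
        # Parameter-shift rule: the gradient comes from two extra quantum jobs.
        forward = run_quantum_job(theta + math.pi / 2, shots, rng)
        backward = run_quantum_job(theta - math.pi / 2, shots, rng)
        theta -= learning_rate * 0.5 * (forward - backward)
    return theta, run_quantum_job(theta, shots, rng)

theta, energy = hybrid_minimize()
print(f"theta = {theta:.3f}, estimated <Z> = {energy:.3f}")
```

Every optimizer step waits on two quantum jobs, so the round-trip time between the classical host and the quantum processor directly limits how fast the loop converges. This is one reason low-latency connectivity matters for hybrid scheduling.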

The implications extend beyond infrastructure. Data centres must adopt new operational strategies, from monitoring and error correction to workload prioritization and security. Early deployments of quantum-as-a-service platforms demonstrate how specialized compute zones and integrated software layers can accelerate research, AI training, and optimization tasks.

Preparing for these changes is not optional. Facilities that proactively integrate quantum hardware and operational practices will gain a competitive advantage, enabling faster AI inference, complex simulations, and optimized workflows. The combination of physical infrastructure and adaptive operations defines the next generation of data centres, ones capable of supporting the quantum revolution while seamlessly coexisting with classical computing environments.

Who Are the Companies Driving Quantum Computing, and How Are Their Initiatives Shaping the Future of Data Centres?

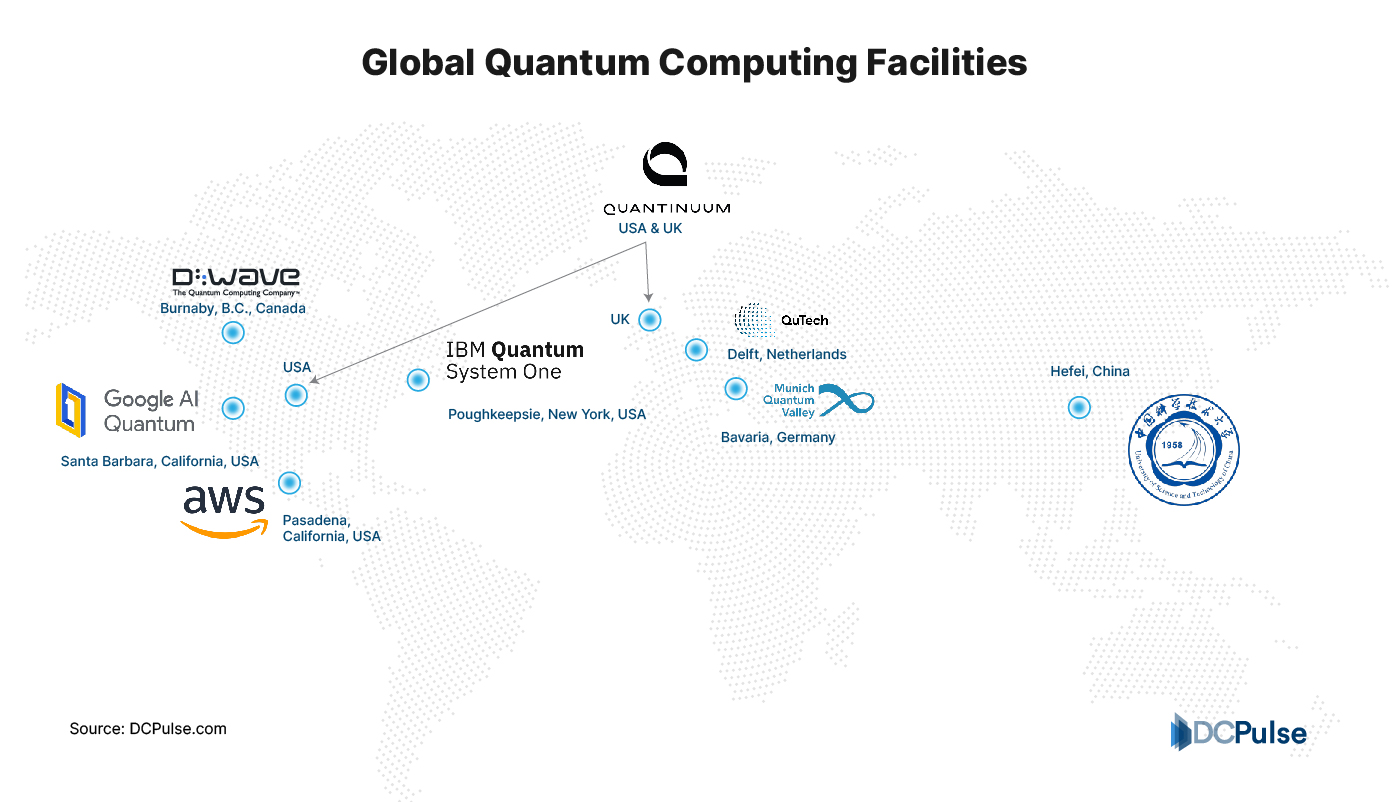

Quantum computing is no longer confined to laboratories or theoretical papers; it is rapidly moving into tangible, operational deployments. Leading technology companies are building the hardware, software, and infrastructure required to integrate quantum systems into real-world computing environments, providing a blueprint for the next generation of data centres.

Global Quantum Computing Facilities

PsiQuantum is pursuing a million-qubit goal using photonic qubits, a technology that operates at room temperature but requires precise optical components and low-loss photonic circuits. Their pilot facility in Chicago is designed to test large-scale photonic integration, demonstrating how quantum hardware can coexist with classical infrastructure.

Two-qubit fusion measurement on an integrated photonic circuit, illustrating PsiQuantum’s low-loss optical architecture:

Quantum Operation Performance Metrics (Not Accounting for Loss)

| | Metric | Experimental Value (%) | Notes |
| --- | --- | --- | --- |
| Single-qubit | SPAM fidelity | 99.98 ± 0.01 | Standard measurement |
| | | 99.996 ± 0.003 | Measured with bright light and off-chip detectors |
| | Chip-to-chip fidelity | 99.72 ± 0.04 | Indicates inter-chip communication quality |
| Two-qubit | Quantum interference visibility | 99.50 ± 0.25 | Reflects coherence in two-qubit operations |
| | Bell fidelity | 99.22 ± 0.12 | Measures entanglement quality between qubits |

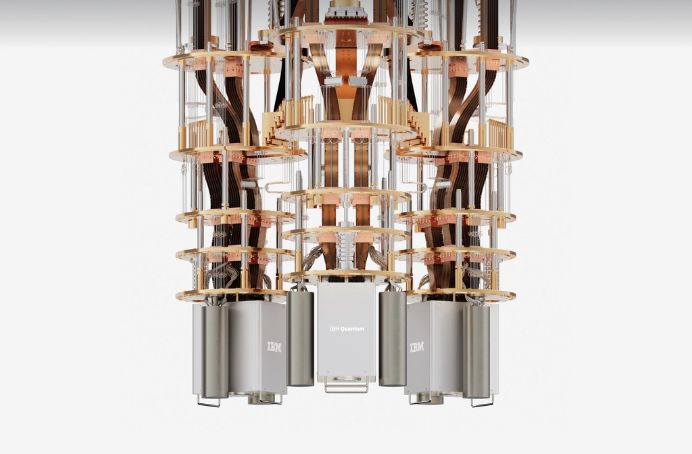

IBM has advanced superconducting qubits through its European Quantum Data Centre, which houses IBM Quantum System Two. The facility combines hybrid classical-quantum orchestration, enabling experimental workloads and research pipelines to run alongside conventional servers. IBM’s approach highlights how scaling quantum machines impacts power distribution, cooling strategies, and hybrid software layers.

IQM Quantum Computers, based in Munich, focuses on scalable superconducting qubits. Their quantum facility emphasises modular designs that facilitate both qubit growth and integration with classical compute clusters, providing insights into spatial planning and energy management for future hybrid data centres.

OQC, in collaboration with Digital Realty, is building a quantum-AI data centre in New York City. This hybrid facility integrates early-stage quantum processors with AI workloads, demonstrating the operational and infrastructural adjustments required to run quantum systems alongside high-performance classical servers.

Amazon Braket, as a managed quantum computing service, allows organizations to access multiple qubit architectures via the cloud. While not a physical data centre operator in the traditional sense, Braket provides a model for hybrid orchestration, enabling companies to test quantum workloads and integrate them with existing infrastructure.

Together, these initiatives highlight how quantum computing is shaping data centre evolution. Operators can learn from these early deployments: considerations around cooling, shielding, hybrid scheduling, and low-latency interconnects are no longer theoretical.

The actions of these companies today are providing a roadmap for the data centres of tomorrow, demonstrating both the technical challenges and operational strategies required to integrate quantum computing at scale.

What’s Holding Quantum Back?

Despite rapid advances, quantum computing still faces hurdles that keep it from becoming a routine tool for data centres. At the core is the fragility of qubits. Even the slightest disturbance, such as a vibration, a stray magnetic field, or a tiny temperature shift, can corrupt calculations in microseconds. This makes error correction essential, but it also creates a major scaling challenge.

Traditional approaches, such as the surface code, require hundreds of physical qubits to reliably encode a single logical qubit. IBM’s newer qLDPC (quantum low-density parity-check) codes are a significant improvement, reducing the number of extra qubits needed while keeping error rates manageable.

For instance, IBM’s Gross Code [[144,12,12]] encodes 12 logical qubits in 144 data qubits (roughly as many additional check qubits are needed to measure errors), far fewer than older schemes would require.
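A back-of-the-envelope comparison shows where the saving comes from. The sketch below assumes a rotated surface code patch with d² data qubits and d² − 1 check qubits per logical qubit, and assumes the Gross Code pairs its 144 data qubits with 144 check qubits; both layouts are stated assumptions for illustration, and real devices may need further qubits for routing and logical operations.

```python
def surface_code_total(distance, logical_qubits):
    """Physical qubits for independent rotated surface code patches.

    Assumption: each patch uses d^2 data qubits plus d^2 - 1 check qubits.
    """
    per_logical = distance ** 2 + (distance ** 2 - 1)
    return per_logical * logical_qubits

def gross_code_total():
    """Physical qubits for the [[144, 12, 12]] Gross Code.

    Assumption: 144 data qubits plus 144 check qubits.
    """
    data_qubits, check_qubits = 144, 144
    return data_qubits + check_qubits

logical_qubits, distance = 12, 12
surface_total = surface_code_total(distance, logical_qubits)
gross_total = gross_code_total()

print("surface code:", surface_total, "physical qubits")
print("gross code:  ", gross_total, "physical qubits")
print("ratio:        %.1fx" % (surface_total / gross_total))
```

Under these assumptions, protecting 12 logical qubits at distance 12 takes roughly an order of magnitude fewer physical qubits with the Gross Code than with separate surface code patches.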

Error-Correction Overhead Comparison

| Code | Scaling of Physical Qubits (n) to Achieve Distance (d) | Accuracy |
| --- | --- | --- |
| Surface Code | $n \propto d^{2} \cdot \mathrm{polylog}(d)$ | Highly accurate. This is the widely accepted theoretical overhead for the 2D surface code, showing the quadratic growth. |
| qLDPC Codes | $n \propto d^{\epsilon} \cdot \text{constant}$ | Highly accurate (theoretical). This represents the theoretical class of qLDPC codes, where the goal is to achieve near-linear scaling ($\epsilon \approx 1$ or slightly greater). |
| Gross Code | $n/k \propto \text{constant}$ (goal) | Accurate (goal-oriented). The most advanced qLDPC constructions, like the Gross Code, are designed to achieve a scaling where the overhead $n/k$ is constant (or "almost constant" as IBM terms it), meaning the number of physical qubits required per logical qubit does not grow with increasing code distance. This is the Holy Grail of quantum error correction (QEC). |

Technical obstacles, however, are only part of the story. Quantum computing is tightly regulated. The U.S. Department of Commerce has imposed export controls covering quantum hardware, software, key components, and related materials. These controls limit exports and re-exports, affecting international collaboration and slowing commercial deployment. For data centres and enterprises looking to work across borders, these rules are a real operational constraint.

Global policy frameworks add another layer of complexity. The OECD’s 2025 Quantum Technologies Policy Primer highlights how uneven regulatory, governance, and standardization practices across countries create friction for adoption. The primer details national strategies, investment levels, and workforce readiness, showing that some countries are heavily investing and cultivating talent while others lag behind. This fragmented landscape makes it harder for organizations to plan large-scale deployments and align with international standards.

Finally, even with the right hardware and supportive policies, quantum computing requires specialized human expertise. There simply aren’t enough trained professionals to meet the growing demand, creating a talent bottleneck that slows research, development, and operational scaling. For data centres, this human factor is as critical as the machines themselves.

Taken together, these challenges (fragile qubits, heavy error-correction overhead, strict export regulations, uneven policy frameworks, and a global talent shortage) define the frontier of quantum computing today.

The technology is making undeniable strides, but practical, large-scale adoption remains a complex puzzle, demanding careful navigation across technical, regulatory, and human landscapes.

The Quantum Data Centre of Tomorrow

The road ahead for data centres is both exciting and challenging. While fragile qubits, error-correction overheads, regulatory restrictions, and talent gaps define the current frontier, they also provide a clear roadmap for where action is needed.

Data centres that proactively embrace quantum technologies will do more than adapt; they will redefine efficiency, capability, and competitiveness. Hybrid architectures, combining classical and quantum systems, will become standard, with specialized racks, advanced cooling, and low-latency interconnects supporting workloads that were previously unimaginable. Quantum-as-a-service models and integrated orchestration software will transform operational strategies, allowing facilities to balance classical tasks with quantum-accelerated processes seamlessly.

Strategic investments in talent, infrastructure, and regulatory compliance will be equally important. Operators who build skilled teams, align with global standards, and anticipate policy and export challenges will be poised to leverage quantum’s advantages faster and more safely than competitors.

In essence, quantum computing is not just an incremental step; it is a paradigm shift. Data centres of the future will be hybrids in every sense: hybrid in technology, hybrid in operations, and hybrid in strategy.

Those who prepare today will be the ones defining the next era of computing, turning the promise of quantum from theoretical potential into tangible, real-world performance.