Businesses are moving AI workloads from traditional cloud environments to colocation facilities in 2025. Many early adopters used public cloud services to develop AI and ML applications, but a recent survey shows that IT leaders and business owners are increasingly choosing colocation because it helps them control costs, scale up, and maintain data privacy.

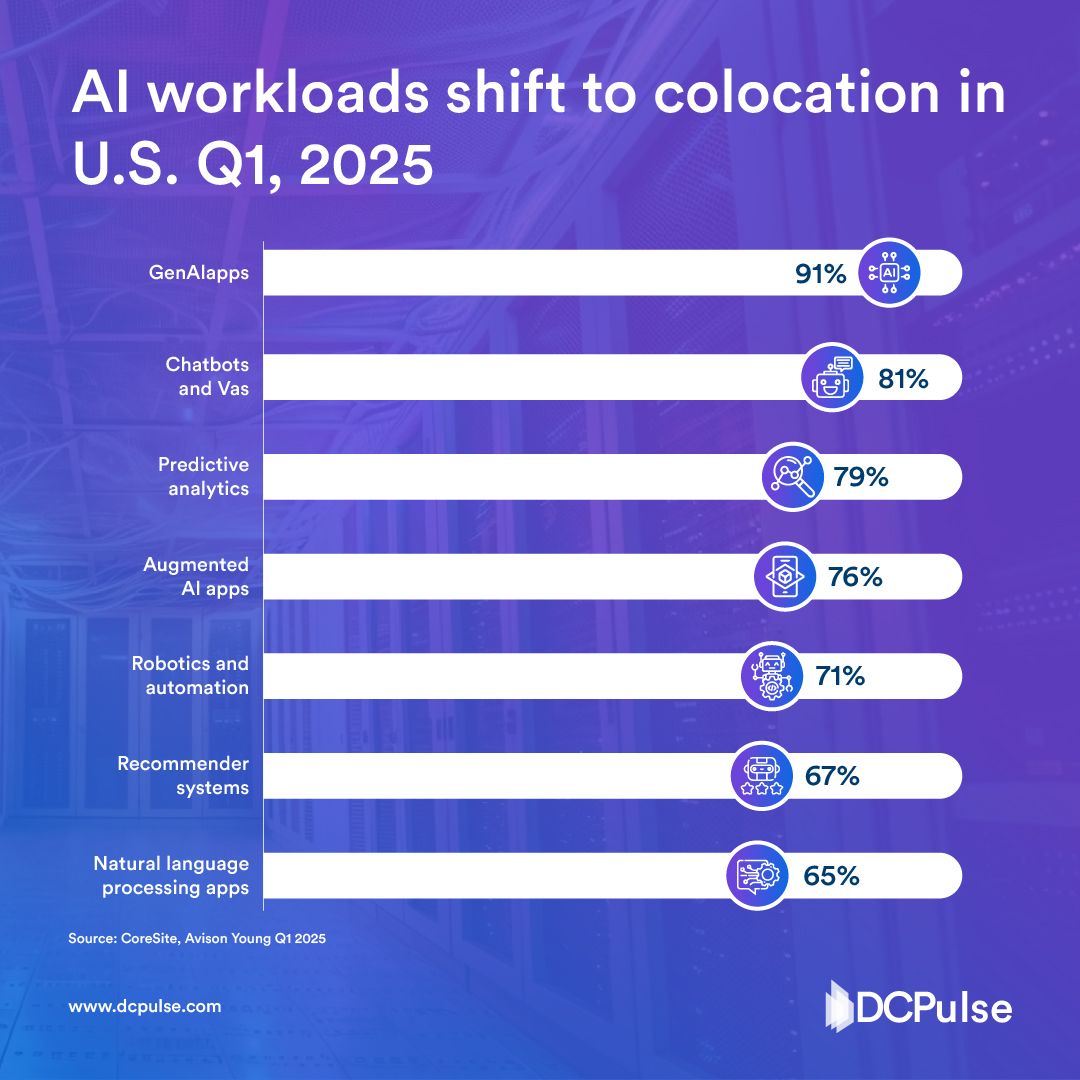

A recent survey shows that 91% of respondents plan to run generative AI (GenAI) apps in colocation settings, followed by chatbots and virtual assistants at 81% and predictive analytics at 79%. Augmented AI apps (76%), robotics and automation (71%), recommender systems (67%), and natural language processing (NLP) apps (65%) also show strong interest in migration. This trend illustrates how companies are adjusting the balance between cloud-native and colocation models to better handle heavy AI workloads while also improving compliance and operational resilience.

AI workloads require substantial computing power, especially GPU clusters. Many businesses cannot upgrade their legacy on-premises data centers to handle the power, cooling, and rack densities that large AI clusters demand. Colocation facilities, especially Tier III+ providers, are investing heavily in liquid cooling, high-density power setups, and modular expansions to attract AI tenants.

Modern colocation campuses are becoming interconnection hubs. Direct fiber routes to major cloud providers (AWS, Azure, GCP) and broad carrier ecosystems make it easier to move AI workloads between on-premises, cloud, and colocation environments without incurring expensive backhaul costs.

According to CoreSite, companies are migrating an increasing volume of AI workloads to colocation data centers. For example, the share of generative AI applications moved to colocation data centers will rise from 42% in 2024 to 45% in 2025. The proportion of recommendation systems will increase from 36% to 47%, and the share of augmented AI applications will grow from 41% to 44%. The primary drivers for this shift are performance, cost, and the ability to leverage both environments.
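The year-over-year figures above mix small and large moves, so it can help to separate the percentage-point change from the relative growth. The following is a minimal sketch that uses only the shares quoted in the paragraph; the names `shares`, `change`, and `summarize` are illustrative and do not come from the survey.

```python
# Share of each workload type in colocation, in percent: (2024, 2025).
# Values are the ones quoted in the paragraph above.
shares = {
    "generative AI": (42, 45),
    "recommendation systems": (36, 47),
    "augmented AI": (41, 44),
}


def change(before, after):
    """Return (percentage-point change, relative change in percent)."""
    points = after - before
    relative = points / before * 100
    return points, relative


def summarize(data):
    """Map each workload name to its (points, relative) change."""
    return {name: change(before, after) for name, (before, after) in data.items()}


if __name__ == "__main__":
    for name, (points, relative) in summarize(shares).items():
        print(f"{name}: +{points} points ({relative:.1f}% relative growth)")
```

Viewed this way, recommendation systems stand out: an 11-point gain, compared with 3 points each for generative AI and augmented AI applications.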

Many IT teams are choosing colocation over the cloud for AI and ML workloads because these workloads require high compute density and low latency. Colocation data centers also provide greater control over infrastructure location, network configuration, and hardware management. This level of control is generally not available in the public cloud.

Cost efficiency is another reason colocation suits AI. Running AI in the cloud over the long term can be very expensive, particularly for training and inference at scale. Colocation offers a more predictable cost structure and significantly lower data egress fees.

That does not mean businesses will abandon the cloud for AI workloads entirely. A hybrid approach that combines cloud and colocation enables organizations to run each AI workload where it performs best. In this model, colocation forms the foundation for AI infrastructure while still allowing IT teams to leverage a wide range of cloud-native services.