Data centers aren’t just being monitored anymore; they’re being mirrored. In the operations room today, you can often see two facilities on the screen: the physical one outside, and a live digital model running simulations in real time. It predicts airflow shifts, power swings, cooling inefficiencies, and the impact of new workloads before they land.

This shift arrived quietly, pushed forward by rising AI density and the need to run closer to capacity without compromising stability. Where traditional dashboards react, digital twins anticipate. They rehearse failures before they happen and validate changes without touching a single cable or CRAC unit.

For operators under pressure to build faster, run tighter, and waste nothing, this marks the start of something bigger: an intelligence layer woven directly into the infrastructure itself.

What’s Driving the Shift Toward Digital Twins in Data Centers?

For years, digital twins felt like a concept reserved for aerospace and manufacturing. In data centers, they sat at the edges, interesting but not essential. That changed when density, AI loads, and hybrid infrastructure began moving faster than operators could observe them. Today’s facilities don’t just need monitoring; they need foresight. And that’s where digital twins are finding their moment.

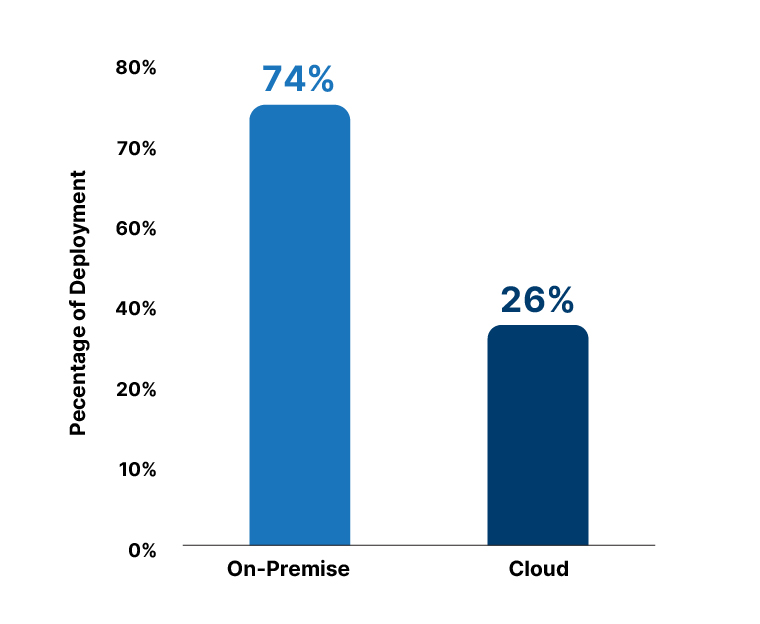

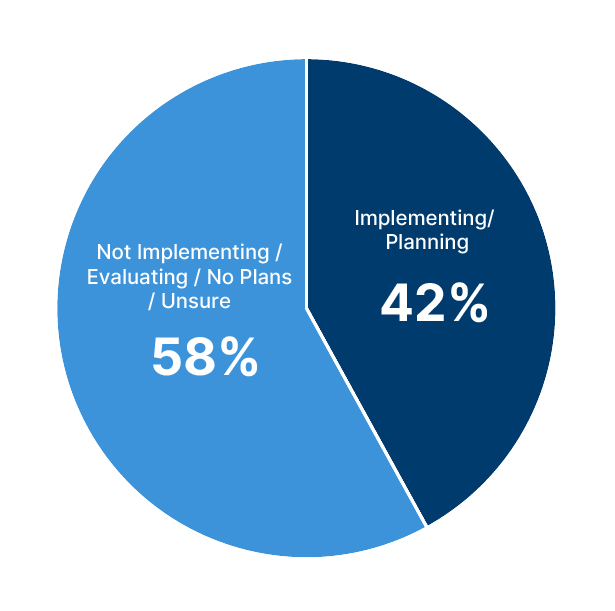

Industry surveys now confirm the shift. Digitalisation World reports that 42% of data center decision-makers are already using or planning to use digital twins to strengthen operational visibility and reduce unexpected failures. It’s a signal that the market has moved beyond experimentation and into early mainstream adoption.

Digital Twin Adoption Among Data Center Decision-Makers

The business case is accelerating. The data center digital twin market, valued at USD 20.19 billion in 2024, is projected to reach USD 396.62 billion by 2033, a CAGR of roughly 39.2%. Investors and operators see twins not as a tool, but as an automation layer, one that models power, cooling, airflow, and failure paths before real-world changes are made.
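The growth rate follows directly from the two endpoint figures. As a quick sanity check, the short sketch below recomputes the compound annual growth rate from the 2024 and 2033 values quoted above; the function names are illustrative, not taken from any market report.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1.0 + rate) ** years


# Figures quoted in the article (USD billions), 2024 to 2033 = 9 compounding years.
START_USD_BN = 20.19
END_USD_BN = 396.62
YEARS = 2033 - 2024

rate = implied_cagr(START_USD_BN, END_USD_BN, YEARS)
print(f"Implied CAGR: {rate:.1%}")
print(f"Projected 2033 value at 39.2%: {project(START_USD_BN, 0.392, YEARS):.1f} USD bn")
```

The endpoints and the stated rate agree with each other to within rounding, which is all this check establishes; it says nothing about whether the forecast itself will hold.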

Digital Twin Market Growth Forecast (2024–2033)

Deployment models offer a clearer picture of just how central digital twins are becoming to day-to-day operations. Research notes that on-premises systems represent over 74% of digital twin adoption today, showing that operators prefer tight control and deep integration with BMS, SCADA, and EMS systems.

As facilities grow more complex and AI workloads more volatile, digital twins are moving from optional to inevitable, becoming the real-time intelligence layer operators rely on to run tighter, faster, and with fewer surprises.

On-Premises vs Cloud Deployment of Digital Twins

The New Intelligence Layer Inside the Data Center

In the digital-twin era, data centers are no longer just physical infrastructure; they’re becoming living simulators.

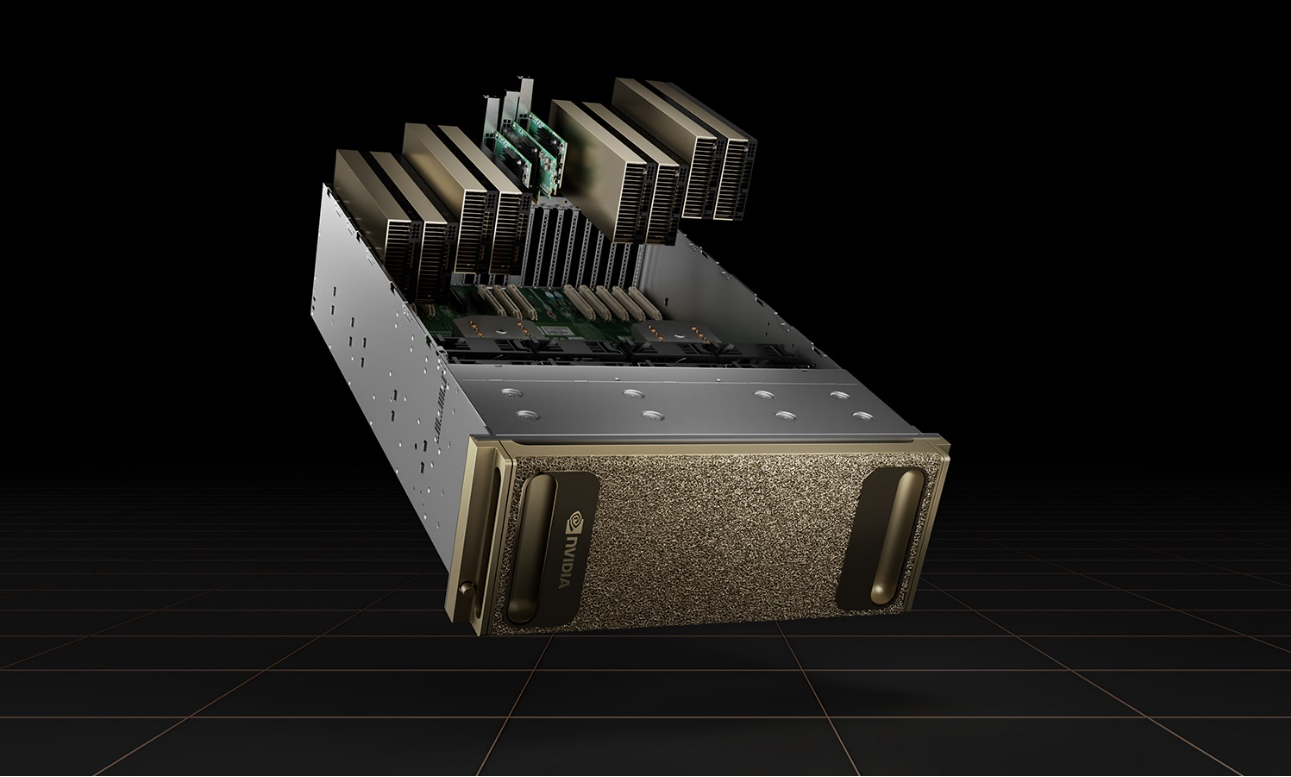

Platforms like NVIDIA Omniverse now let operators run real-time, physics-accurate simulations of cooling, networking, and power systems, synchronized with live telemetry from the facility. Using NVIDIA’s OVX computing systems, teams can build digital replicas of entire data halls where airflow, temperature, and failure scenarios are simulated to reflect real-world behaviour.

On the software front, Siemens is pushing this further with its Teamcenter Digital Reality Viewer, which integrates Omniverse real-time ray tracing into its digital twin stack. That fusion enables photorealistic, physics-based visualization of operational infrastructure, helping engineers validate design changes and catch issues before they occur.

This intelligence layer goes beyond visualization; digital twins are now being used to predict maintenance, optimize energy, and simulate operational changes without risk.

For instance, engineers can “rewind” or fast-forward scenarios, testing cooling strategies or simulating power spikes in a safe virtual space. Omniverse’s integration with AI-based physics (via frameworks like Modulus) makes it possible to run “what-if” models in near real time.
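To make the “what-if” idea concrete, here is a deliberately tiny sketch. It is not how Omniverse or Modulus model a data hall; it assumes a single rack treated as a steady-state energy balance, with textbook values for air density and specific heat, and hypothetical function names. The point is only to show the shape of the question a twin answers: what happens to exhaust temperature if the load changes and the airflow does not?

```python
AIR_DENSITY_KG_M3 = 1.2            # approximate density of air near room temperature
AIR_SPECIFIC_HEAT_J_KG_K = 1005.0  # approximate specific heat of air


def exhaust_temp_c(inlet_c: float, it_load_kw: float, airflow_m3_s: float) -> float:
    """Steady-state exhaust temperature: inlet + P / (rho * V_dot * cp)."""
    if airflow_m3_s <= 0:
        raise ValueError("airflow must be positive")
    mass_flow_kg_s = AIR_DENSITY_KG_M3 * airflow_m3_s
    delta_t = it_load_kw * 1000.0 / (mass_flow_kg_s * AIR_SPECIFIC_HEAT_J_KG_K)
    return inlet_c + delta_t


def what_if(inlet_c: float, airflow_m3_s: float, loads_kw: list[float], limit_c: float):
    """Evaluate candidate loads and flag those whose exhaust exceeds the limit."""
    results = []
    for load in loads_kw:
        temp = exhaust_temp_c(inlet_c, load, airflow_m3_s)
        results.append((load, temp > limit_c))
    return results


# Hypothetical scenario: today's 30 kW rack versus a proposed 45 kW AI workload.
for load_kw, over_limit in what_if(24.0, 2.0, [30.0, 45.0], limit_c=40.0):
    status = "exceeds limit" if over_limit else "within limit"
    print(f"{load_kw:.0f} kW -> {status}")
```

A production twin replaces the one-line energy balance with CFD or a learned surrogate, but the workflow is the same: change an input in the model, read the consequence, and only then touch the hardware.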

The improvements in simulation fidelity and AI-assisted modeling are easier to understand when visualized over time, as shown in the chart below.

Growth in Digital Twin Simulation Fidelity / Compute Power (Conceptual)

These advances are opening the door to autonomous operations: digital twins that not only reflect but also respond. As system workloads and physical infrastructure evolve rapidly, real-time simulation will become a central pillar of operations, not just a design tool.

How Are Leading Vendors Bringing Digital Twins Into Live Operations?

Vendors are not just prototyping digital twins; they’re embedding them into real, mission-critical operations.

Siemens, for instance, has integrated its digital-twin platform with real-time building-management systems, enabling operations teams to visualize and intervene in cooling and power subsystems using physics-accurate models. Its Teamcenter Digital Reality Viewer works hand-in-hand with Omniverse to run live simulations of potential failure events before they occur.

NVIDIA has partnered with hyperscalers and OEMs to deploy Omniverse-powered twins in data halls. These twins continuously ingest telemetry from sensors, run predictive simulations, and alert engineers to potential anomalies, like a sudden spike in airflow or power draw, that might degrade performance or raise risk.
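The ingest-and-alert loop can be illustrated with a much simpler stand-in than a physics simulation. The sketch below is an assumption-laden toy, not any vendor's pipeline: it flags a reading as a spike when it sits more than a chosen number of standard deviations away from the trailing window of recent readings. The function name, window size, and threshold are all hypothetical.

```python
from collections import deque
from statistics import mean, stdev


def detect_spikes(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate from the trailing-window mean
    by more than `threshold` sample standard deviations."""
    history = deque(maxlen=window)
    alerts = []
    for index, value in enumerate(readings):
        # Only judge a reading once a full window of prior values exists.
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                alerts.append(index)
        history.append(value)
    return alerts


# Hypothetical rack power draw in kW, with one sudden jump.
power_kw = [10.0, 10.2, 9.9, 10.1, 10.0, 10.1, 15.0, 10.0]
print(detect_spikes(power_kw))
```

A statistical check like this only says that something unusual happened. What a twin adds is the next step: running the reading through a model to estimate what it will do to temperatures, breakers, or neighbouring racks.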

Schneider Electric is also driving adoption at scale. Its EcoStruxure platform now includes a “Digital Twin as a Service” offering, where modular data centers come with embedded digital models that monitor and simulate conditions in real time. These models help operators experiment with power distribution or cooling changes without ever touching hardware.

Meanwhile, cloud providers like Microsoft are using their own twins for commissioning and lifecycle planning. Their digital replicas of power and thermal systems enable remote validation of infrastructure designs, helping accelerate deployments and reduce on-site risk.

By coordinating between factory-fabricated modules and real-time digital simulations, these vendors are turning digital twin technology from a theoretical benefit into a production-era standard.

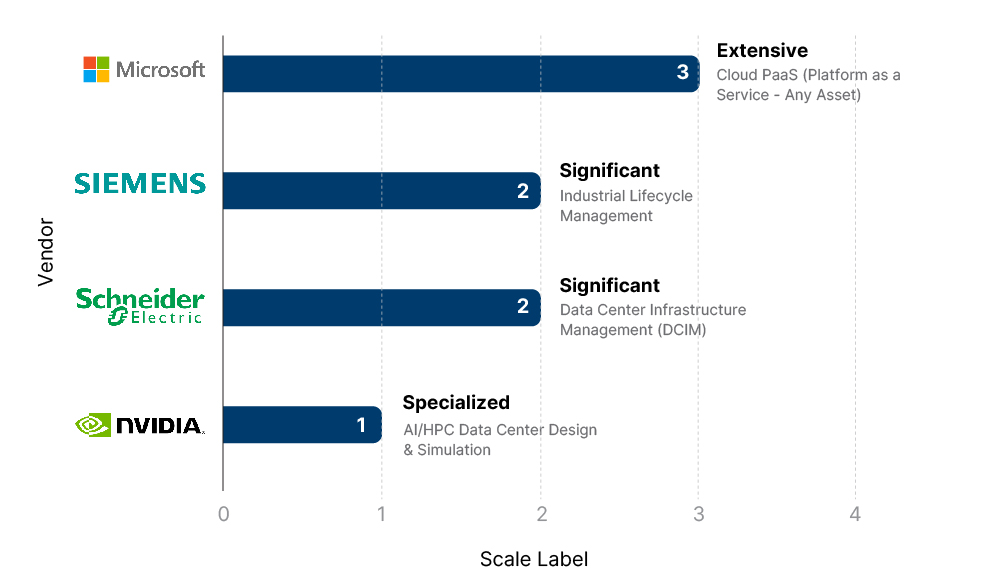

Comparative scale chart showing how each major vendor applies digital twins across its ecosystem, from Microsoft’s cloud-scale twins to Siemens’ industrial lifecycle models and Schneider Electric’s infrastructure-focused twins.

Conceptual Scale & Focus of Digital Twin Deployment by Vendor

What Happens When Digital Twins Become Standard?

Digital twins are moving from optional overlays to operational infrastructure, and the next few years will determine how deeply they shape the industry. As AI density continues to climb and data centers push closer to thermal and electrical limits, the pressure to run facilities with near-real-time intelligence will only increase.

Twins won’t just be for commissioning or design validation; they’ll become the interface through which operators understand capacity, resilience and risk.

The near-term shift will be subtle but decisive. Operators already leaning on twins for airflow and power modeling will extend them into capacity planning, energy optimization, and predictive maintenance. The real change comes when these models begin feeding into automated controls, tuning cooling setpoints, reallocating workloads, and managing power distribution at speeds beyond human response. That transition will divide operators who can sustain AI-era densities from those who are constrained by manual or reactive processes.
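What “feeding into automated controls” might look like in its simplest form is sketched below. This is an illustrative proportional adjustment, not a description of any shipping control system: the twin supplies a predicted inlet temperature, and the supply-air setpoint is nudged against the error, clamped to a safe band. The target, gain, and limits are placeholder values chosen for the example.

```python
def next_supply_setpoint(
    current_setpoint_c: float,
    predicted_inlet_c: float,
    target_inlet_c: float = 25.0,  # hypothetical inlet target
    gain: float = 0.5,             # degrees of setpoint change per degree of error
    min_c: float = 16.0,           # hypothetical lower bound for supply air
    max_c: float = 27.0,           # hypothetical upper bound for supply air
) -> float:
    """Proportional correction of the supply-air setpoint, clamped to [min_c, max_c]."""
    error = predicted_inlet_c - target_inlet_c
    # A positive error means the twin predicts inlets running hot: cool the supply air.
    proposed = current_setpoint_c - gain * error
    return max(min_c, min(max_c, proposed))


# The twin predicts a 28 C inlet after a workload shift; the setpoint steps down.
print(next_supply_setpoint(22.0, 28.0))
```

The clamp matters as much as the gain. An automated loop acting on a model's prediction needs hard limits it cannot exceed, so that a bad forecast produces a bounded mistake rather than an outage.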

The long-term outlook points toward twins that operate continuously, updating from streaming telemetry, integrating with prefab modules, and guiding every expansion or retrofit. For builders, this means future data centers may be commissioned as much in software as in concrete and steel. For operators, it means risk falls, uptime improves, and every decision sits on a model that reflects the actual state of the facility, not a static blueprint.

Digital twins won’t replace good engineering, but they will redefine what “well-run” means. The operators who adapt early will move faster, build cleaner, and carry far fewer unknowns into every phase of their infrastructure lifecycle.