Efficiency Under Pressure

As data center workloads surge in thermal intensity, especially with AI accelerators and high-density GPUs, Power Usage Effectiveness (PUE), the ratio of total facility energy to IT equipment energy, is undergoing a stress test. Although traditionally viewed as a stable measure of infrastructure efficiency, PUE can degrade sharply at elevated rack densities.

This statistical report draws on verified operational data, including liquid vs. air cooling performance and AI-driven energy control systems, to map how PUE responds to shifting rack power densities and cooling thresholds.

It explores how density alters the energy profile of a facility, identifies inflection points where cooling strategies begin to fail, and highlights how liquid cooling and intelligent orchestration stabilize performance.

Time-Series and Density-Based Performance Trends

Over the last decade, data centers have pursued aggressive consolidation. But the move toward ultra-dense racks, surpassing 80 to 100 kW per rack in AI clusters, has begun to disrupt efficiency assumptions. Air-cooled systems, once dominant, now show steep energy penalties beyond 30 kW, while liquid cooling solutions remain stable across density ranges.

Rack Density vs PUE (Air vs Liquid Cooling)

| Rack Density (kW/rack) | PUE (Air Cooling) | PUE (Liquid Cooling) | Notes |
|---|---|---|---|
| 10 | ~1.5 | ~1.2 | Baseline efficiency for both technologies |
| 20 | ~1.6 | ~1.2 | Air PUE begins to rise slightly |
| 40 | ~1.8 | ~1.2 | Air PUE increases as density grows |
| 60 | ~2.0 | ~1.15 | Air PUE spikes; liquid remains stable |
| 80 | ~2.3 | ~1.15 | Air PUE continues to rise sharply |
| 100 | ~2.6 | ~1.15 | Air PUE exceeds practical limits |
| 120 | ~2.9 | ~1.15 | Air PUE spikes significantly |


These figures align with real-world observations. Equinix trials using NVIDIA H100 systems report PUEs near 1.15 in liquid-cooled configurations. Meanwhile, facilities still reliant on air cooling experience thermal bottlenecks and unsustainable chiller loads past 60 kW per rack.
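Because PUE is total facility power divided by IT power, the non-IT overhead for a rack is its IT load multiplied by (PUE − 1). The minimal sketch below applies that to the approximate table values above; the figures are the report's estimates, not measurements from any specific site.

```python
# Approximate PUE estimates by rack density (kW/rack -> PUE), copied from
# the "Rack Density vs PUE" table. Illustrative values, not site data.
AIR_PUE = {10: 1.5, 20: 1.6, 40: 1.8, 60: 2.0, 80: 2.3, 100: 2.6, 120: 2.9}
LIQUID_PUE = {10: 1.2, 20: 1.2, 40: 1.2, 60: 1.15, 80: 1.15, 100: 1.15, 120: 1.15}


def overhead_kw(it_load_kw: float, pue: float) -> float:
    """Non-IT power (cooling, power conversion, etc.) implied by a PUE.

    PUE = total facility power / IT power, so overhead = IT * (PUE - 1).
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_kw * (pue - 1.0)


if __name__ == "__main__":
    for density in sorted(AIR_PUE):
        air = overhead_kw(density, AIR_PUE[density])
        liquid = overhead_kw(density, LIQUID_PUE[density])
        print(f"{density:>3} kW rack: air overhead {air:6.1f} kW, "
              f"liquid overhead {liquid:5.1f} kW")
```

At 100 kW per rack this works out to roughly 160 kW of overhead with air cooling versus roughly 15 kW with liquid cooling, which is why the gap in PUE matters far more at high density than at 10 kW.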

Variable Analysis: Cooling Efficiency and Density Thresholds

Air-cooled data centers generally perform efficiently up to ~20 kW per rack, maintaining PUE values of 1.5–1.6, according to thermal engineering benchmarks from Schneider Electric. However, as density climbs to 40 kW and beyond, airflow turbulence, fan inefficiencies, and compressor loads cause PUE to rise sharply.
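The density table gives estimates only at fixed points. Assuming PUE changes linearly between neighboring points (a simplification; real facilities rarely behave this cleanly), a value for an in-between density such as 30 kW can be estimated with a short interpolation sketch. The function name and data layout here are illustrative, not part of any published tool.

```python
from bisect import bisect_left

# Air-cooling PUE estimates from the density table as (kW/rack, PUE) pairs,
# sorted by density.
AIR_POINTS = [(10, 1.5), (20, 1.6), (40, 1.8), (60, 2.0),
              (80, 2.3), (100, 2.6), (120, 2.9)]


def interpolate_pue(density_kw: float, points=AIR_POINTS) -> float:
    """Linearly interpolate PUE between tabulated densities.

    Assumes points are sorted by density; refuses to extrapolate
    outside the tabulated range.
    """
    densities = [d for d, _ in points]
    if not densities[0] <= density_kw <= densities[-1]:
        raise ValueError("density outside tabulated range")
    i = bisect_left(densities, density_kw)
    if densities[i] == density_kw:
        return points[i][1]  # exact table point
    (d0, p0), (d1, p1) = points[i - 1], points[i]
    return p0 + (p1 - p0) * (density_kw - d0) / (d1 - d0)


if __name__ == "__main__":
    print(f"Estimated air-cooled PUE at 30 kW: {interpolate_pue(30):.2f}")
```

Under the linear assumption, 30 kW lands at about 1.7, just past the ~20 kW point where the benchmarks say air cooling stops performing comfortably.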

Facilities like NVIDIA’s Selene SuperPOD use direct liquid cooling (DLC) to mitigate this thermal stress. While Selene’s specific PUE remains undisclosed, comparable deployments, including Equinix trials with NVIDIA H100 servers, report stable PUE values of around 1.15, even at densities of 80–100 kW.

Direct-to-chip and immersion systems eliminate airflow limitations and scale linearly with thermal intensity, making them essential in next-generation AI and HPC infrastructure.

Cooling Efficiency by Density Threshold (Estimated Ranges)

| Rack Density (kW) | Air Cooling PUE Range | Liquid Cooling PUE Range | Notes |
|---|---|---|---|
| ≤ 20 | 1.5–1.6 | 1.2 | Air performs adequately |
| 40 | ~1.8 | ~1.2 | Air begins to degrade |
| 80 | ~2.3 | ~1.15 | Liquid holds stable |
| 100 | ≥ 2.6 | ~1.15 | Air exceeds feasible limits |

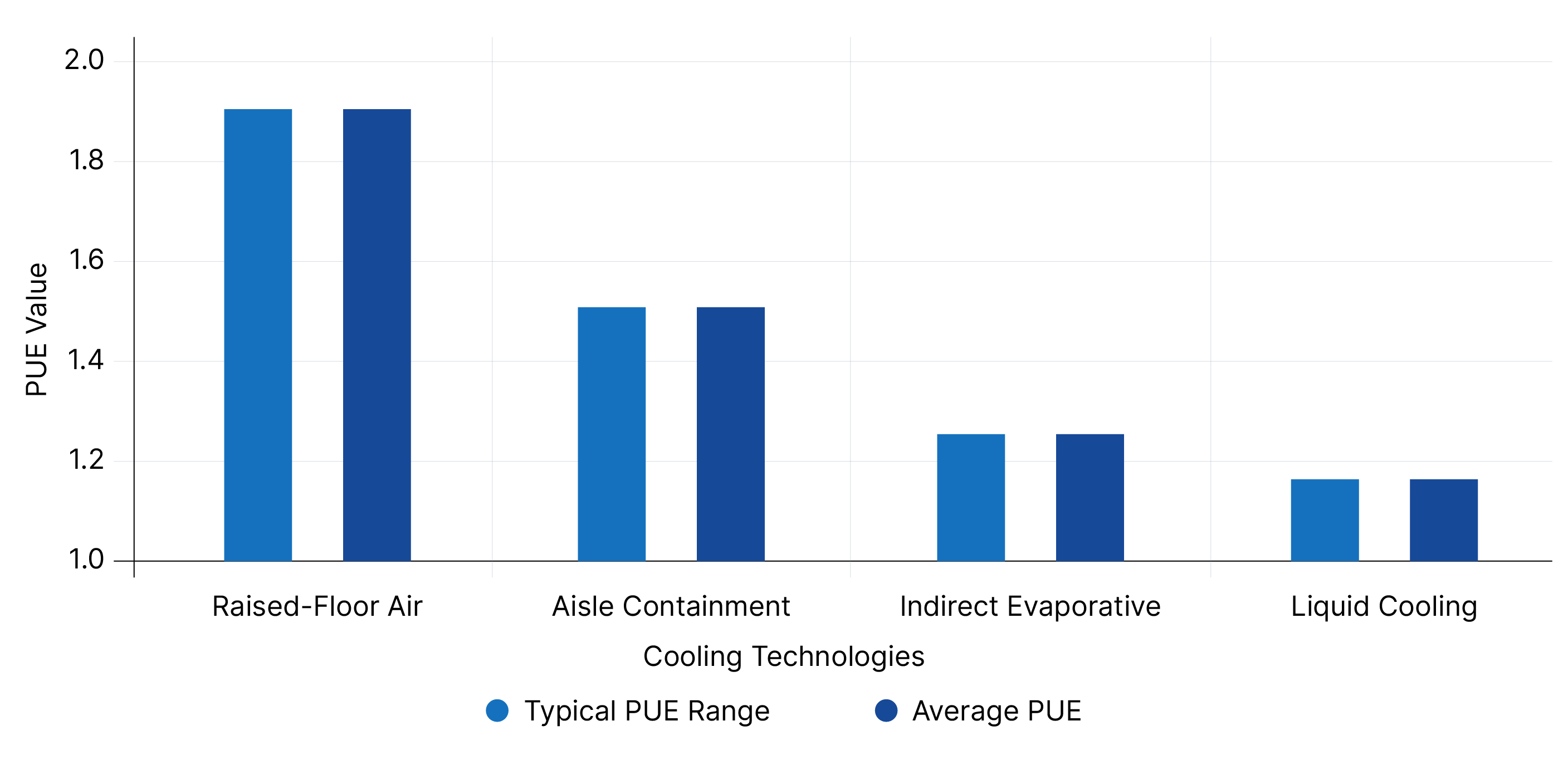

Cooling Technology Impact

The evolution from raised-floor air cooling to hot/cold aisle containment in the 2010s provided modest gains. Yet, even with indirect evaporative cooling, efficiency plateaus near 30 kW per rack.

Microsoft’s Quincy, Washington, facility shows how far air-based approaches can go: its use of air-side economization and indirect cooling achieves a PUE of 1.12–1.20, validated through both internal audits and external disclosures.

Cooling Method vs Average PUE

| Cooling Technology | Typical PUE Range | Average PUE |
|---|---|---|
| Raised-Floor Air | ~1.8–2.0 | ~1.9 |
| Aisle Containment | ~1.4–1.6 | ~1.5 |
| Indirect Evaporative | ~1.2–1.3 | ~1.25 |
| Liquid Cooling (DLC/Immersion) | ~1.15–1.2 | ~1.15 |

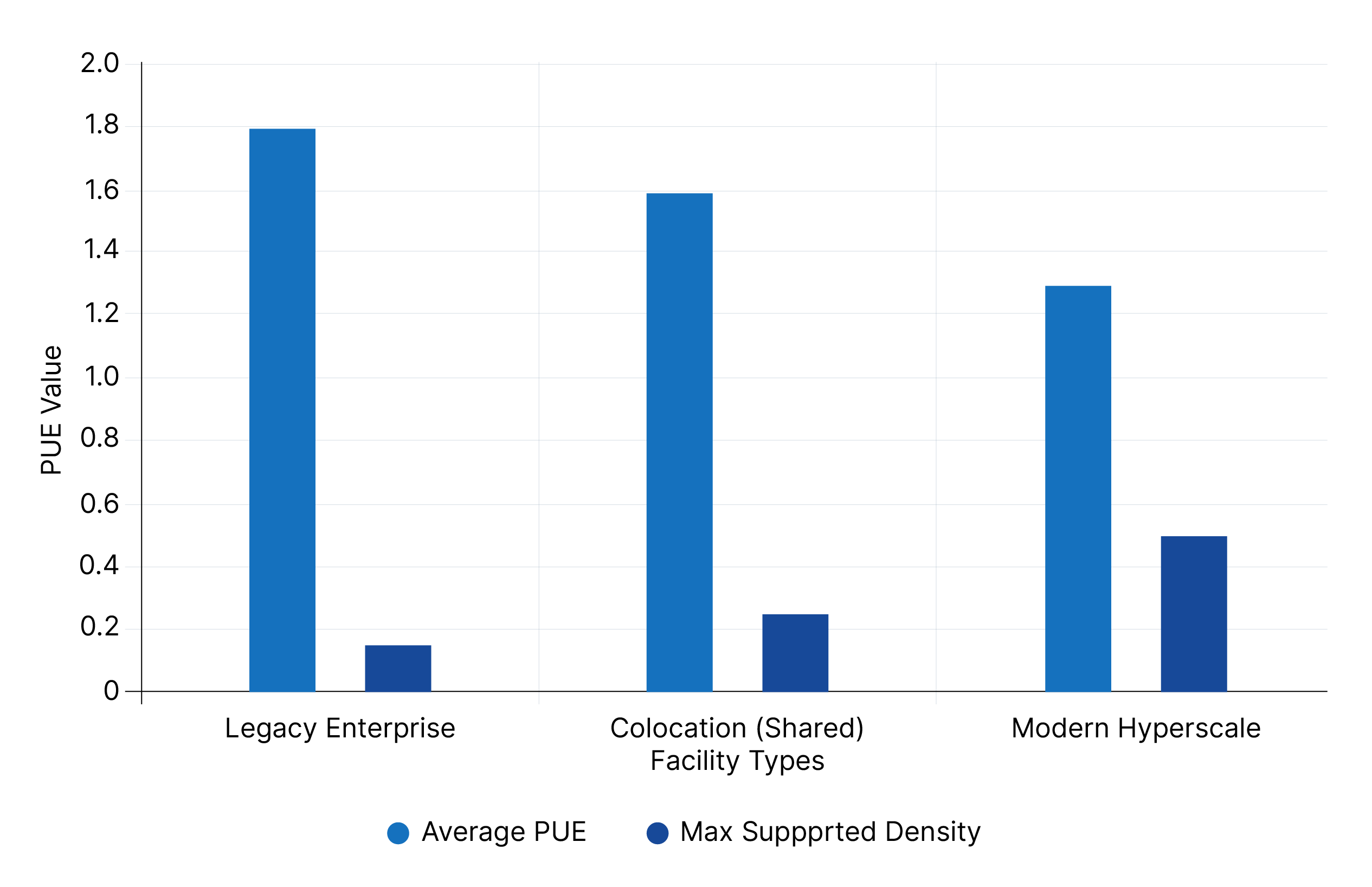
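To translate these average PUE values into energy, consider a hypothetical facility with a constant 1 MW IT load running all 8,760 hours of a year (both figures are illustrative assumptions, not data from the report). Annual non-IT energy is then IT energy × (PUE − 1), as in this minimal sketch:

```python
HOURS_PER_YEAR = 8760  # non-leap year, continuous operation assumed

# Average PUE by cooling method, from the "Cooling Method vs Average PUE" table.
AVERAGE_PUE = {
    "Raised-Floor Air": 1.9,
    "Aisle Containment": 1.5,
    "Indirect Evaporative": 1.25,
    "Liquid Cooling (DLC/Immersion)": 1.15,
}


def annual_overhead_mwh(it_load_mw: float, pue: float,
                        hours: float = HOURS_PER_YEAR) -> float:
    """Annual non-IT energy in MWh for a constant IT load."""
    return it_load_mw * hours * (pue - 1.0)


if __name__ == "__main__":
    it_load_mw = 1.0  # hypothetical constant IT load
    for method, pue in AVERAGE_PUE.items():
        mwh = annual_overhead_mwh(it_load_mw, pue)
        print(f"{method:<32} PUE {pue:<5} overhead {mwh:8,.0f} MWh/yr")
```

Under these assumptions, raised-floor air carries about 7,884 MWh of annual overhead and liquid cooling about 1,314 MWh, a difference of roughly 6,570 MWh per year for the same IT work.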

Regional and Infrastructure Constraints

Older enterprise and colocation environments often cannot capitalize on density-led efficiency. Factors like raised-floor layouts, legacy chillers, and inadequate containment create architectural barriers. Even moderate density increases in these settings lead to escalating cooling overhead.

Retrofitting legacy facilities for high-density workloads is not trivial. Redesigning airflow paths, installing supplemental cooling, or migrating to liquid-cooled zones incurs cost and operational complexity, often without achieving sub-1.5 PUE outcomes.

Facility Type vs Average PUE

| Facility Type | Average PUE | Max Supported Density (Air) | Notes |
|---|---|---|---|
| Legacy Enterprise | ~1.8 | ~10–20 kW | Raised-floor, aging chillers |
| Colocation (Shared) | ~1.6 | ~20–30 kW | Partial containment |
| Modern Hyperscale | ~1.3 | 40–100 kW+ | Liquid-ready, zone-optimized |

Intelligent Orchestration as a Performance Multiplier

High-density facilities increasingly rely on AI-driven thermal management. Reinforcement learning agents optimize airflow, setpoints, and water use in real time.

AI-Driven Cooling Efficiency Gains (Meta, DeepMind)

| Deployment Site | Fan Energy Savings (%) | Cooling Energy Savings (%) | Water Savings (%) |
|---|---|---|---|
| Meta Pilot | 20 | - | 4 |
| DeepMind/Trane | - | 9–13 | - |


Meta’s RL-based controls reduce supply fan energy by ~20%, with ~4% water savings across varied climate zones. DeepMind’s collaboration with Trane Technologies yielded 9–13% cooling energy reductions in live enterprise-scale trials.
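These savings apply to cooling energy, not to PUE directly. The rough sketch below shows how a cooling reduction flows through to PUE, assuming a hypothetical facility at PUE 1.5 in which cooling makes up 80% of the non-IT overhead. Both numbers are assumptions chosen for illustration, not figures from Meta or DeepMind.

```python
def pue_after_cooling_savings(pue: float, cooling_share: float,
                              savings: float) -> float:
    """Estimate PUE after cutting cooling energy by a fraction.

    pue           -- starting PUE (total facility / IT energy)
    cooling_share -- fraction of non-IT overhead that is cooling (0..1)
    savings       -- fractional reduction in cooling energy (0..1)
    IT energy is normalized to 1.0 and assumed unchanged.
    """
    if not (0.0 <= cooling_share <= 1.0 and 0.0 <= savings <= 1.0):
        raise ValueError("shares must be between 0 and 1")
    overhead = pue - 1.0
    cooling = overhead * cooling_share
    other = overhead - cooling  # lighting, UPS losses, etc. left untouched
    return 1.0 + other + cooling * (1.0 - savings)


if __name__ == "__main__":
    # Hypothetical facility: PUE 1.5, cooling = 80% of overhead.
    for savings in (0.09, 0.13):  # ends of the reported DeepMind/Trane range
        new_pue = pue_after_cooling_savings(1.5, 0.8, savings)
        print(f"{savings:.0%} cooling savings -> PUE {new_pue:.3f}")
```

With these assumed inputs, PUE falls from 1.5 to about 1.464 at 9% savings and about 1.448 at 13%; a facility where cooling is a smaller share of overhead would see a proportionally smaller change.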

These platforms go beyond reactive control. When integrated with high-density racks and liquid systems, they dynamically adjust setpoints and airflow, dampening thermal spikes and trimming cooling energy that would otherwise be wasted.

Limitations and Scaling Challenges

While liquid cooling and intelligent control unlock density-led efficiency, adoption remains uneven. High CapEx, fluid compatibility concerns, and retrofitting constraints limit broad deployment.

Furthermore, regional climate differences affect how well evaporative or free cooling can support high-density environments.

The Future of Density-Efficiency Alignment

PUE, once considered a static metric, now reflects a dynamic interplay of density, cooling technology, and control intelligence. Efficiency does not degrade with density; it degrades with mismatched infrastructure.

For next-gen AI and HPC workloads, the only sustainable path forward pairs high-density racks with engineered thermal architectures and AI-enabled optimization.
