For years, data center operations have lived in a reactive world. Sensors report, alarms trigger, and teams respond. Temperature spikes, power anomalies, or equipment failures are addressed after they appear, not before. This model has kept facilities running, but it has also locked operators into a constant cycle of observation and response.

Artificial intelligence is beginning to change that rhythm. As operational data volumes grow and systems become more complex, AI is moving beyond basic monitoring toward anticipation: identifying patterns, forecasting failures, and optimizing performance ahead of time.

The shift from seeing problems to predicting them is subtle, but its implications for uptime, efficiency, and cost are significant. In modern data centers, the value of AI is no longer in watching systems closely but in knowing what will happen next.

Where Does AI Sit in Data Center Operations Today?

Today, AI’s role in most data centers is observational and assistive, not fully predictive. The dominant use case remains enhanced monitoring, with AI layered on top of traditional DCIM (data center infrastructure management), BMS (building management system), and EMS (energy management system) platforms to make sense of rising telemetry volumes from power, cooling, and IT infrastructure.

Modern facilities generate millions of data points per day across temperature, humidity, airflow, power draw, vibration, and equipment status. AI models are increasingly used to detect anomalies, surface correlations humans miss, and reduce alert fatigue by prioritizing events that actually require intervention. This is a meaningful upgrade from static thresholds, but it still operates largely in a reactive mode.
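To make the contrast with static thresholds concrete, here is a minimal sketch, assuming a single temperature sensor and a simple rolling mean and standard deviation as the "learned" baseline. The 27 C limit, the window size, and the names `static_alarm` and `RollingBaseline` are hypothetical; production platforms use far richer models, but the principle of judging a reading against the sensor's own recent behavior is the same.

```python
from collections import deque
from statistics import mean, stdev

# Hypothetical fixed alarm limit for rack inlet temperature, in degrees C.
STATIC_LIMIT_C = 27.0


def static_alarm(reading: float, limit: float = STATIC_LIMIT_C) -> bool:
    """Classic threshold alarm: fires only once an absolute limit is crossed."""
    return reading > limit


class RollingBaseline:
    """Learns 'normal' from a sliding window of recent readings and flags any
    reading more than z_limit standard deviations away from the window mean."""

    def __init__(self, window: int = 30, z_limit: float = 3.0) -> None:
        self.history = deque(maxlen=window)
        self.z_limit = z_limit

    def update(self, reading: float) -> bool:
        is_anomaly = False
        # A standard deviation needs at least two prior points.
        if len(self.history) >= 2:
            mu = mean(self.history)
            sigma = stdev(self.history)
            if sigma > 0 and abs(reading - mu) / sigma > self.z_limit:
                is_anomaly = True
        # In this sketch every reading, anomalous or not, joins the window.
        self.history.append(reading)
        return is_anomaly


# A stable sensor that suddenly jumps about 3.5 C: still under the static
# limit, but far outside its own recent behavior.
readings = [22.0, 22.1, 21.9, 22.0, 22.2, 21.8, 22.1, 22.0, 25.5]
baseline = RollingBaseline(window=10, z_limit=3.0)
flags = [baseline.update(r) for r in readings]
static_flags = [static_alarm(r) for r in readings]
```

Here the static alarm stays silent for the whole series, while the rolling baseline flags only the final jump, which is exactly the kind of early signal a fixed threshold misses.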

Cooling optimization is one of the most mature AI-assisted domains today. Machine learning models analyze historical and real-time data to recommend setpoint adjustments that reduce energy use without risking thermal excursions. However, in many facilities these systems still require human approval before changes are applied, reflecting a cautious operational culture.

Predictive capabilities are emerging but unevenly deployed. Some operators are experimenting with AI-driven failure prediction for UPS systems, chillers, and switchgear, using pattern recognition across maintenance logs and sensor data. Industry surveys show interest is high, but confidence in fully autonomous decision-making remains limited.

Hyperscalers represent the leading edge. Google has publicly documented the use of AI to optimize cooling efficiency across its data center fleet, demonstrating measurable reductions in energy consumption through continuous learning systems.

Taken together, the current landscape shows AI firmly embedded in visibility and optimization, while prediction and autonomy remain the next frontier rather than the norm.

What Infrastructure Innovations Are Powering the Shift Toward Prediction?

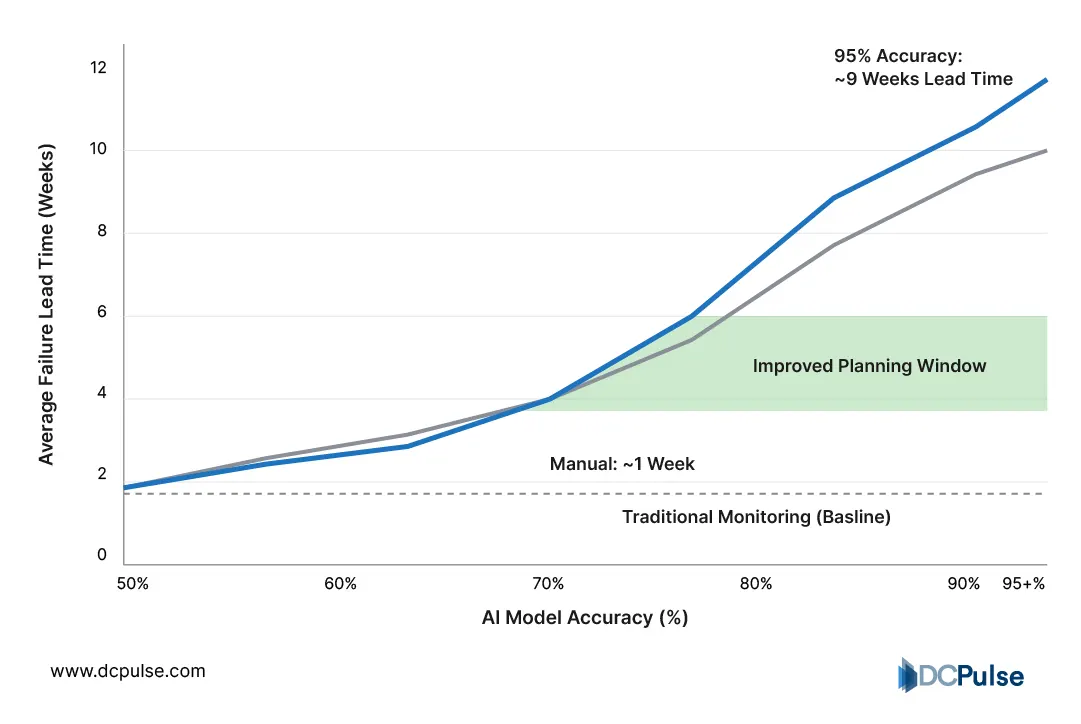

In data center operations, the shift from reactive monitoring to predictive insight is already underway, driven by innovations that let AI systems digest sprawling telemetry and forecast issues before they affect uptime. One of the most active areas is predictive maintenance, where machine learning analyzes historical performance alongside current sensor data, including temperature, vibration, and power use, to flag patterns that foreshadow failure. This gives operators a window to act days or even weeks before a disruption, reducing unplanned outages and extending asset life in complex facilities.
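The idea of a "window to act" can be illustrated with the simplest possible forecast: fit a straight line to a degrading metric and extrapolate when it will cross an alarm threshold. This is a sketch under a strong assumption (linear degradation); the vibration units, the 4.5 mm/s threshold, and the function names are hypothetical, and real predictive-maintenance models are typically nonlinear and probabilistic.

```python
from typing import Optional, Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept.
    Requires at least two distinct x values."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_threshold(
    days: Sequence[float], values: Sequence[float], threshold: float
) -> Optional[float]:
    """Extrapolate the recent trend and estimate how many days remain, counted
    from the last observation, before the metric reaches the threshold.
    Returns None when the trend is flat or improving."""
    slope, intercept = fit_line(days, values)
    if slope <= 0:
        return None
    crossing_day = (threshold - intercept) / slope
    return max(0.0, crossing_day - days[-1])


# Hypothetical daily vibration readings (mm/s RMS) from a pump bearing.
days = [0, 1, 2, 3, 4]
vibration = [2.0, 2.2, 2.4, 2.6, 2.8]
lead_time = days_until_threshold(days, vibration, threshold=4.5)
```

With these toy numbers the trend rises 0.2 mm/s per day and reaches 4.5 mm/s about 8.5 days after the last reading, which is the lead time an operator would have to schedule a repair.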

AI Model Accuracy vs. Failure Lead Time (2026 Estimates)

AI is also enhancing anomaly detection across multiple data streams. Rather than reacting to individual sensor thresholds, modern platforms use machine learning to learn normal behavior and surface true anomalies, such as sudden cooling inefficiencies or unusual power draw, with far fewer false positives. This shift not only alerts engineers earlier but also helps them prioritize the issues that actually matter.
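One way to picture "fewer false positives" across streams is to escalate an event only when several independent signals deviate together, then rank what remains. The sketch below assumes a robust modified z-score (median and MAD) per stream and a hypothetical rule that at least two streams must agree; the stream names, baselines, and the 3.5 cutoff are illustrative choices, not a description of any specific vendor's platform.

```python
from statistics import median
from typing import Dict, List, Tuple


def robust_z(value: float, baseline: List[float]) -> float:
    """Modified z-score using the median and median absolute deviation (MAD),
    which a few outliers in the learned baseline cannot drag around."""
    med = median(baseline)
    mad = median(abs(b - med) for b in baseline)
    if mad == 0:
        return 0.0 if value == med else float("inf")
    return 0.6745 * (value - med) / mad


def score_event(
    snapshot: Dict[str, float],
    baselines: Dict[str, List[float]],
    z_limit: float = 3.5,
) -> Tuple[int, float]:
    """Return (number of streams deviating, largest absolute score)."""
    scores = [abs(robust_z(v, baselines[name])) for name, v in snapshot.items()]
    deviating = sum(1 for s in scores if s > z_limit)
    return deviating, max(scores)


def prioritize(
    events: Dict[str, Dict[str, float]],
    baselines: Dict[str, List[float]],
    min_streams: int = 2,
) -> List[str]:
    """Escalate only events where several streams deviate together, ordered
    by how many streams agree and then by the strongest single deviation."""
    ranked = []
    for event_id, snapshot in events.items():
        count, peak = score_event(snapshot, baselines)
        if count >= min_streams:
            ranked.append((count, peak, event_id))
    ranked.sort(reverse=True)
    return [event_id for _, _, event_id in ranked]


# Hypothetical learned baselines and three candidate events.
baselines = {
    "inlet_temp_c": [22.0, 22.1, 21.9, 22.0, 22.2],
    "fan_rpm": [1200, 1210, 1190, 1205, 1195],
    "rack_power_kw": [8.0, 8.1, 7.9, 8.0, 8.05],
}
events = {
    "A": {"inlet_temp_c": 23.0, "fan_rpm": 1200, "rack_power_kw": 8.0},
    "B": {"inlet_temp_c": 23.0, "fan_rpm": 1300, "rack_power_kw": 8.0},
    "C": {"inlet_temp_c": 24.0, "fan_rpm": 1350, "rack_power_kw": 9.0},
}
queue = prioritize(events, baselines)
```

Event A, a lone temperature blip, is suppressed; B and C are escalated, with C first because all three streams moved at once.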

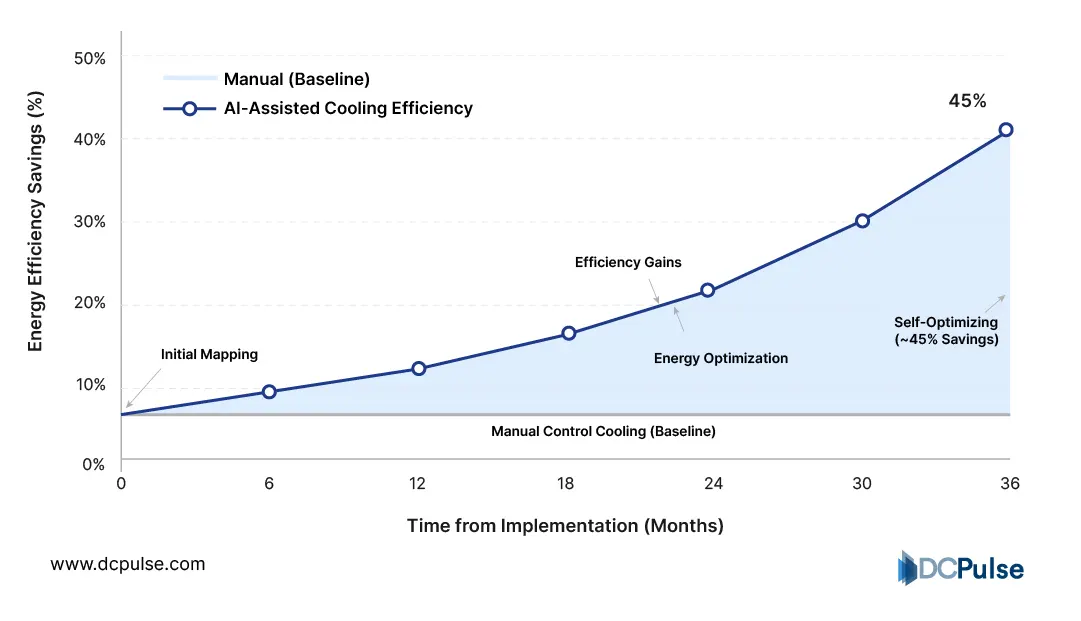

Another rising innovation is AI-assisted energy and cooling control. Instead of relying on operators to adjust setpoints manually, machine learning models continuously evaluate environmental and workload data to suggest or implement changes that reduce energy use without risking thermal excursions. With energy costs and emissions both under pressure, this capability has become a strategic priority.
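At its core, "optimize energy without risking thermal excursions" is a constrained search: among candidate setpoints, keep only those predicted to stay within a thermal limit, then pick the cheapest. The sketch below assumes two hypothetical toy models (`toy_cooling_kw`, `toy_inlet_c`) standing in for trained ones and an illustrative 27 C inlet limit. The `auto_apply` flag mirrors the "suggest or implement" distinction, including the human-approval step many facilities still require; the code only records that choice and does not push anything to a real control system.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Recommendation:
    setpoint_c: float
    predicted_kw: float
    predicted_inlet_c: float
    auto_applied: bool  # False means the change waits for operator approval


def recommend_setpoint(
    candidates: List[float],
    predict_kw: Callable[[float], float],
    predict_inlet_c: Callable[[float], float],
    inlet_limit_c: float,
    auto_apply: bool = False,
) -> Optional[Recommendation]:
    """Pick the candidate supply-air setpoint with the lowest predicted cooling
    power among those whose predicted rack inlet temperature stays within the
    limit. Returns None if no candidate is safe."""
    safe = [s for s in candidates if predict_inlet_c(s) <= inlet_limit_c]
    if not safe:
        return None
    best = min(safe, key=predict_kw)
    return Recommendation(best, predict_kw(best), predict_inlet_c(best), auto_apply)


# Hypothetical stand-ins for trained models: warmer supply air saves cooling
# energy, but rack inlets run about 3.5 C above the supply setpoint.
def toy_cooling_kw(setpoint_c: float) -> float:
    return 120.0 - 4.0 * (setpoint_c - 18.0)


def toy_inlet_c(setpoint_c: float) -> float:
    return setpoint_c + 3.5


candidates = [float(s) for s in range(18, 26)]
rec = recommend_setpoint(candidates, toy_cooling_kw, toy_inlet_c, inlet_limit_c=27.0)
```

With these toy models the search settles on a 23 C setpoint (predicted inlet 26.5 C, 100 kW), the warmest option that still respects the limit, and queues it for approval by default.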

Manual Control vs. AI-Assisted Cooling Efficiency

Collectively, these innovations signal an important transition. AI is no longer just helping engineers see what’s happening; it is giving them foresight and direction, turning raw telemetry into actionable predictions that reshape how data centers operate at scale.

Who’s Actually Implementing AI in Data Center Operations?

Across the data center ecosystem, operators and vendors aren’t just talking about AI; they’re building and deploying it in ways that reshape how infrastructure is managed, optimized, and scaled.

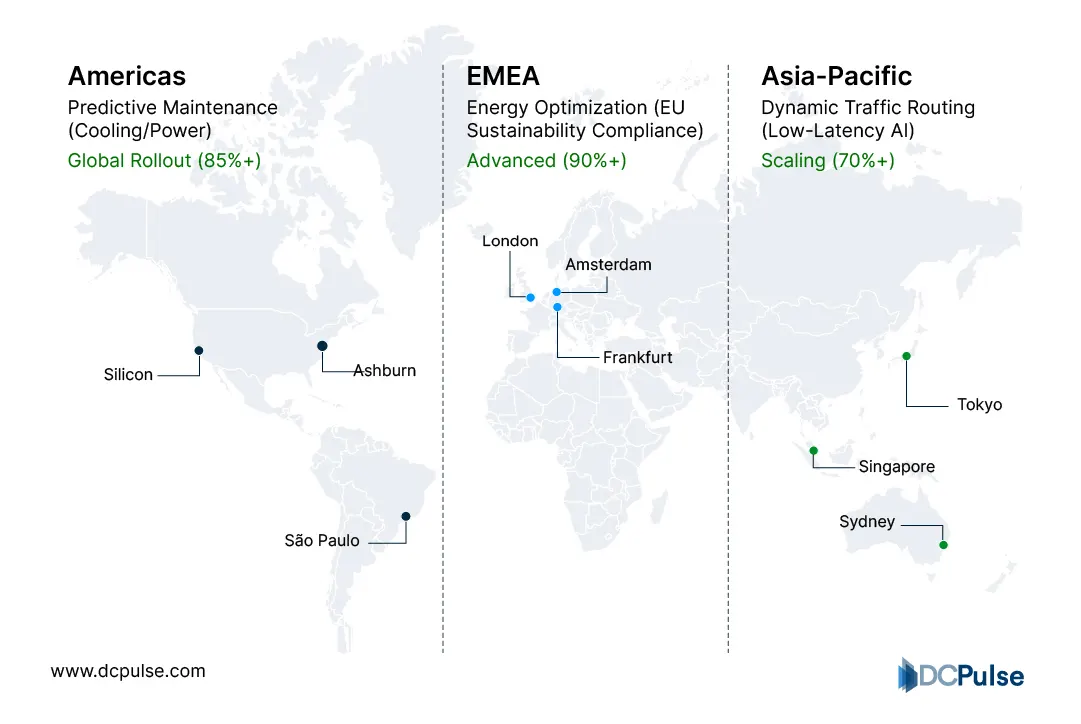

Equinix has been one of the most visible adopters of AI for predictive operations at scale. The company uses machine learning and digital twins (virtual representations of physical facilities) to monitor equipment health, anticipate failures, and optimize environmental conditions across its global IBX campuses.

By correlating sensor data with performance models, Equinix aims to reduce downtime and energy waste while steering its facilities toward 99.999% uptime reliability and greater operational efficiency.
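For a sense of how little room a 99.999% ("five nines") target leaves, the annual downtime budget is simply the minutes in a year multiplied by the unavailable fraction. This small helper is illustrative and assumes a 365-day year.

```python
# Minutes in a 365-day year (leap years ignored for simplicity).
MINUTES_PER_YEAR = 365 * 24 * 60


def allowed_downtime_minutes(availability: float) -> float:
    """Annual downtime budget implied by an availability target such as 0.99999."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return MINUTES_PER_YEAR * (1.0 - availability)


five_nines = allowed_downtime_minutes(0.99999)  # roughly 5.26 minutes per year
four_nines = allowed_downtime_minutes(0.9999)   # roughly 52.6 minutes per year
```

Five nines therefore allows only about five minutes of downtime per year, which is why anticipating failures matters more than reacting quickly to them.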

Equinix Global IBX Footprint & AI Operations (2026)

Google’s use of AI for cooling optimization remains a high-impact benchmark. Using DeepMind’s machine learning systems, Google’s data centers have learned how to adjust cooling parameters in real time by analyzing thousands of telemetry streams. In facilities such as its Singapore site, this has translated into measurable reductions in energy consumption and lower Power Usage Effectiveness, validating that AI-driven inference can yield quantifiable infrastructure benefits.
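Power Usage Effectiveness is defined as total facility energy divided by the energy delivered to IT equipment, so a value of 1.0 would mean no overhead at all. The helper below computes it; the monthly figures are hypothetical and are not Google's reported numbers. They only show how trimming cooling overhead lowers PUE when the IT load stays the same.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by the energy
    delivered to IT equipment. 1.0 would mean zero overhead."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be below IT energy")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical month: IT load is unchanged, but AI-tuned cooling trims 100 MWh
# of overhead from the facility total.
before = pue(total_facility_kwh=1_500_000, it_equipment_kwh=1_000_000)  # 1.5
after = pue(total_facility_kwh=1_400_000, it_equipment_kwh=1_000_000)   # 1.4
```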

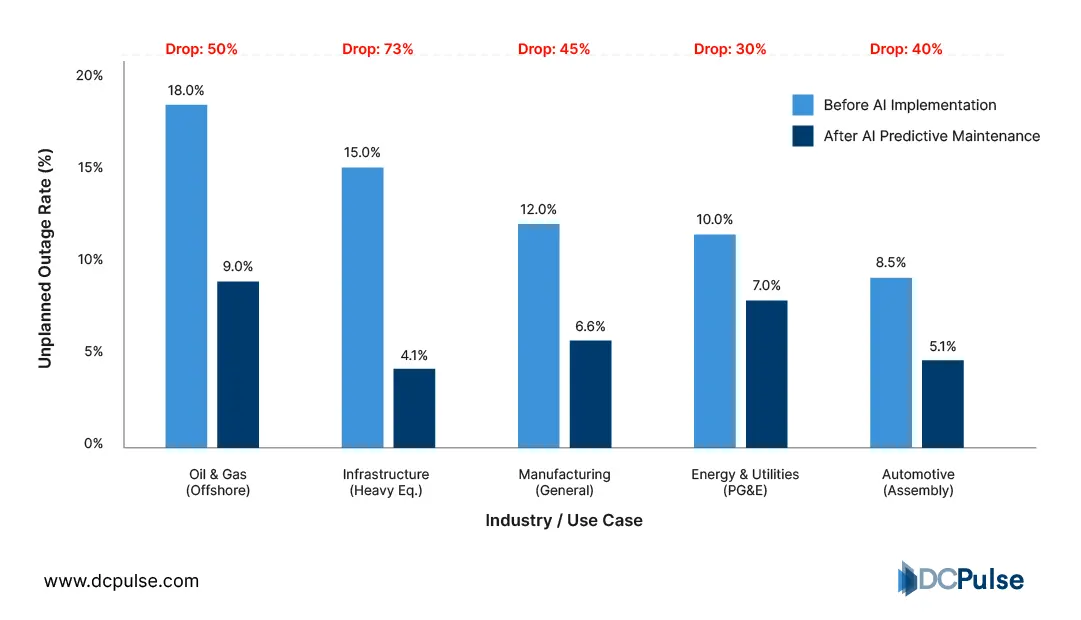

Meanwhile, predictive maintenance platforms are moving from prototype to production in hybrid and colocation environments. Industry analysis points to implementations where algorithms analyze patterns in vibration, electrical harmonics, and component wear to forecast failures weeks ahead, enabling proactive repairs and reducing unplanned outages. These systems often integrate with existing DCIM and AIOps stacks to give operators early warning rather than reactive alerting.
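As one example of what "electrical harmonics" can mean as a model input, total harmonic distortion (THD) is the standard summary: the root-sum-square of the harmonic amplitudes divided by the fundamental. The sketch assumes the harmonic amplitudes have already been extracted from a waveform (for instance by an FFT); the spectra and the UPS framing are hypothetical, and how any particular platform uses harmonics is not specified in the source.

```python
import math
from typing import Sequence


def total_harmonic_distortion(amplitudes: Sequence[float]) -> float:
    """THD as a fraction of the fundamental.

    amplitudes[0] is the RMS amplitude of the fundamental (e.g. 50 or 60 Hz);
    amplitudes[1:] are the RMS amplitudes of the 2nd, 3rd, ... harmonics.
    """
    if len(amplitudes) < 2:
        raise ValueError("need the fundamental plus at least one harmonic")
    fundamental, *harmonics = amplitudes
    if fundamental <= 0:
        raise ValueError("fundamental amplitude must be positive")
    return math.sqrt(sum(a * a for a in harmonics)) / fundamental


# Hypothetical current spectra (amps RMS) for one UPS input phase on two
# dates; a THD that doubles over time is the kind of drift a model could trend.
thd_january = total_harmonic_distortion([100.0, 0.0, 3.0, 0.0, 4.0])  # 5%
thd_june = total_harmonic_distortion([100.0, 0.0, 6.0, 0.0, 8.0])     # 10%
```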

Unplanned Outage Rates Before vs. After AI Implementation

On the vendor side, companies like Vertiv are explicitly investing in AI-driven operational platforms. With the acquisition of software firms focused on generative AI for power and cooling optimization, Vertiv’s strategy reflects a broader industry recognition that predictive and prescriptive automation, not just monitoring, will be critical to managing complex, high-density facilities.

These moves show a clear industry trajectory: AI is transitioning from analytic augmentation to real-world operational impact, where prediction, optimization, and automation are shaping how data centers maintain performance and reduce risk at scale.

From Reactive Operations to Predictive Advantage

AI’s role in data center operations is no longer about adding intelligence on top of existing systems; it is about reshaping how facilities are run end to end. As monitoring gives way to prediction, operators are moving from reacting to alarms toward anticipating stress points across power, cooling, and compute infrastructure. This shift is becoming especially critical as higher rack densities, AI workloads, and tighter uptime expectations compress the margin for error.

Strategically, the next phase will favor operators that treat AI as operational infrastructure rather than a software layer. Facilities that integrate AI models directly into DCIM, BMS, and energy management systems will be better positioned to optimize efficiency continuously rather than episodically. At the same time, governance, data quality, and model transparency will matter as much as algorithmic sophistication, particularly in regulated or mission-critical environments.

Over the next few years, competitive advantage will increasingly come from operational foresight: knowing when assets will fail, where energy waste is emerging, and how workloads should be rebalanced before performance degrades. In that context, AI becomes less a tool for visibility and more a foundation for resilient, self-optimizing data center operations.