The world of esports moves fast. In competitive games, a few milliseconds can separate victory from defeat. Yet while millions of fans watch players compete on screen, few realize how much technological infrastructure makes it possible. From sprawling server farms to high-performance arenas, esports relies on infrastructure engineered for speed, power, and scale.

Global esports revenue surpassed USD 1.38 billion in 2025, with audiences topping 532 million viewers worldwide. Behind every live-streamed tournament, every seamless match, and every instant reaction lies a network of edge servers, data centers, and cloud platforms working in close coordination. Lag spikes, power outages, and insufficient capacity aren’t just technical glitches; they can decide championships and influence player careers.

As competitive gaming grows, the demands on infrastructure are intensifying. Top-tier titles like League of Legends, Counter-Strike 2, and Valorant require ultra-low latency, reliable power, and the ability to support thousands of simultaneous players and viewers. These demands push the boundaries of traditional networking, server architecture, and energy management, creating a quiet but critical arms race in gaming technology.

To understand how esports achieves this level of performance, we first need to look at the current state of its infrastructure: what works today and where the challenges lie.

How Esports Infrastructure Handles Speed and Scale Today

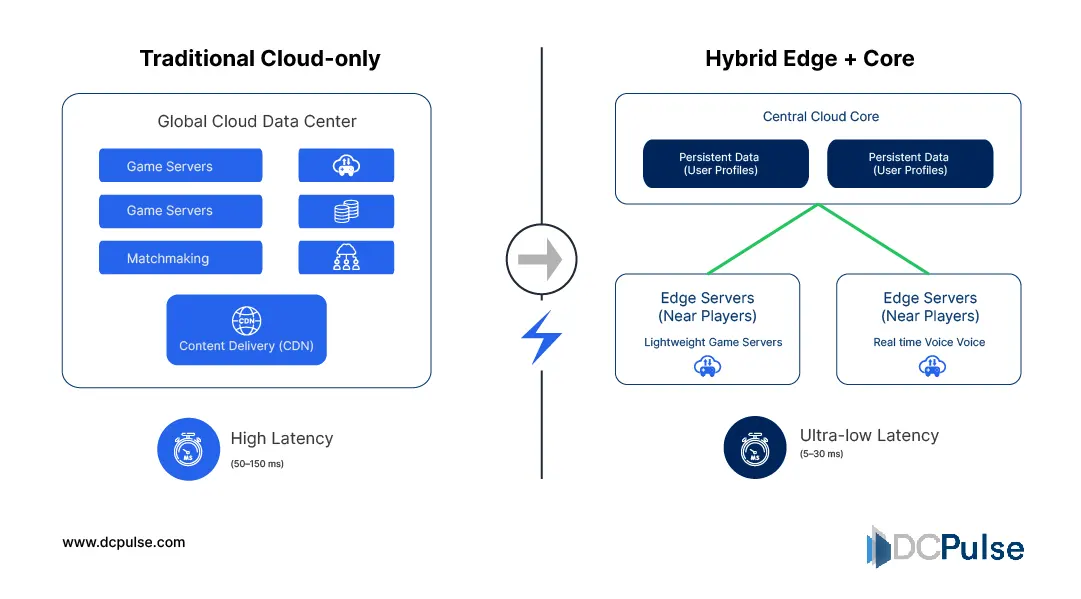

Esports isn’t just about players and pixels; it is about where and how game sessions are hosted, synchronized, and delivered across the globe. Most competitive gaming platforms operate on a mix of centralized cloud regions and distributed edge servers to balance scale with responsiveness.

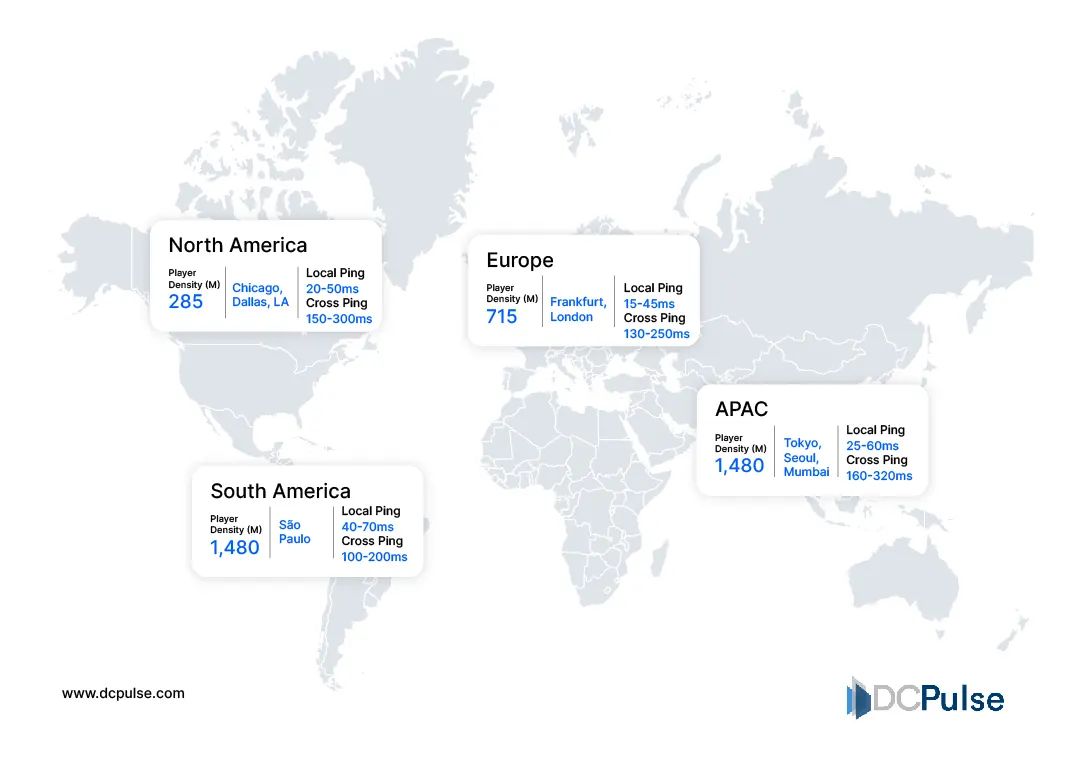

At the core of today’s esports infrastructure are regional game servers, often colocated in data centers near major population and player hubs. These servers handle critical tasks like match state, physics calculations, and synchronization for thousands of simultaneous users. Close proximity to players helps reduce round-trip latency, a decisive factor in competitive titles such as League of Legends and Valorant.
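To make the proximity argument concrete, the sketch below estimates the physical floor on round-trip time from distance alone. It assumes signals travel through fiber at roughly 200,000 km/s (about two-thirds of the speed of light in a vacuum) along a great-circle path. Real routes are longer and add queuing and processing delay, so measured pings will be higher. The function names are illustrative and not taken from any platform.

```python
import math

EARTH_RADIUS_KM = 6371.0
# Assumption: light in optical fiber covers roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (
        math.sin(dphi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    )
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_rtt_ms(distance_km):
    """Lower bound on round-trip time: there and back at fiber speed."""
    return 2 * distance_km / FIBER_KM_PER_MS
```

Under these assumptions, a player 1,000 km from the server cannot see less than about 10 ms of round-trip time, while a player 100 km away has a floor near 1 ms.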

Global Game Server Latency Map (January 2026)

Beyond regional servers, edge servers are increasingly used to cache and offload non-interactive but latency-sensitive tasks such as asset loading, patch distribution, and spectator video feeds. By handling these workloads at the network edge, platforms reduce congestion toward core data centers and maintain smooth gameplay.

Game Service Architecture Comparison (2026)

Multiplayer esports also requires game state to stay synchronized across servers with minimal divergence. Operators meet this challenge with specialized networking and packet routing that favor stable, predictable paths, even over long distances.

In this landscape, edge infrastructure acts as a performance amplifier, extending cloud capacity to meet the demands of global competitive gaming.

How Edge and Data Infrastructure Are Evolving for Esports Performance

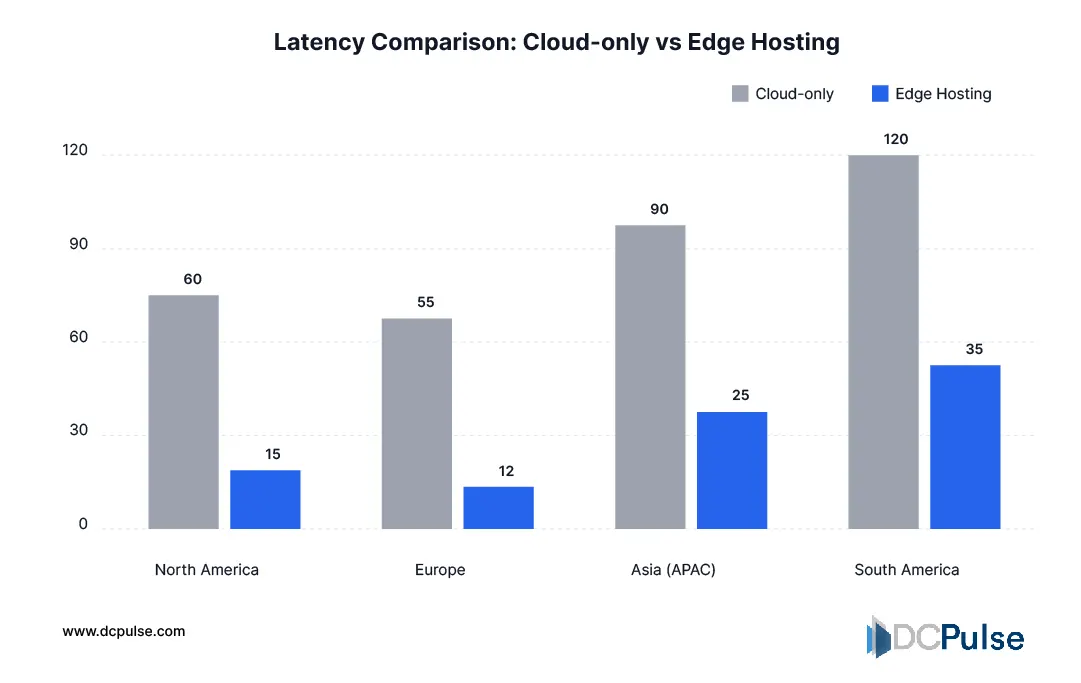

The demand for ultra-low latency, high concurrency, and seamless global play is pushing esports infrastructure beyond traditional models. One key innovation is the dynamic deployment of edge and regional game servers that host gameplay logic closer to participants. Instead of relying solely on distant centralized servers, operators can spin up edge nodes in real time in locations chosen according to where players are. This significantly reduces round-trip times and jitter, improvements that are critical in competitive games where milliseconds matter. Platforms that orchestrate servers worldwide achieve measurable latency reductions by placing workloads closer to users, shortening both the distance packets travel and the resulting ping times.
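One way to picture this placement decision is as a small selection problem: given measured round-trip times from each player to each candidate location, host the match where the slowest player is least disadvantaged. The sketch below is a minimal illustration under that assumption. The region codes and RTT values are made up, and real orchestrators weigh many more factors, such as capacity, cost, and routing stability.

```python
from statistics import mean


def pick_region(rtt_by_region):
    """Return the region whose slowest player has the lowest RTT.

    rtt_by_region maps a region name to the measured RTTs (ms) of the
    players in one match. Ties are broken by the lower average RTT.
    """
    if not rtt_by_region:
        raise ValueError("no candidate regions")
    return min(
        rtt_by_region,
        key=lambda region: (
            max(rtt_by_region[region]),
            mean(rtt_by_region[region]),
        ),
    )


# Hypothetical measurements for a three-player match.
sample = {
    "fra": [12, 35, 28],
    "ams": [18, 22, 25],
    "lon": [30, 15, 40],
}
chosen = pick_region(sample)  # "ams": its worst-case RTT (25 ms) is the lowest
```

Minimizing the worst-case RTT rather than the average reflects the fairness concern in competitive play: no single participant should be left with a much slower connection than the rest.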

Another emerging innovation is bare-metal and hybrid hosting at the edge. Instead of virtualized public cloud instances, providers deploy dedicated bare-metal edge servers that handle intense multiplayer logic, voice and chat processing, and regional matchmaking. This hardware-level performance yields more predictable responsiveness and better manages peak concurrency for live tournaments.

Optimized network routing and local caching at edge nodes further enhance reliability and interactive responsiveness. By processing critical interactions locally, such as movement updates, collision detection, and spectator state feeds, infrastructure designers can minimize lag and improve competitive fairness.

Latency Performance (Cloud-Only vs. Edge)

Together, these infrastructure innovations are enabling esports platforms to scale globally while keeping play fast, responsive, and consistent.

From Strategy to Footprint: What Large Esports Infrastructure Players Are Actually Building

As esports grows, infrastructure decisions are increasingly anchored in real deployment strategies rather than pilot experiments. Leading cloud and connectivity providers, gaming platforms, and infrastructure vendors are making concrete moves to expand edge deployments tailored for competitive gaming workloads.

One major trend is the push toward bare-metal edge infrastructure that supports latency-sensitive game servers and session persistence outside core cloud regions. Bare-metal servers at edge locations bring compute closer to players and can deliver sub-20 ms ping times and smoother gameplay, a critical requirement for real-time synchronization in esports. These deployments offload central infrastructure by handling localized tasks such as voice and chat processing and matchmaking logic.

Networking firms and edge platform providers, like Zenlayer, are expanding global edge networks that place edge compute and private backbone routes near major player hubs, reducing jitter and minimizing disconnections for high-concurrency events.
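Jitter, as used above, can be quantified in several ways. The sketch below uses one simple definition, the mean absolute change between consecutive round-trip samples. This is an illustrative simplification, not the smoothed interarrival estimator that RTP defines in RFC 3550, and the sample values are invented.

```python
def mean_jitter_ms(rtt_samples):
    """Average absolute change between consecutive RTT samples (ms)."""
    if len(rtt_samples) < 2:
        return 0.0
    deltas = [abs(b - a) for a, b in zip(rtt_samples, rtt_samples[1:])]
    return sum(deltas) / len(deltas)


steady = [20, 21, 20, 21]  # small swings around 20 ms
spiky = [20, 45, 18, 60]   # similar best case, far less predictable
```

Two connections can share a similar best-case ping and still feel very different in play. Here the `steady` series yields 1 ms of jitter while the `spiky` series yields more than 30 ms.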

Finally, game publishers and cloud providers are integrating edge-oriented orchestration and regional deployment tools that automatically spin up edge resources during major tournaments or peak traffic, improving uptime and responsiveness with minimal manual intervention.
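The core of such automatic scaling is a capacity calculation. The sketch below shows one hedged version of it: how many servers to run for a given number of concurrent players, with a percentage of spare headroom. The function name, the per-server capacity, and the 20% default are assumptions for illustration, not figures from any publisher or provider.

```python
def servers_needed(concurrent_players, players_per_server, headroom_pct=20):
    """Number of game servers to run, rounded up, with spare capacity.

    headroom_pct is extra capacity on top of current demand, in percent.
    Integer arithmetic avoids floating-point rounding at the boundary.
    """
    if players_per_server <= 0:
        raise ValueError("players_per_server must be positive")
    if concurrent_players < 0 or headroom_pct < 0:
        raise ValueError("inputs must be non-negative")
    demand = concurrent_players * (100 + headroom_pct)
    capacity = players_per_server * 100
    return -(-demand // capacity)  # ceiling division
```

For example, `servers_needed(10000, 64)` returns 188: 10,000 players plus 20% headroom is 12,000 slots, which needs 187.5 servers of 64 slots each, rounded up. An orchestrator would re-evaluate a figure like this as traffic rises and falls during a tournament.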

Together, these moves show that esports edge deployments are moving from proof-of-concept into strategic, production-grade infrastructure that supports both competitive play and global spectator experiences.

Where Esports Infrastructure Goes Next

As esports continues its shift from niche competition to global entertainment, infrastructure is becoming a defining competitive advantage rather than a background utility. The next phase will not be driven by bigger arenas or flashier broadcasts, but by how intelligently latency, resilience, and scalability are engineered into the stack.

Edge-heavy architectures will increasingly become the default, not just for tournaments but for ranked online play, enabling publishers to deliver consistent experiences across regions. Infrastructure planning will also tighten its alignment with game design itself, with studios optimizing engines, tick rates, and matchmaking systems around known network and compute constraints rather than theoretical best cases.

Power reliability and sustainability will move higher up the agenda. As esports venues and regional hubs draw hyperscale-level loads, operators will need to balance redundancy with efficiency, investing in smarter power distribution, localized backup strategies, and cleaner energy sourcing to meet both uptime and environmental expectations.

Strategically, the line between cloud providers, telecom operators, and game publishers will continue to blur. Partnerships will deepen, as no single player can optimize the full chain alone. For esports, staying fast and reliable is no longer just a technical requirement; it’s central to competitive integrity, audience trust, and the long-term credibility of the industry itself.