Cloud expansion used to be easy to visualize. More demand meant bigger regions, larger campuses, and more servers concentrated in fewer places. Scale was physical, centralized, and largely predictable.

That model is starting to strain, not because cloud demand is slowing, but because where that demand is coming from has changed. Users, applications, and data are spreading outward, closer to population centers, industrial zones, and network edges. Latency expectations are tighter. Data volumes are heavier. Network paths are more congested. And not every workload makes sense when it has to travel hundreds or thousands of kilometers to a core cloud region.

This is where edge infrastructure quietly enters the picture, not as a rival to hyperscale cloud and not as a futuristic concept, but as a practical response to expansion limits that centralization alone can no longer solve.

The edge is not about replacing the cloud. It is about extending it physically, geographically, and operationally so cloud platforms can continue to grow without breaking the performance, cost, and reliability assumptions they were built on.

That shift is already underway, and it is reshaping how cloud expansion is planned.

Where Centralized Cloud Starts to Stretch

Cloud expansion today is less constrained by server availability than by distance. Hyperscale regions continue to grow, but their physical separation from users, enterprises, and data sources introduces latency and network variability that modern applications increasingly struggle to tolerate. This is especially visible as cloud adoption deepens outside Tier-1 metros, where last-mile performance remains inconsistent despite strong backbone connectivity.

Cloud providers themselves acknowledge this shift. AWS, for example, positions its edge locations explicitly as extensions of its regional infrastructure, designed to bring services closer to end users while maintaining centralized control planes and consistency across regions.

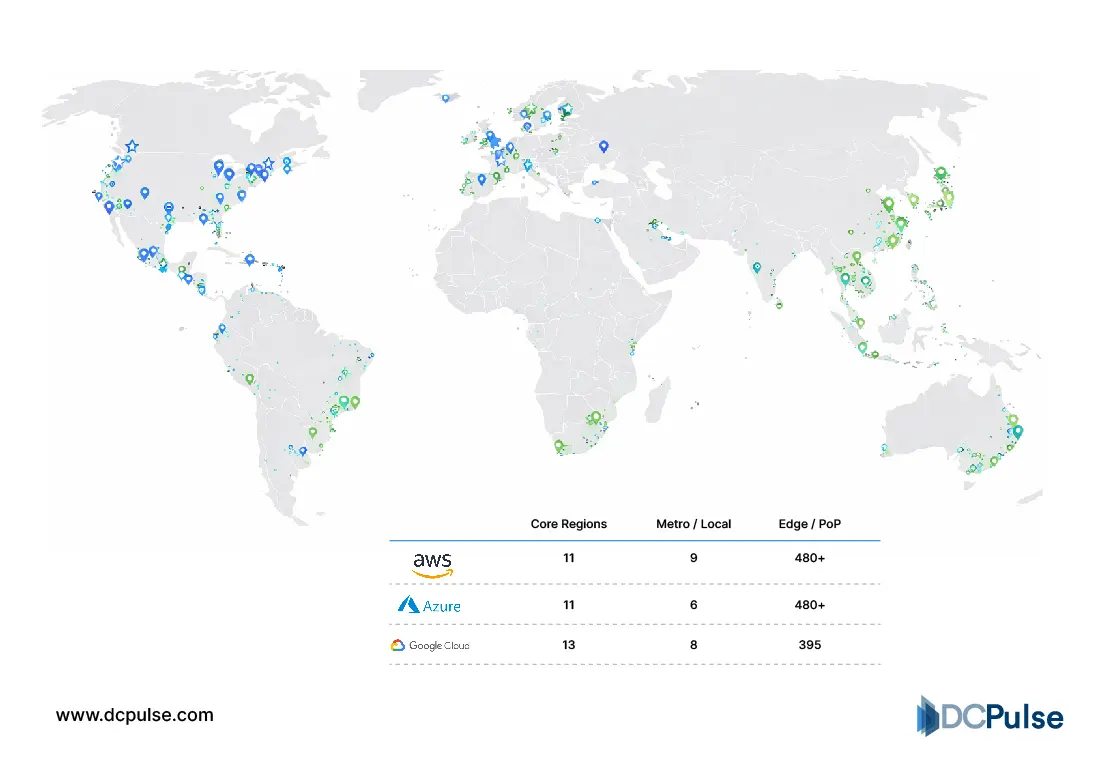

Global Cloud Infrastructure (2025)

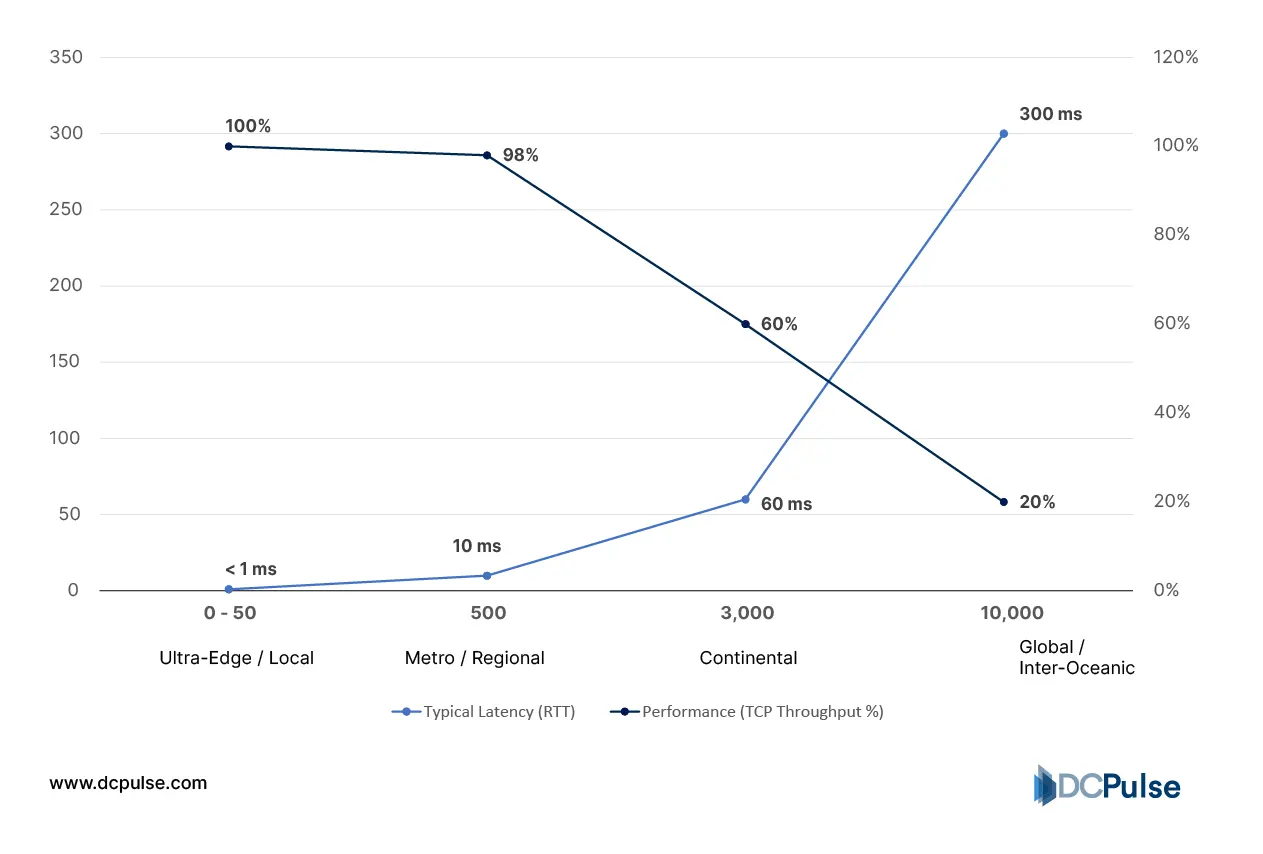

Latency is not just a user-experience issue; it becomes an architectural constraint. As Google Cloud notes in its edge and network design discussions, application responsiveness degrades sharply as physical distance increases, even on well-optimized networks.

Network Performance Decay: Latency vs. Distance
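To make the distance effect concrete, the sketch below estimates the lowest possible round-trip time between a user and a cloud site from propagation delay alone. It assumes light travels through optical fiber at roughly 200,000 km/s (about two-thirds of its speed in a vacuum). The function name and the sample distances are illustrative, not taken from any provider's tooling, and real-world latency will be higher once routing detours, queuing, and processing are added.

```python
# Propagation-only lower bound on round-trip time (RTT).
# Assumption: signal speed in optical fiber is about 200,000 km/s.
# Real paths are longer than the straight-line distance and add
# queuing, routing, and processing delay on top of this floor.

FIBER_SPEED_KM_PER_S = 200_000  # approximate, assumed value


def min_round_trip_ms(distance_km: float) -> float:
    """Return the propagation-only round-trip time in milliseconds."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    one_way_seconds = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_seconds * 1000


if __name__ == "__main__":
    # Hypothetical distances: a metro edge site, a nearby region, a distant region.
    for km in (50, 500, 2000):
        print(f"{km:>5} km -> at least {min_round_trip_ms(km):.1f} ms round trip")
```

Under this assumption, every 100 km of fiber adds about 1 ms of round-trip time that no amount of network optimization can remove, which is why a site tens of kilometers away behaves very differently from a region thousands of kilometers away.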

Data gravity compounds the problem. Large datasets tend to attract applications and services to where data is generated, making constant back-and-forth movement to distant regions inefficient. The concept, first articulated by Dave McCrory, remains relevant as data volumes grow at the network edge.

In this landscape, edge infrastructure emerges not as an alternative to centralized cloud but as a pressure-release layer, absorbing proximity-sensitive workloads so core regions can continue to scale efficiently.

New Design Patterns Shaping Edge Infrastructure for Cloud Growth

Edge infrastructure is no longer just improvised hardware at the network perimeter. Its design has matured into standardized, cloud-aligned systems that extend core cloud capabilities without fragmenting management or operations.

Cloud providers are building platforms that let edge deployments behave like extensions of central regions. Solutions such as AWS Outposts, Azure Stack Edge, and Google Distributed Cloud bring native cloud control, policy, and updates to distributed sites while retaining central orchestration and security consistency.

These frameworks reduce operational friction and make edge resources easier to integrate with core services and tooling.

On the infrastructure side, modular and prefabricated data center systems are gaining traction because they shorten deployment timelines and standardize power and cooling stacks across sites. Schneider Electric’s EcoStruxure Modular Data Center portfolio delivers pre-engineered, quick-to-deploy compute modules that combine power, cooling, and racks into factory-tested units for rapid edge buildouts.

This approach helps cloud operators add capacity near users without custom construction at every location.

Vertiv’s prefabricated and modular solutions, including its growth-oriented platforms, similarly compress timelines and simplify edge buildout logistics while supporting scalable compute and thermal management capabilities at distributed sites. These designs reflect a broader trend toward repeatable, factory-configured infrastructure that cloud providers can commission steadily across markets.

These innovations don’t reinvent cloud architecture. They extend core cloud characteristics (standardization, centralized control, and rapid deployability) outward, making edge infrastructure a reliable and scalable layer in cloud expansion strategies.

How Cloud Providers Are Extending Their Footprint to the Edge

Edge infrastructure is no longer experimental inside cloud strategies; it is being rolled out through deliberate, location-specific deployments by hyperscalers and their partners. Rather than building entirely new regional data centers, cloud providers are extending services into metro areas, carrier facilities, and enterprise-adjacent sites.

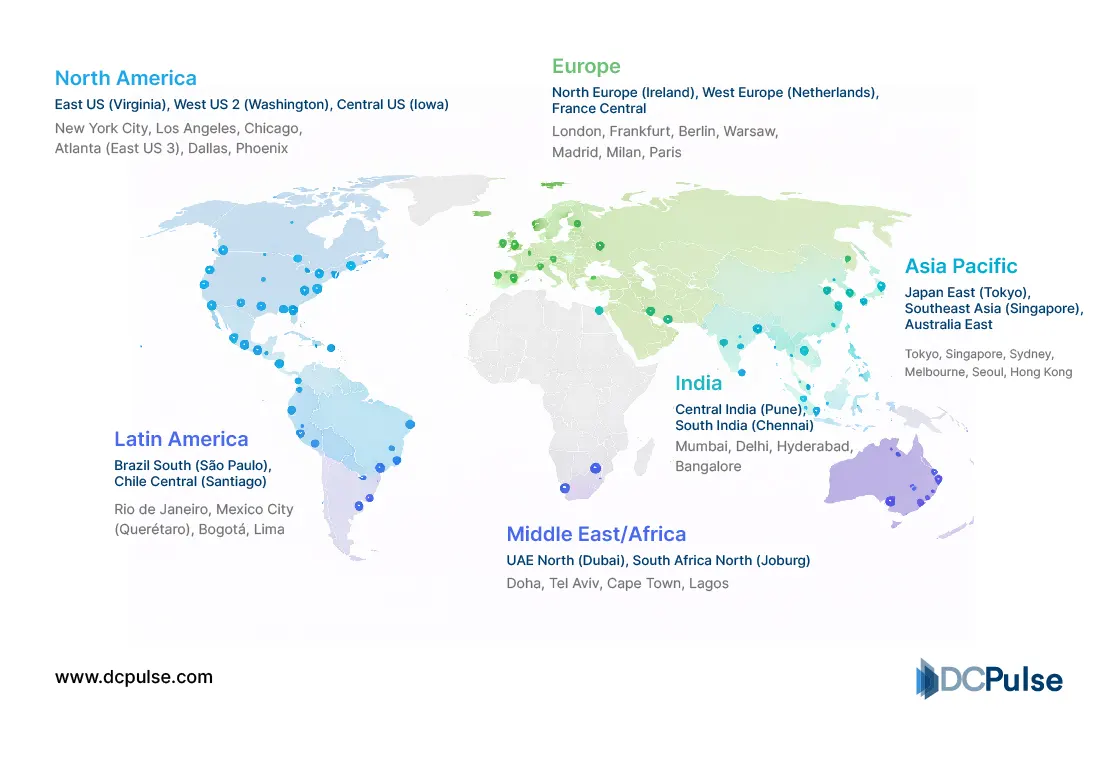

AWS has taken a layered approach. Alongside its core regions, the company continues to expand AWS Local Zones, which place select compute, storage, and networking services in major metropolitan areas to support latency-sensitive applications such as media processing and enterprise workloads.

AWS Global Infrastructure – 2025


Microsoft has followed a similar path, positioning Azure Edge Zones as a way to push cloud services closer to users while keeping orchestration tied to Azure’s central platform. These deployments are often aligned with dense population centers and network aggregation points, which reinforces the edge’s role as an extension of cloud reach rather than standalone compute. The pattern mirrors AWS, which extends its core regions through Local Zones and edge locations to improve proximity and performance while keeping control centralized.

Microsoft Azure Global Infrastructure - 2025

Colocation providers are also becoming critical enablers. Companies such as Equinix position their metro data centers as neutral interconnection hubs where cloud on-ramps, enterprises, and networks converge, effectively functioning as edge extensions of hyperscale clouds.

Together, these moves show a consistent pattern: edge infrastructure is being deployed where it directly supports cloud service expansion, not as a parallel computing layer.

What Edge Means for the Next Phase of Cloud Growth

Edge infrastructure is settling into a clear, pragmatic role inside cloud expansion strategies. It is not becoming a parallel cloud, nor is it replacing large regional data centers. Instead, it is functioning as a capacity and proximity layer, absorbing latency-sensitive, bandwidth-heavy, or location-specific workloads so core regions can continue to scale efficiently.

For cloud providers, the implication is architectural discipline. Edge deployments work best when they inherit the same operational models, security controls, and lifecycle management as central regions. Fragmented edge environments add cost and complexity; integrated ones extend reach without diluting control. Hyperscalers reinforce this point by continuing to anchor edge services to central platforms such as AWS regions and Azure core infrastructure.

For enterprises and operators, the takeaway is equally grounded. Edge infrastructure should be evaluated not as a technology bet, but as a placement decision, used where proximity measurably improves performance, resilience, or cost.
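One way to picture edge as a placement decision is a simple rule: among the sites that can meet a workload's latency budget, pick the cheapest. The sketch below is a deliberately minimal illustration of that idea, not a method used by any provider. The `Site` type, the `choose_site` function, the site names, and the cost figures are all hypothetical, and latency is approximated by propagation delay alone, assuming a fiber signal speed of about 200,000 km/s.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

FIBER_SPEED_KM_PER_S = 200_000  # approximate, assumed value


@dataclass(frozen=True)
class Site:
    """Hypothetical candidate location for a workload."""
    name: str
    distance_km: float   # fiber distance from the users or data source
    monthly_cost: float  # illustrative cost in arbitrary units


def estimated_rtt_ms(site: Site) -> float:
    """Propagation-only round-trip estimate; real latency will be higher."""
    return 2 * site.distance_km / FIBER_SPEED_KM_PER_S * 1000


def choose_site(sites: Sequence[Site], latency_budget_ms: float) -> Optional[Site]:
    """Return the cheapest site that fits the latency budget, or None."""
    eligible = [s for s in sites if estimated_rtt_ms(s) <= latency_budget_ms]
    if not eligible:
        return None
    return min(eligible, key=lambda s: s.monthly_cost)


if __name__ == "__main__":
    candidates = [
        Site("core-region", distance_km=1500, monthly_cost=100),
        Site("metro-edge", distance_km=40, monthly_cost=180),
    ]
    for budget in (20, 5):
        chosen = choose_site(candidates, budget)
        print(budget, "ms budget ->", chosen.name if chosen else "no site fits")
```

With these made-up numbers, a relaxed budget keeps the workload in the cheaper core region, and only a tight budget justifies the edge site. A real evaluation would also weigh resilience, data transfer costs, and measured rather than estimated latency.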

In that sense, edge is less about disruption and more about making cloud expansion sustainable at scale.