Streaming looks effortless on the surface. A video starts instantly, a live event scales to millions of viewers, and quality adjusts without a second thought. Behind that simplicity, however, sits one of the most demanding infrastructure workloads data centers have ever had to support.

Unlike traditional enterprise applications, streaming workloads are volatile by design. Demand spikes without warning, traffic patterns shift by the minute, and performance expectations leave little room for delay. A few seconds of buffering or a sudden drop in resolution is enough to lose viewers and, for platforms, revenue. This pressure has forced infrastructure planners to rethink long-held assumptions about capacity planning, network design, and compute placement.

Streaming workloads also blur the boundaries between compute, storage, and networking. Content must be cached close to users, encoded in multiple formats simultaneously, and delivered across highly distributed networks with consistent quality. Centralized architectures struggle under this load, pushing operators toward edge-heavy designs and highly automated scaling models.

To understand how streaming is reshaping infrastructure planning, it’s essential to look first at the current landscape and how data centers and networks are built today to keep streams fast, stable, and always on.

How Streaming Workloads Are Shaping Today’s Infrastructure

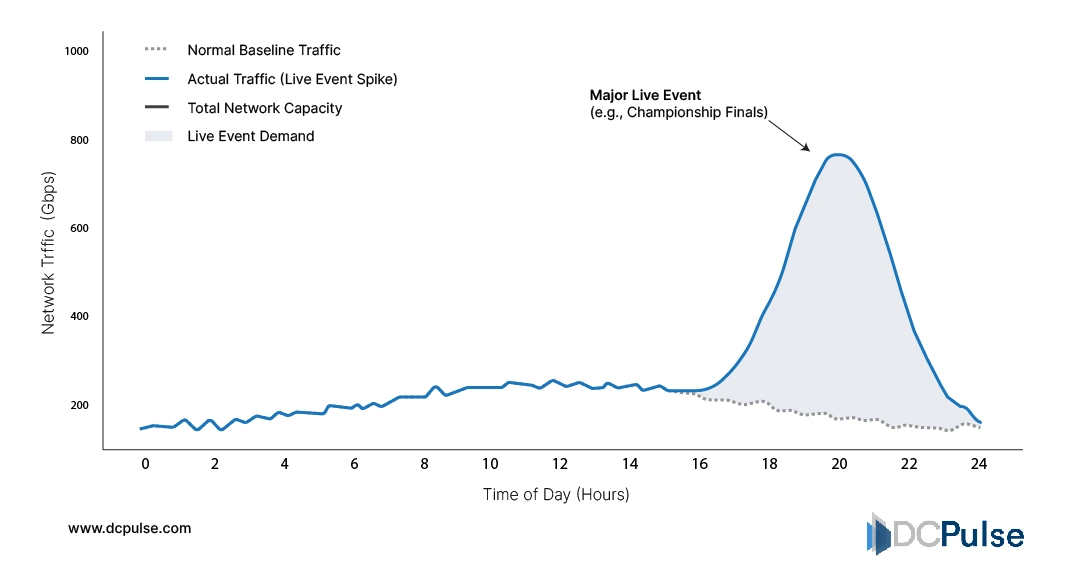

Today’s streaming infrastructure is built around one core reality: demand is unpredictable, but performance expectations are absolute. Whether it’s video-on-demand, live sports, or real-time gaming streams, workloads can surge from steady baselines to massive peaks in seconds. Traditional capacity planning models, built for stable, forecastable enterprise traffic, struggle under this behavior.

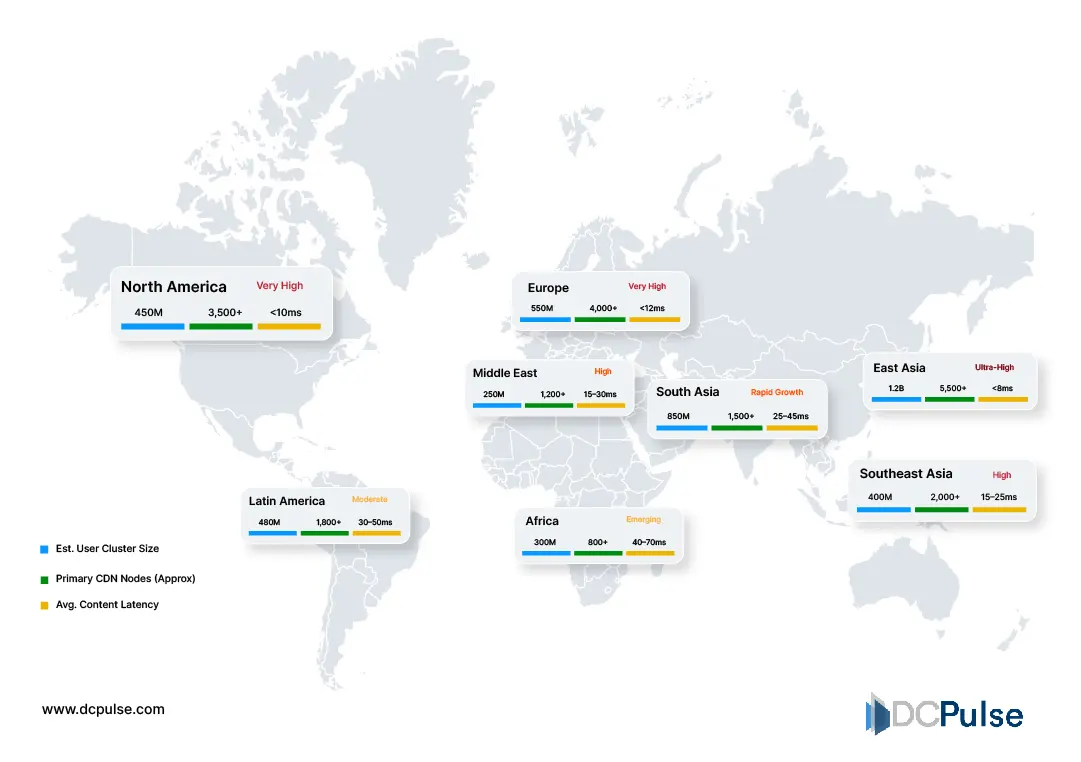

To cope, infrastructure planners rely heavily on distributed architectures. Content delivery networks (CDNs) cache video and audio assets closer to end users, reducing backbone congestion and minimizing latency. According to Akamai, more than 80% of global internet traffic now touches a CDN at some point, underscoring how central distributed delivery has become to streaming performance.
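The backbone relief a CDN provides comes down to simple arithmetic: only cache misses travel back to the origin. The sketch below illustrates that relationship under stated assumptions. The function name `origin_egress_gbps`, the demand figure, and the hit ratios are hypothetical and illustrative, not Akamai data or any provider's API.

```python
def origin_egress_gbps(edge_demand_gbps: float, cache_hit_ratio: float) -> float:
    """Hypothetical helper: traffic still served from origin after edge caching.

    Assumes every request is either an edge cache hit (served locally) or a
    miss (fetched from origin over the backbone).
    """
    if not 0.0 <= cache_hit_ratio <= 1.0:
        raise ValueError("cache_hit_ratio must be between 0 and 1")
    # Only the miss fraction of demand reaches the origin.
    return edge_demand_gbps * (1.0 - cache_hit_ratio)


# Illustrative peak demand at the edge, in Gbps (assumed, not measured).
PEAK_EDGE_DEMAND_GBPS = 10_000.0

for hit_ratio in (0.0, 0.75, 0.95):
    egress = origin_egress_gbps(PEAK_EDGE_DEMAND_GBPS, hit_ratio)
    print(f"hit ratio {hit_ratio:.0%}: {egress:,.0f} Gbps from origin")
```

Under these assumptions, raising the hit ratio from 75% to 95% cuts origin egress fivefold, which is why operators push popular content as close to viewers as possible.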

Inside data centers, streaming workloads drive high east-west traffic, intensive GPU usage for encoding and transcoding, and large-scale object storage for content libraries. Netflix has documented how its Open Connect infrastructure offloads traffic from centralized data centers by pushing content to edge locations worldwide, enabling consistent quality during peak viewing hours.

Global CDN & Edge Cache Distribution Clusters (2026)

Network design has also evolved. Streaming platforms prioritize high-throughput, low-loss networks over ultra-low latency, optimizing for sustained data flow rather than transactional speed (Cisco streaming traffic analysis).

Network Traffic Comparison (Baseline vs. Live Event Spike)

This landscape reflects a shift toward infrastructure that is elastic, distributed, and designed for constant fluctuation. It also sets the stage for the efficiency-focused changes now reshaping the stack.

How Streaming Infrastructure Is Being Re-Engineered

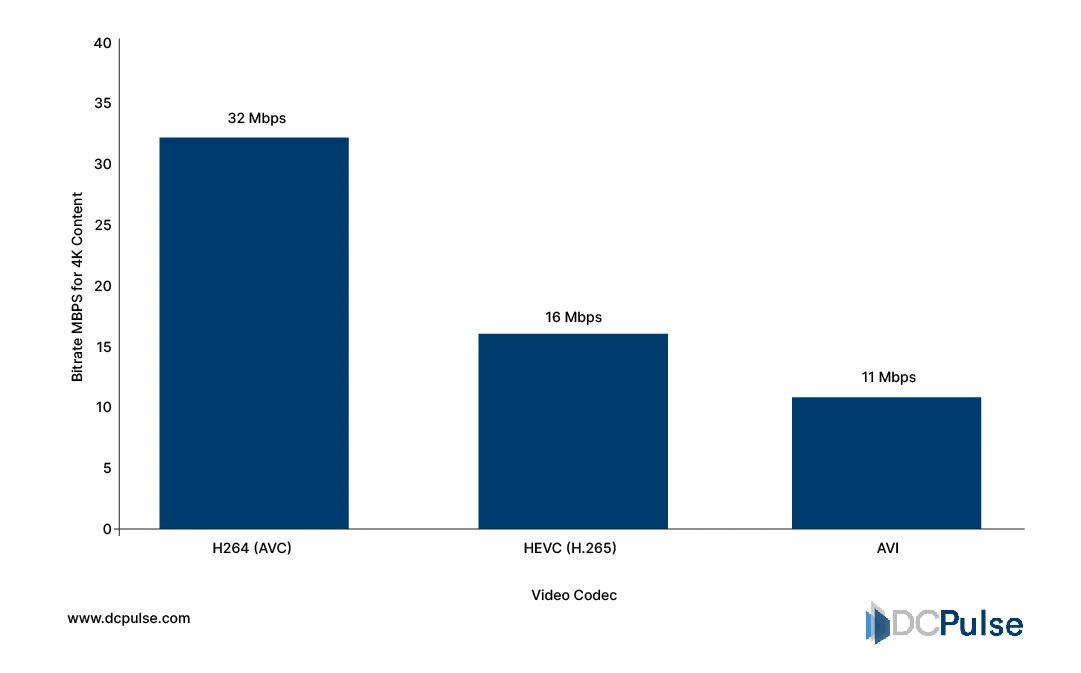

As streaming volumes grow and viewing patterns become more erratic, innovation is increasingly focused on efficiency at scale, not just raw capacity. One major shift is happening at the codec layer. Platforms are moving toward next-generation video codecs like AV1, which deliver the same visual quality at significantly lower bitrates.

Netflix has publicly detailed its deployment of AV1 for streaming, noting material bandwidth savings that directly reduce network strain during peak demand. The Alliance for Open Media positions AV1 as a long-term standard for scalable streaming delivery.
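To see why lower bitrates at equal quality matter at peak, it helps to multiply them out across a concurrent audience. The sketch below does that arithmetic. The viewer count and both bitrates are hypothetical placeholders chosen for round numbers; they are not Netflix or Alliance for Open Media figures, and the function names are illustrative only.

```python
def aggregate_gbps(concurrent_viewers: int, bitrate_mbps: float) -> float:
    """Total delivery bandwidth for a concurrent audience (1 Gbps = 1,000 Mbps)."""
    return concurrent_viewers * bitrate_mbps / 1_000.0


def bandwidth_saved_gbps(concurrent_viewers: int,
                         baseline_mbps: float,
                         new_codec_mbps: float) -> float:
    """Hypothetical helper: peak bandwidth freed by switching codecs at equal quality."""
    return (aggregate_gbps(concurrent_viewers, baseline_mbps)
            - aggregate_gbps(concurrent_viewers, new_codec_mbps))


# Assumed values for illustration only.
VIEWERS = 1_000_000
BASELINE_MBPS = 8.0  # hypothetical bitrate with an older codec
AV1_MBPS = 6.0       # hypothetical bitrate with AV1 at similar quality

saved = bandwidth_saved_gbps(VIEWERS, BASELINE_MBPS, AV1_MBPS)
print(f"{saved:,.0f} Gbps freed at peak")
```

Because the saving scales linearly with concurrency, even a modest per-stream reduction translates into thousands of Gbps of headroom during a large live event.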

Video Codec Bitrate Efficiency Comparison: H.264, HEVC, and AV1 (2026)

Innovation is also moving deeper into the network. Edge compute is no longer limited to caching; it is increasingly used for real-time packaging, ad insertion, and stream personalization closer to viewers. Akamai highlights how edge processing reduces round-trip latency and stabilizes performance during live events by minimizing requests back to the origin.

Inside data centers, streaming workloads are driving specialized hardware adoption, particularly GPUs and dedicated video accelerators for encoding and transcoding. NVIDIA notes that hardware-accelerated video processing allows operators to scale streams more efficiently without linear increases in power and rack footprint.

Together, these innovations are redefining infrastructure planning around elasticity, efficiency, and sustained throughput rather than static peak provisioning.

How Streaming Giants Are Rewiring Infrastructure at Scale

Major streaming platforms and infrastructure providers aren’t just theorizing about edge and scale; they’re executing. Their moves are redefining how data centers and networks are planned to support massive, unpredictable workloads.

Akamai’s SOTI (State of the Internet/Security) reports have consistently shown that streaming constitutes a plurality of global internet traffic and that distributed delivery and edge caching are essential to absorb that traffic while maintaining performance. Akamai’s infrastructure spans thousands of edge nodes that cache and serve video close to users, reducing backbone load and improving QoE (quality of experience).

Netflix’s Open Connect initiative is another concrete move. By placing dedicated cache servers inside ISP networks worldwide and strategically locating its content close to viewers, Netflix offloads traffic from centralized core facilities and reduces congestion during peak hours, an infrastructure footprint decision rooted in streaming traffic patterns.

Cloud providers themselves are embedding streaming-centric services into regional infrastructure expansion. AWS’s CloudFront and Media Services are designed for high-throughput video delivery with global edge points of presence, enabling streaming platforms to scale delivery without building proprietary networks.

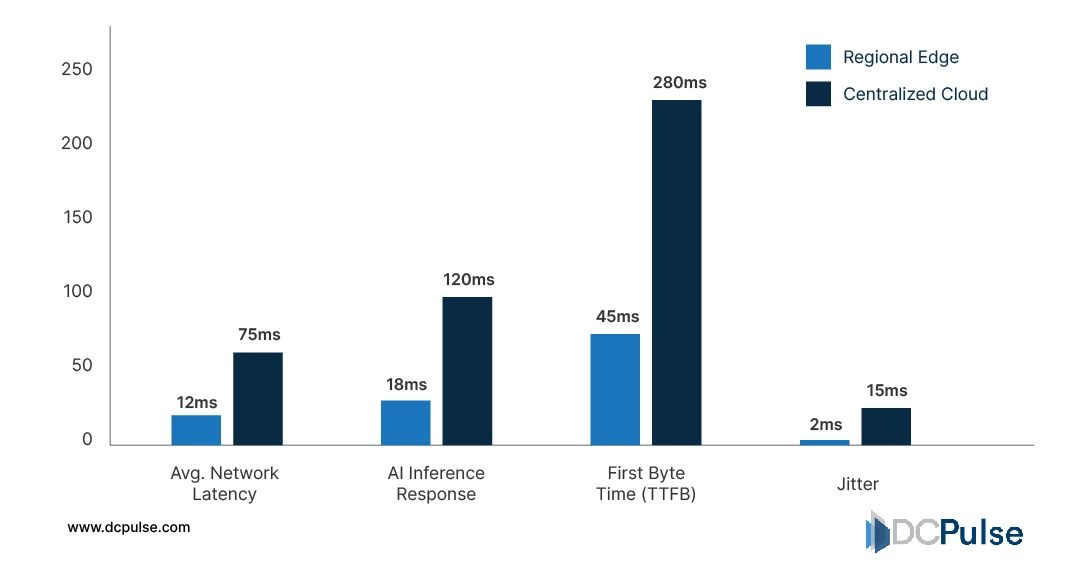

Data Delivery Performance Comparison (2026 Benchmarks)

These industry moves show that infrastructure planning for streaming workloads is no longer reactive. It is a strategic blueprint that informs where to build, cache, and compute in order to deliver reliable, high-quality streams at scale.

How Streaming Workloads Are Redefining Infrastructure Planning

Streaming workloads have permanently shifted how infrastructure is planned, moving operators away from steady-state capacity models toward architectures built for volatility. Unlike enterprise or transactional systems, streaming traffic is defined by sudden surges (live sports finals, global premieres, breaking news) during which demand can spike by multiples within minutes.

As a result, infrastructure strategies now prioritize elastic scaling, geographic proximity, and intelligent traffic steering over raw centralized capacity.

Cloud and edge providers are increasingly designing for peak concurrency, not average utilization. This means deeper integration between content delivery networks, regional edge compute, and core cloud platforms to absorb spikes without latency degradation or service failures. Power and network planning are also evolving, with operators provisioning redundant paths and burstable capacity rather than static headroom.
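The gap between sizing for average utilization and sizing for peak concurrency is easy to show numerically. The following is a minimal sketch under stated assumptions: the concurrency samples, bitrate, and 25% headroom factor are hypothetical, and `capacity_gbps` and `shortfall_gbps` are illustrative helpers, not any provider's planning tool.

```python
from statistics import mean


def capacity_gbps(concurrent_viewers: float, bitrate_mbps: float, headroom: float) -> float:
    """Capacity to provision for a given concurrency plus a burst headroom fraction."""
    return concurrent_viewers * bitrate_mbps / 1_000.0 * (1.0 + headroom)


def shortfall_gbps(samples: list[int], bitrate_mbps: float, capacity: float) -> float:
    """How far peak demand in the samples exceeds provisioned capacity (0 if covered)."""
    peak_demand = max(samples) * bitrate_mbps / 1_000.0
    return max(0.0, peak_demand - capacity)


# Hypothetical concurrency samples over an evening with one live-event spike.
concurrency = [200_000, 220_000, 250_000, 1_200_000, 300_000, 230_000]
BITRATE_MBPS = 5.0  # assumed average stream bitrate
HEADROOM = 0.25     # assumed burst headroom

avg_sized = capacity_gbps(mean(concurrency), BITRATE_MBPS, HEADROOM)
peak_sized = capacity_gbps(max(concurrency), BITRATE_MBPS, HEADROOM)

print(f"average-sized: {avg_sized:,.0f} Gbps, "
      f"shortfall at spike {shortfall_gbps(concurrency, BITRATE_MBPS, avg_sized):,.0f} Gbps")
print(f"peak-sized: {peak_sized:,.0f} Gbps, "
      f"shortfall at spike {shortfall_gbps(concurrency, BITRATE_MBPS, peak_sized):,.0f} Gbps")
```

In this toy scenario, capacity sized from the average (even with headroom) falls well short during the spike, while peak-sized capacity covers it but sits mostly idle the rest of the time. That trade-off is what burstable capacity and elastic scaling aim to resolve.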

Looking ahead, infrastructure planning for streaming will hinge on predictive analytics, workload-aware orchestration, and tighter coupling between content platforms and infrastructure providers. Operators that fail to design for variability risk not just performance issues but audience churn, in an environment where reliability is inseparable from user experience.