AI regulation is often discussed as a legal or ethical problem. In practice, it is becoming something far more concrete. Every new rule about model accountability, data sovereignty, or algorithmic risk eventually lands not in code, but in infrastructure. In the power contracts that get approved. In the locations where data centers are allowed to scale. In the way networks are segmented, audited, and monitored.

Across regions, governments are beginning to treat AI not just as software, but as a strategic capability tied to physical capacity. Compute is no longer neutral. Where it sits, how it is powered, and who controls it are increasingly shaped by regulation written far away from server halls, yet enforced through them.

This is the quiet shift now underway. AI laws are being drafted to govern models and data, but their real-world impact is redefining infrastructure policy itself. And for data center operators, utilities, and planners, this regulatory gravity is becoming impossible to ignore.

Where Policy Starts Touching the Ground

AI regulation today is not a single global rulebook. It is a patchwork of regional frameworks, each written with different priorities (safety, sovereignty, competitiveness), but all of them quietly influencing where and how infrastructure gets built.

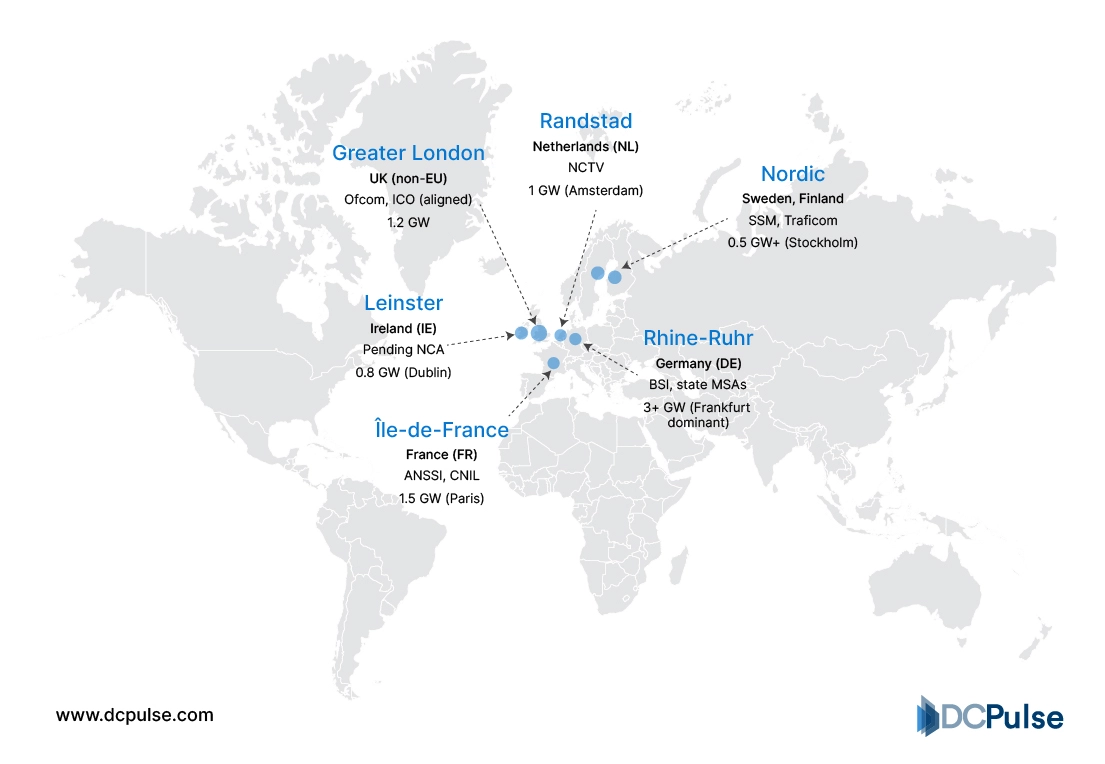

In Europe, the EU AI Act classifies AI systems by risk and places strict obligations on data governance, traceability, and oversight. While the law speaks the language of models and use cases, its downstream effect is unmistakable: operators are being pushed toward regionally bounded compute, auditable workloads, and clearer separation between regulated and non-regulated environments. For data centers, that translates into zoning, segmentation, and locality becoming compliance features, not design choices.

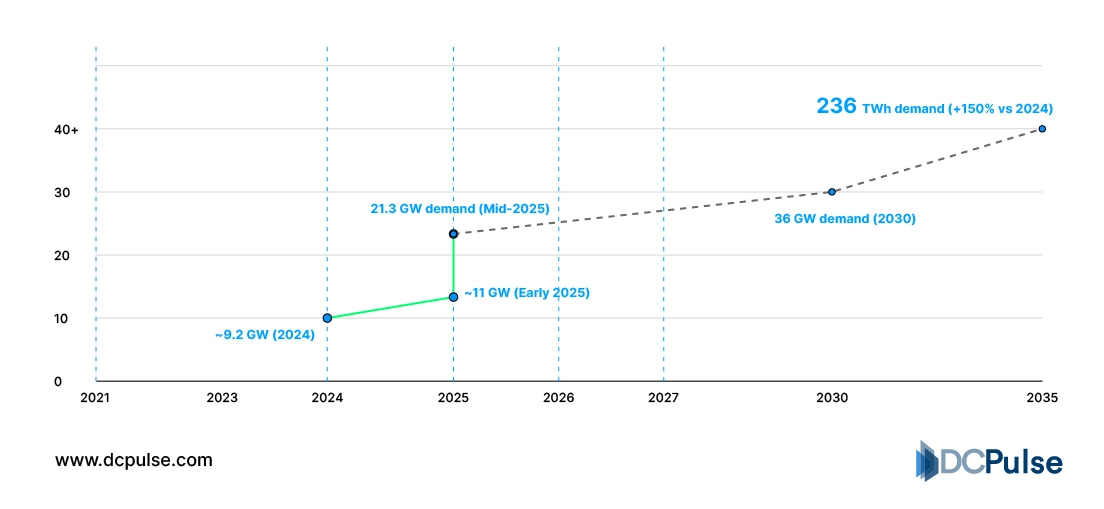

EU AI Act and Data Center Capacity Timeline

In the United States, regulation is more fragmented but no less influential. The 2023 White House Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence leans heavily on reporting, disclosure, and critical infrastructure protection, reinforcing the idea that large-scale compute is a strategic asset. Combined with sectoral rules and state-level energy and siting constraints, this has begun shaping where AI-heavy infrastructure can realistically scale.

China, meanwhile, takes a far more centralized approach. Its AI regulations tightly couple algorithm governance with data control and national infrastructure planning, leaving little separation between digital policy and physical deployment decisions.

Across Asia-Pacific, including India, policy signals are subtler but converging. India’s Digital Personal Data Protection Act and its push to attract data center investment are together shaping a future where compliance, energy sourcing, and air-quality realities intersect with AI growth ambitions.

Major Regulatory Regions Overlaid with AI-Driven Data Center Clusters

The pattern is clear: even without explicit infrastructure mandates, AI regulation is already steering infrastructure policy, indirectly but decisively.

Infrastructure Learns to Comply

As AI regulation matures, infrastructure is no longer being shaped only by performance metrics like latency, density, or cost. It is increasingly being designed to prove compliance by default. What is emerging is not a new class of data centers, but a new operating logic layered into existing ones.

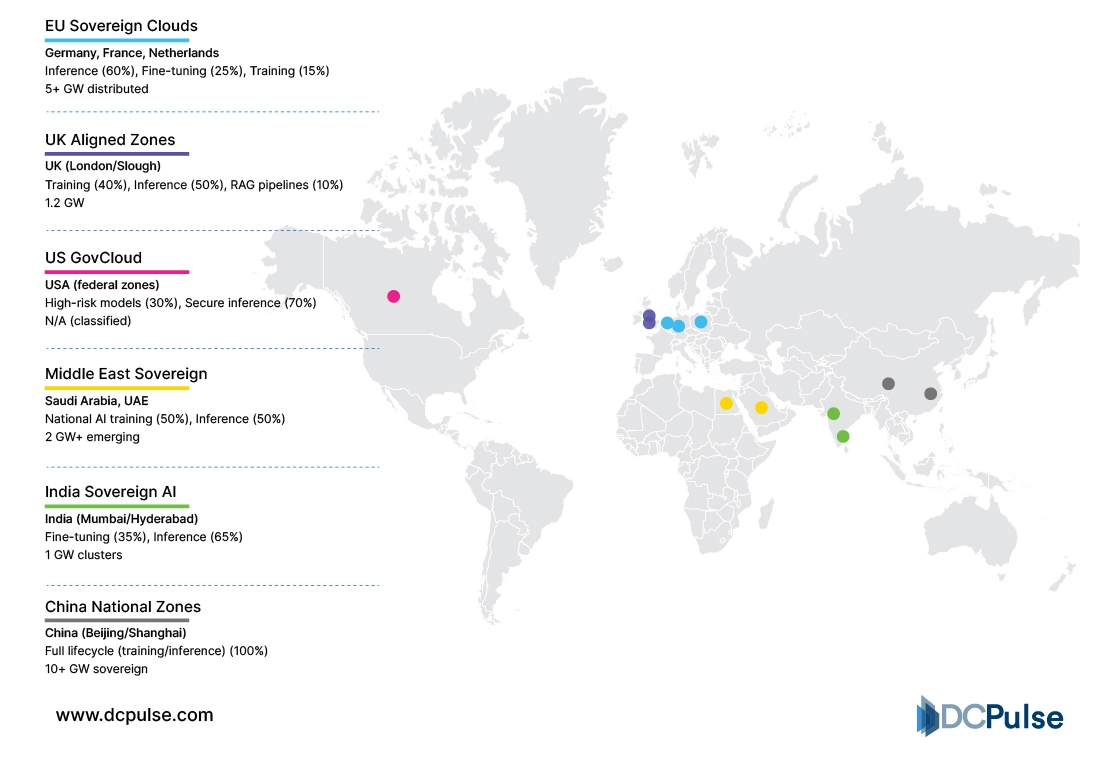

One of the clearest shifts is toward segmented and sovereign infrastructure zones. Rather than running AI workloads across shared, fluid pools of compute, operators are carving out dedicated environments tied to jurisdiction, risk classification, or sectoral use. These separations make it easier to meet regulatory expectations around traceability, data control, and audit readiness, requirements embedded in frameworks like the EU AI Act and mirrored in national guidelines elsewhere.

Sovereign AI Workloads Segmentation Across Infrastructure Zones
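
To make the segmentation logic concrete, here is a minimal sketch of a placement check that only admits a workload into zones matching its data jurisdiction, and restricts higher-risk workloads to dedicated zones. Everything in it is an assumption made for illustration: the zone names, the `risk_tier` labels, and the rule itself are hypothetical and are not taken from the EU AI Act or from any operator's actual policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    name: str
    jurisdiction: str  # e.g. "EU" (illustrative label)
    sovereign: bool    # True if isolated from shared global compute pools


@dataclass(frozen=True)
class Workload:
    name: str
    data_jurisdiction: str
    risk_tier: str     # hypothetical labels: "high" or "minimal"


def eligible_zones(workload, zones):
    """Return the zones where a workload may run under this illustrative rule."""
    result = []
    for zone in zones:
        if zone.jurisdiction != workload.data_jurisdiction:
            continue  # keep compute in the same jurisdiction as the data
        if workload.risk_tier == "high" and not zone.sovereign:
            continue  # high-risk workloads stay out of shared pools
        result.append(zone)
    return result


# Hypothetical inventory of infrastructure zones.
ZONES = [
    Zone("eu-sovereign-1", "EU", True),
    Zone("eu-shared-1", "EU", False),
    Zone("us-shared-1", "US", False),
]

high_risk = Workload("triage-model", "EU", "high")
low_risk = Workload("chat-summarizer", "EU", "minimal")

print([z.name for z in eligible_zones(high_risk, ZONES)])
print([z.name for z in eligible_zones(low_risk, ZONES)])
```

The point of the sketch is that placement becomes a rule evaluated before scheduling, so the answer to "where may this run?" is recorded rather than inferred after the fact.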

Auditability is becoming another defining design principle. Regulators are not asking data centers to explain models, but they are increasingly asking where models run, how data flows, and who has access. This is driving deeper integration of persistent logging, access controls, and infrastructure-level monitoring aligned with standards such as the NIST AI Risk Management Framework, which explicitly links governance to operational systems.
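
One way to picture infrastructure-level audit logging is an append-only log in which each entry records who did what, to which workload, in which zone, and is chained to the previous entry by a hash so that later edits are detectable. The sketch below is an assumption for illustration only: the field names are hypothetical, the hash chain is a generic tamper-evidence technique, and nothing here is prescribed by the NIST AI Risk Management Framework. A production log would also carry timestamps and be written to durable storage.

```python
import hashlib
import json


class AuditLog:
    """Append-only, hash-chained record of infrastructure events (illustrative)."""

    GENESIS = "0" * 64  # placeholder hash preceding the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(record):
        # Hash every field except the stored hash itself, in a stable key order.
        body = {k: v for k, v in record.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, actor, action, workload, zone):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {
            "actor": actor,
            "action": action,
            "workload": workload,
            "zone": zone,
            "prev_hash": prev_hash,
        }
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record

    def verify(self):
        """Return True if no entry was altered or reordered after being written."""
        prev_hash = self.GENESIS
        for record in self.entries:
            if record["prev_hash"] != prev_hash:
                return False
            if record["hash"] != self._digest(record):
                return False
            prev_hash = record["hash"]
        return True


log = AuditLog()
log.append("ops-alice", "deploy", "triage-model", "eu-sovereign-1")
log.append("ops-bob", "read-weights", "triage-model", "eu-sovereign-1")
print(log.verify())
```

If any stored field is changed after the fact, `verify()` returns `False`, which is the property an auditor cares about: the log can answer where a model ran and who touched it, and can show that the answer has not been rewritten.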

Energy use is also being pulled into the regulatory orbit. As AI workloads scale, governments are scrutinizing not just emissions, but grid impact and predictability. In response, operators are investing in energy-aware scheduling, regional load balancing, and tighter coordination with utilities, not as sustainability gestures, but as compliance safeguards in markets where power constraints can quickly become policy constraints.

AI Workload Growth vs. Grid Capacity Planning (2025–2030)
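
Energy-aware scheduling can be sketched in a few lines: given the grid headroom a utility expects in each time window, deferrable AI jobs are placed in the window with the most spare capacity, and held back when nothing fits. This is a deliberately simple greedy heuristic under stated assumptions; the headroom figures, job names, and megawatt values are invented for illustration, and real schedulers would also weigh deadlines, pricing, and carbon intensity.

```python
def schedule_jobs(headroom_mw, jobs):
    """Greedy placement of deferrable jobs into time windows.

    headroom_mw: dict mapping a window label (here, hour of day) to spare MW.
    jobs: dict mapping a job name to its power demand in MW.
    Returns a dict mapping each job to its window, or None if it is deferred.
    """
    remaining = dict(headroom_mw)  # copy so the caller's forecast is untouched
    plan = {}
    # Place the largest jobs first so they are not crowded out by small ones.
    for name, demand in sorted(jobs.items(), key=lambda kv: kv[1], reverse=True):
        window = max(remaining, key=remaining.get)  # window with most headroom
        if remaining[window] < demand:
            plan[name] = None  # nothing fits: defer rather than strain the grid
            continue
        remaining[window] -= demand
        plan[name] = window
    return plan


# Hypothetical forecast: spare grid capacity (MW) at 02:00 and 14:00.
forecast = {2: 10, 14: 7}
# Hypothetical deferrable jobs and their power draw (MW).
pending = {"train-a": 6, "tune-c": 5, "batch-b": 3}

plan = schedule_jobs(forecast, pending)
print(plan)
```

With these numbers, the largest job lands in the overnight window, the second-largest in the afternoon window, and the smallest fills the remaining overnight headroom. A job larger than any window's headroom would be deferred instead of scheduled.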


None of these changes is a radical technology. They are architectural adjustments driven by regulatory pressure, quietly redefining how infrastructure is planned and operated. The message is subtle but firm: AI regulation does not need to mention data centers explicitly to reshape them. It already does.

When Policy Starts Moving Capital

As AI regulation tightens, infrastructure investment decisions are becoming visibly policy-aligned. What looks, on the surface, like routine expansion is increasingly driven by regulatory positioning rather than pure demand forecasting.

Hyperscalers have been among the earliest to adjust. Microsoft’s EU Data Boundary for the Microsoft Cloud is a clear example of infrastructure being reorganized to meet regional governance expectations, committing to keep customer data and processing within the EU for regulated workloads. While framed as a privacy measure, it has direct implications for where capacity is built and how workloads are architected across European regions.

Google and AWS are following similar paths. Google’s sovereign cloud offerings and AWS’s announcement of a dedicated European Sovereign Cloud reflect a broader shift: separating regulated AI workloads from global platforms to satisfy compliance, auditability, and control requirements emerging from AI and data laws. These are not niche products; they represent structural changes in how infrastructure is deployed.

Governments, meanwhile, are becoming more directly involved. Across Europe and parts of Asia, public-sector backed data center initiatives are positioning sovereign compute as national infrastructure, on par with energy or telecommunications. These efforts are less about owning hardware and more about guaranteeing compliant capacity for regulated AI use cases in healthcare, finance, and public services.

Global AI Policy & Sovereign Investment (2024–2026)


In emerging markets like India, the response is more nuanced. Policy frameworks encourage AI growth and data center investment simultaneously, but constraints around power availability, land use, and environmental compliance are beginning to shape where AI infrastructure can realistically scale. The result is a quieter form of policy influence, one that nudges capital toward specific regions, energy models, and deployment sizes without explicit AI mandates.

Across all regions, the signal is consistent. Infrastructure capital is flowing not just toward growth markets, but toward regulatory certainty. In an AI-driven world, policy alignment is becoming a prerequisite for long-term infrastructure viability.

Planning for Policy, Not Just Performance

As regulation around artificial intelligence takes firmer shape, the next frontier for data center strategy will be policy-aligned infrastructure planning, not just performance optimization. Governments and enterprises alike now view compute and data as strategic assets, subject to legal, economic, and geopolitical constraints that ripple through siting, capacity, and operational choices.

For example, hyperscale providers are expanding sovereign cloud capabilities and localized processing to meet both regulatory compliance and customer demand for jurisdictional control of data and AI workloads.

Across Europe, regulatory emphasis on data sovereignty continues to drive differentiated infrastructure commitments, including plans to expand data center capacity by roughly 40% and to host AI services under local governance structures.

Data center professionals should treat AI regulation as an infrastructure design constraint, not a compliance afterthought. Planning must increasingly account for regional policies, sovereignty requirements, and auditability layers as core drivers of capacity, not peripheral concerns.

In this evolving environment, operators who integrate regulatory foresight into site selection, energy strategy, and customer offerings will gain a strategic edge, turning compliance pressures into predictable build-out pathways rather than ad-hoc responses.