Hyperscale data centers were built to grow wide, not dense.

For most of the past decade, expansion meant adding buildings, securing more land, and pulling in more megawatts. Power planning assumed margin. Cooling scaled predictably. Racks grew, but not explosively.

That model is now under strain.

AI workloads have changed the physics inside the data hall. Power demand no longer rises evenly across a facility; it spikes. A single cluster can redraw thermal maps, overwhelm electrical paths, and compress years of design assumptions into months. Space still exists, but usable power density does not.

What operators are confronting isn’t a lack of capacity in absolute terms. It’s the inability to concentrate power safely, repeatedly, and economically where modern computing demands it.

As hyperscale operators push toward denser architectures, power density optimization is no longer an efficiency exercise. It is becoming both the defining constraint and the defining opportunity of the next phase of data center design.

Where Density Breaks the Old Blueprint

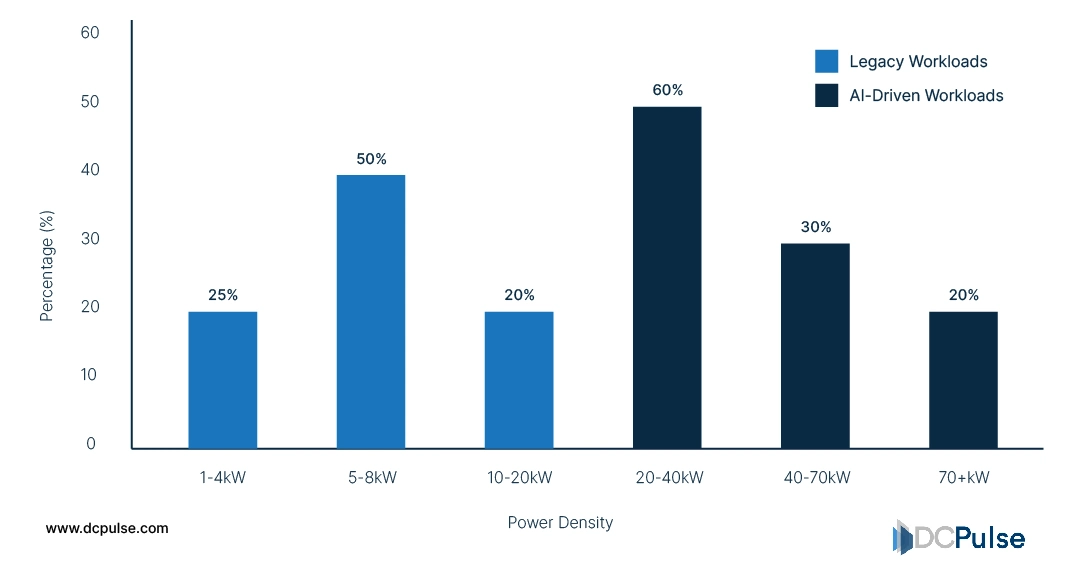

Hyperscale data center design is undergoing a fundamental shift as power density, the amount of electrical power drawn per rack, climbs rapidly beyond historic norms. For much of the 2010s and early 2020s, standard racks in cloud and enterprise facilities averaged 7-10 kW per rack. These densities were manageable with conventional power distribution and air-based cooling systems.

Today, AI and high-performance workloads are dramatically reshaping that baseline. Industry research shows that hyperscale data centers are now routinely operating in ranges far above legacy levels, with many facilities averaging 15-30 kW per rack, and clusters with 30-100 kW or higher becoming increasingly common in AI-centric deployments.

Figure: Rack density distribution, legacy vs AI-driven workloads. AI workloads consume roughly 2-7x more power per rack than legacy systems.

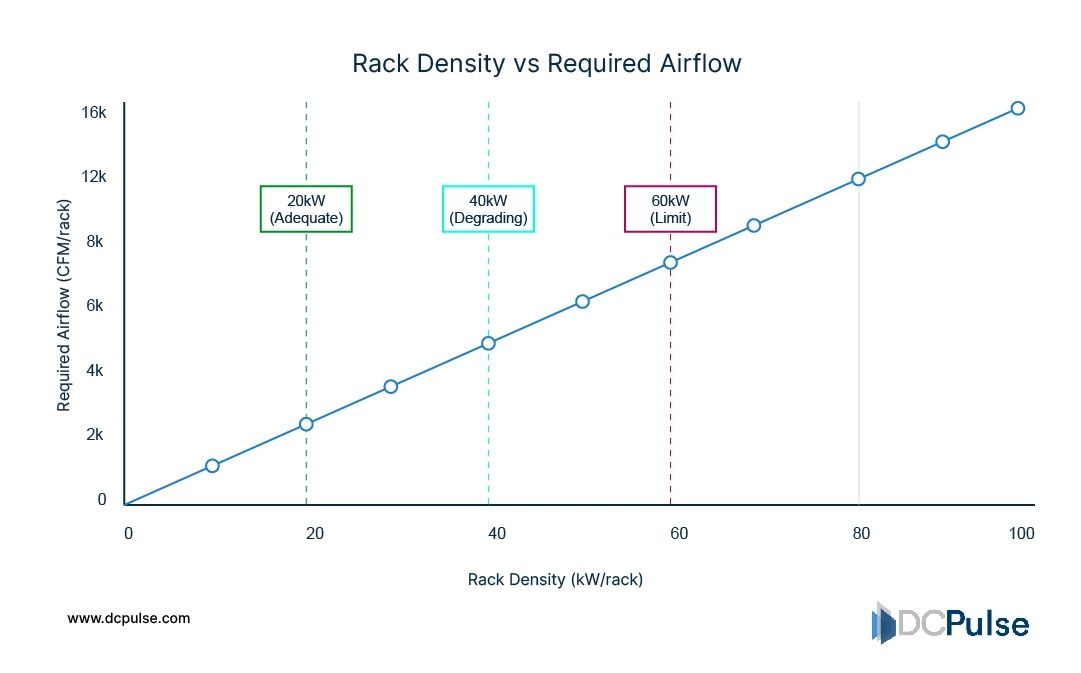

This jump in power per rack has a cascading effect on infrastructure. Electrical systems originally engineered for smoother, lower aggregate draws begin to exhibit stress under more concentrated loads. Busways, power distribution units (PDUs), and uninterruptible power supplies (UPS) must be oversized or reconfigured to deliver high currents safely and consistently across dense compute zones. At the same time, traditional air-cooling systems, effective up to roughly 20-35 kW per rack, struggle to maintain thermal stability as heat output escalates.

Figure: Cooling response curve, airflow limits vs rack density. Fan power rises with the cube of airflow (fan affinity laws), and air cooling degrades beyond roughly 40 kW per rack.
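The air-cooling ceiling follows from two relationships: the sensible-heat relation P = ρ · V̇ · c_p · ΔT fixes how much air a rack needs, and, for a given fan and duct system, the fan affinity laws make fan power scale with the cube of flow. The sketch below is a minimal Python illustration, assuming approximate sea-level air properties and a hypothetical 12 K inlet-to-outlet temperature rise:

```python
AIR_DENSITY = 1.2      # kg/m^3, approximate for air near 20 °C at sea level
AIR_CP = 1005.0        # J/(kg*K), approximate specific heat of air
M3S_TO_CFM = 2118.88   # cubic feet per minute in one m^3/s


def required_airflow_m3s(rack_kw: float, delta_t_k: float) -> float:
    """Airflow that removes rack_kw of heat with a delta_t_k air temperature
    rise (sensible heat only: P = rho * V_dot * cp * dT)."""
    return rack_kw * 1000.0 / (AIR_DENSITY * AIR_CP * delta_t_k)


def fan_power_ratio(flow_ratio: float) -> float:
    """Fan affinity law: for the same fan and system, shaft power scales with flow cubed."""
    return flow_ratio ** 3


DELTA_T_K = 12.0  # hypothetical inlet-to-outlet temperature rise
baseline = required_airflow_m3s(10.0, DELTA_T_K)
for kw in (10.0, 20.0, 40.0, 80.0):
    flow = required_airflow_m3s(kw, DELTA_T_K)
    ratio = flow / baseline
    print(f"{kw:4.0f} kW: {flow:5.2f} m^3/s ({flow * M3S_TO_CFM:6.0f} CFM), "
          f"{ratio:.0f}x airflow, ~{fan_power_ratio(ratio):.0f}x fan power through the same fans")
```

Airflow grows linearly with rack power, so an 80 kW rack needs eight times the air of a 10 kW rack; forcing that through the same fans would cost roughly 512x the fan power. Real deployments add fans or open up air paths instead, but linear growth in air volume combined with superlinear growth in fan energy is why air cooling runs out of headroom as density climbs.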

The mismatch between installed capacity and deployable capacity is now visible in many hyperscale campuses. Operators may have utility contracts and transformers capable of delivering tens of megawatts, yet are unable to translate that capacity into usable compute without redesigning parts of the facility. Cooling pathways become bottlenecks, airflow management turns fragile, and thermal hotspots increase operational risk.

As densities climb, the industry debate increasingly revolves around how much power a given square meter can tolerate rather than how much total campus capacity is available. This reality, where usable capacity lags headline capacity due to power and thermal constraints, defines the current landscape of hyperscale power density optimization.
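The gap between headline and usable capacity can be treated as a minimum over independent limits. The following is a minimal sketch, assuming a hall is described by four hypothetical parameters (the `Hall` fields below) and that racks whose target density exceeds what a position supports are partially populated down to that limit:

```python
from dataclasses import dataclass


@dataclass
class Hall:
    utility_mw: float      # headline electrical capacity available for IT load
    cooling_mw: float      # heat the installed cooling plant can reject
    rack_positions: int    # physical rack positions on the floor
    max_rack_kw: float     # per-position limit set by power distribution and cooling


def deployable_it_mw(hall: Hall, target_rack_kw: float) -> float:
    """Usable IT load is bounded by whichever limit binds first."""
    # Racks cannot run above what their position supports, so dense racks are de-rated.
    per_rack_kw = min(target_rack_kw, hall.max_rack_kw)
    floor_limit_mw = hall.rack_positions * per_rack_kw / 1000.0
    return min(hall.utility_mw, hall.cooling_mw, floor_limit_mw)


# Hypothetical hall: 20 MW headline, 18 MW of heat rejection, 800 positions.
air_cooled = Hall(utility_mw=20.0, cooling_mw=18.0, rack_positions=800, max_rack_kw=15.0)
liquid_ready = Hall(utility_mw=20.0, cooling_mw=18.0, rack_positions=800, max_rack_kw=100.0)

for name, hall in (("air-cooled", air_cooled), ("liquid-ready", liquid_ready)):
    usable = deployable_it_mw(hall, target_rack_kw=80.0)
    print(f"{name}: {usable:.1f} MW usable of {hall.utility_mw:.0f} MW headline")
```

With 80 kW racks, the air-cooled layout is floor-bound at 12 MW; making positions liquid-ready lifts that ceiling until the 18 MW cooling plant becomes the binding limit. Neither case reaches the 20 MW headline figure.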

Rebuilding the Stack for Concentrated Power

Operators and system designers are pursuing several technical frontiers to unlock usable power density beyond what traditional architectures support. These efforts fall broadly into cooling breakthroughs, power delivery re-architectures, and intelligent thermal and power control, all aimed at squeezing more compute into each square meter without tipping into operational risk.

Liquid cooling, especially direct-to-chip (D2C) and immersion systems, is rapidly moving from niche deployments into mainstream hyperscale builds. Air simply can’t keep up with racks drawing 50-120 kW and beyond, and operators increasingly view liquids as the only scalable solution.

However, these technologies aren’t plug-and-play: they require bespoke fluid distribution systems, leak-safe infrastructure, and often new rack designs, all of which change how power density optimization is architected at scale.
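The case for liquids rests largely on heat capacity per unit volume. The sketch below applies the sensible-heat relation to compare the volume flow of air and water needed to remove the heat of a hypothetical 100 kW rack at a 10 K temperature rise. It assumes approximate room-temperature properties, pure water rather than the water-glycol mixes many loops use, and that the liquid loop captures all of the rack's heat, which direct-to-chip systems in practice do only in part:

```python
FLUIDS = {
    # name: (density in kg/m^3, specific heat in J/(kg*K)), approximate near room temperature
    "air": (1.2, 1005.0),
    "water": (997.0, 4186.0),
}


def volumetric_flow_m3s(heat_kw: float, delta_t_k: float, fluid: str) -> float:
    """Volume flow needed to carry heat_kw with a delta_t_k temperature rise."""
    rho, cp = FLUIDS[fluid]
    return heat_kw * 1000.0 / (rho * cp * delta_t_k)


RACK_KW, DELTA_T_K = 100.0, 10.0  # hypothetical rack load and coolant temperature rise
air = volumetric_flow_m3s(RACK_KW, DELTA_T_K, "air")
water = volumetric_flow_m3s(RACK_KW, DELTA_T_K, "water")
print(f"air:   {air:.2f} m^3/s")
print(f"water: {water * 1000:.2f} L/s ({water * 60_000:.0f} L/min)")
print(f"air needs ~{air / water:,.0f}x the volume flow of water")
```

Water carries roughly 3,500 times more heat per unit volume than air, so a few liters per second of coolant replaces several cubic meters per second of air. That gain is what the bespoke fluid distribution and leak-safe infrastructure are paying for.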

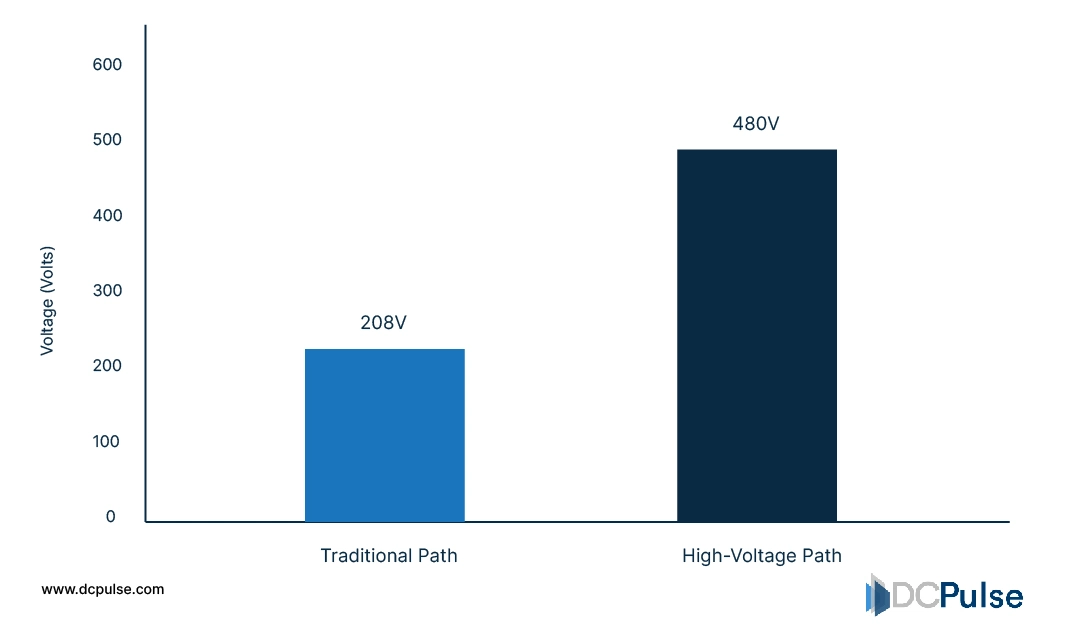

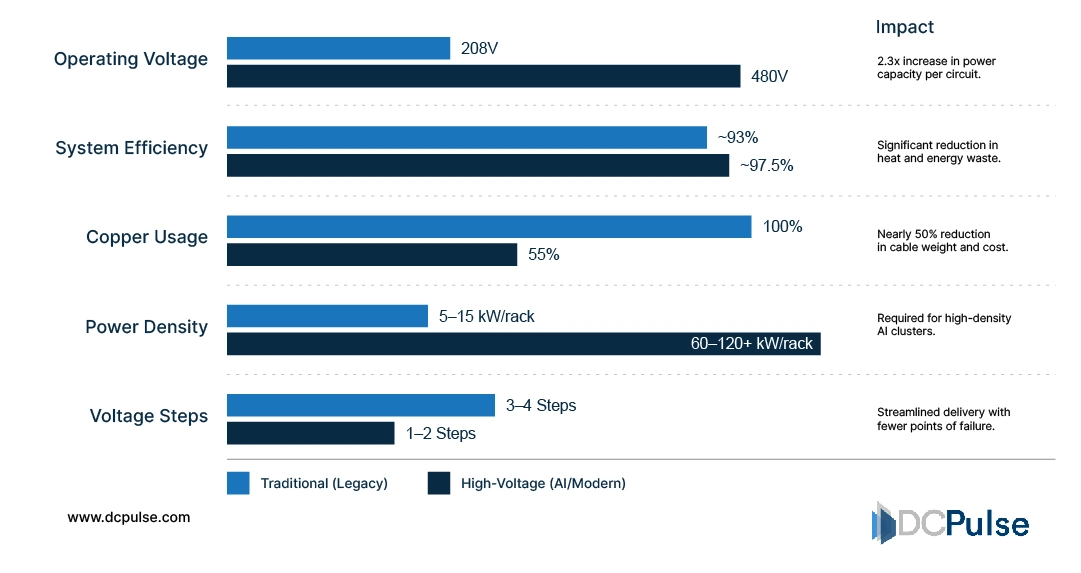

The second innovation vector lies in how power actually gets to the rack. Traditional distribution paradigms (low-voltage DC or multi-stage AC-DC conversion) hit physical limits as currents balloon with rising rack draws. Operators and standards bodies are increasingly exploring higher-voltage DC distribution and wide-bandgap semiconductors such as silicon carbide (SiC) and gallium nitride (GaN) to improve conversion efficiency and thermal performance.

Figure: Data center power delivery, voltage comparison.

These approaches reduce the number of power conversion steps, sharply lower losses, and enable more compact, efficient power infrastructure.
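Fewer conversion steps lower losses because the efficiencies of stages in series multiply. The following is a minimal sketch; the stage names and per-stage efficiencies are illustrative placeholders, not measurements of any vendor's equipment:

```python
from math import prod


def chain_efficiency(stages):
    """Stages in series: end-to-end efficiency is the product of stage efficiencies."""
    return prod(eff for _name, eff in stages)


# Hypothetical per-stage efficiencies, for illustration only.
legacy = [("double-conversion UPS", 0.96), ("PDU transformer", 0.98), ("server AC-DC PSU", 0.95)]
hvdc = [("facility rectifier to HVDC", 0.975), ("rack-level DC-DC", 0.975)]

for label, chain in (("multi-stage AC", legacy), ("higher-voltage DC", hvdc)):
    eff = chain_efficiency(chain)
    # Input needed per 1 MW delivered is 1/eff MW; the excess is lost as heat.
    loss_kw = 1000.0 * (1.0 / eff - 1.0)
    print(f"{label:18s} {len(chain)} stages, {eff:.1%} end to end, "
          f"~{loss_kw:.0f} kW lost per MW delivered")
```

Every lost kilowatt also becomes heat that the cooling plant must reject inside the same dense footprint, which is why conversion efficiency and thermal performance improve together.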

High-voltage DC (400-800 V) distribution is another key trend highlighted by industry bodies such as the Open Compute Project. Raising the voltage reduces the current needed for a given load, enabling simpler, lighter busbars and fewer bulky components, which is critical when megawatt-class power must be delivered into dense environments.
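The benefit of higher distribution voltage follows from I = P / V and resistive loss P_loss = I²R: for the same conductor, doubling voltage halves current and quarters conductor loss. The sketch compares a hypothetical 100 kW rack fed at 48 V (an assumed low-voltage baseline) with 400 V and 800 V, through an assumed 0.5 mΩ path resistance:

```python
def dc_current_a(power_w: float, voltage_v: float) -> float:
    """DC current drawn for a given load: I = P / V."""
    return power_w / voltage_v


def conductor_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Resistive (I^2 R) loss in the distribution path."""
    i = dc_current_a(power_w, voltage_v)
    return i * i * resistance_ohm


RACK_W = 100_000.0   # hypothetical rack load
R_PATH = 0.0005      # hypothetical round-trip busbar/cable resistance, ohms

for v in (48.0, 400.0, 800.0):
    print(f"{v:4.0f} V: {dc_current_a(RACK_W, v):7.0f} A, "
          f"{conductor_loss_w(RACK_W, v, R_PATH):8.1f} W lost in the path")
```

Holding the conductor fixed is a simplification; in practice much of the gain is taken as smaller, lighter busbars and cables sized for the lower current, which is the trade-off the 400-800 V proposals target.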

Beyond raw hardware, hyperscale operators are layering intelligent control systems into cooling and power infrastructure. At the facility and cabinet level, adaptive thermal management uses real-time feedback to adjust coolant flow, fan speeds, and even rack workload placement for efficiency and safety. Some exploratory research suggests machine learning agents could optimize liquid cooling parameters across complex hardware stacks, balancing energy efficiency against thermal risk dynamically.
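Feedback-based thermal control can be illustrated with a conventional PI (proportional-integral) loop, a much simpler technique than the machine-learning agents mentioned above. The sketch trims coolant flow to hold a rack's outlet temperature at a setpoint through a load step. The plant is a toy first-order model, and every value (setpoint, gains, time constant, pump limits, the 40 to 80 kW step) is hypothetical rather than taken from any real coolant distribution unit:

```python
CP_COOLANT = 4186.0  # J/(kg*K); assumes a water loop


def run_loop(heat_profile_w, setpoint_c=45.0, inlet_c=30.0, dt_s=1.0,
             kp=0.05, ki=0.005, tau_s=20.0,
             flow0=0.6, flow_min=0.1, flow_max=3.0):
    """Simulate a PI loop that trims coolant mass flow (kg/s) to hold the
    rack's outlet temperature at setpoint_c. Returns (temps, flows)."""
    t_out = setpoint_c
    flow = flow0
    prev_err = t_out - setpoint_c
    temps, flows = [], []
    for heat_w in heat_profile_w:
        err = t_out - setpoint_c  # positive when the loop runs too hot
        # Velocity-form PI: the flow itself is the integrator state, so
        # clamping it to pump limits also prevents integral windup.
        flow += kp * (err - prev_err) + ki * err * dt_s
        flow = min(max(flow, flow_min), flow_max)
        prev_err = err
        # Toy plant: outlet temperature relaxes (first-order lag) toward the
        # steady state implied by heat = flow * cp * (t_out - t_in).
        t_steady = inlet_c + heat_w / (flow * CP_COOLANT)
        t_out += (t_steady - t_out) * dt_s / tau_s
        temps.append(t_out)
        flows.append(flow)
    return temps, flows


# Hypothetical load step: 300 s at 40 kW, then an AI job pushes the rack to 80 kW.
temps, flows = run_loop([40_000.0] * 300 + [80_000.0] * 300)
print(f"peak outlet {max(temps):.1f} °C, final {temps[-1]:.2f} °C, "
      f"final flow {flows[-1]:.2f} kg/s")
```

After the step, the outlet temperature overshoots briefly and the loop settles near the flow that carries 80 kW at a 15 K rise. The velocity form keeps the integrator inside the clamped flow value, so the loop cannot wind up while the pump sits at a limit.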

These innovations (cooling architectures, power delivery shifts, and intelligent controls) are not isolated. They form an interlocking ecosystem designed to make high power density not just technically feasible but operationally sound.

The next leap in hyperscale capability won’t come from bigger megawatt budgets alone, but from smarter and more integrated infrastructure that can handle concentrated power demands without compromise.

How Hyperscalers Are Acting on the Density Constraint

As power density moves from theoretical challenge to operational imperative, hyperscale players aren’t waiting; they’re acting. Across the industry, infrastructure choices today signal a shift toward designs that bake density into the blueprint rather than trying to retrofit it later.

One of the clearest examples is the mainstream adoption of liquid cooling. Operators such as AWS are integrating both hybrid air-plus-direct-to-chip and fully liquid-cooled systems in new facilities to manage heat from racks running well above traditional power levels.

The trend is moving beyond niche: industry forecasts show liquid cooling deployed in a growing share of hyperscale builds as rack power densities rise to 50-100 kW and beyond.

Figure: Hyperscale liquid cooling adoption curve (2018-2019).

Colocation and hyperscale providers are including liquid-ready infrastructure as a standard rather than an add-on. Digital Realty and Equinix, for example, are marketing high-density suites and “AI-ready” facilities with power and cooling infrastructure explicitly designed for GPUs and accelerators.

Power delivery is also catching up. Collaborative initiatives like those emerging from the Open Compute Project are pushing higher-voltage distribution standards (e.g., ±400 VDC) and modular power racks, reducing conversion losses and physical bulk, which is critical for packing more usable power into dense halls.

Beyond cooling and distribution, the planning criteria themselves are changing. New campus designs evaluate deployable density per hall, not just headline megawatts, when selecting sites, and they shape mechanical systems, electrical paths, and floor layouts around concentrated loads from the outset.

These moves reflect an industry quietly but intentionally rearchitecting infrastructure so that power density is a design objective, not an afterthought.

From Megawatts to Meaningful Density

Power density optimization is no longer a tactical upgrade; it is becoming a primary design variable for hyperscale growth. Over the next few years, operators that can reliably support higher densities will gain flexibility in site selection, build timelines, and capital efficiency, while those tied to legacy assumptions risk underutilized campuses.

Industry guidance from the Uptime Institute reinforces this shift, noting that usable capacity is increasingly constrained by cooling and power distribution rather than total megawatts available.

Strategically, this pushes hyperscalers toward fewer but more capable halls, designed around liquid readiness, simplified power paths, and density-aware layouts. Standards bodies such as the Open Compute Project are accelerating this transition by aligning vendors and operators around high-density-ready power architectures.

The takeaway is clear: future hyperscale advantage will not come from who secures the most power, but from who can concentrate, cool, and control it most effectively. Power density is no longer an engineering detail; it is a strategic constraint shaping the next phase of cloud infrastructure.