The world’s biggest AI models are growing faster than the machines meant to keep them cool. If you walk into a modern AI data center today, it doesn’t sound like the ones you remember. The air feels thicker. Heat isn’t being fought anymore; it’s being managed, recycled, and sometimes even sold.

The old methods (air ducts, fans, and raised floors) are struggling to keep up. Cooling, once an afterthought, has become a design discipline of its own. And as GPU racks climb past 100 kilowatts each, one question echoes through the industry:

Will air still be enough, or will liquid take over by 2030?

What’s keeping today’s data centers cool, and for how long?

Right now, air still runs most of the world’s data halls. Operators surveyed by the Uptime Institute report that, even as excitement builds, only a small share of their data centers use liquid cooling today, though that is starting to change fast.

Current Usage and Consideration of Direct Liquid Cooling (DLC) in Data Centers

So why are operators rethinking cooling? It’s the compute density.

Modern AI servers pack multiple high-power accelerators. NVIDIA H100/H200 variants, for example, run at hundreds of watts each, and up to ~700 W in high-power SKUs. Put eight of those GPUs into a single server, and you’re already at several kilowatts just for the accelerators (8 × 700 W ≈ 5.6 kW at the top end). NVIDIA’s DGX/HGX reference layouts show how quickly that adds up to tens of kilowatts per rack, depending on configuration.
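The arithmetic behind that claim is easy to sketch. The snippet below is a back-of-the-envelope estimate, not a vendor sizing tool: the function names, the four-servers-per-rack figure, and the 50% overhead for CPUs, memory, networking, fans, and power conversion are all assumptions chosen for illustration. Only the eight GPUs and the ~700 W ceiling come from the text above.

```python
def accelerator_load_kw(gpus_per_server: int, watts_per_gpu: float) -> float:
    """Accelerator-only power for one server, in kilowatts."""
    return gpus_per_server * watts_per_gpu / 1000.0


def rack_load_kw(servers_per_rack: int, gpus_per_server: int,
                 watts_per_gpu: float, overhead_fraction: float) -> float:
    """Rough rack IT load in kilowatts.

    overhead_fraction is an assumed allowance for everything that is not
    an accelerator (CPUs, memory, NICs, fans, power-conversion losses).
    """
    per_server = accelerator_load_kw(gpus_per_server, watts_per_gpu)
    return servers_per_rack * per_server * (1.0 + overhead_fraction)


if __name__ == "__main__":
    # Eight GPUs at the ~700 W ceiling quoted above.
    print(f"{accelerator_load_kw(8, 700.0):.1f} kW of accelerators per server")
    # Hypothetical rack: four such servers plus 50% non-GPU overhead.
    print(f"{rack_load_kw(4, 8, 700.0, 0.5):.1f} kW per rack")
```

With these illustrative inputs, a four-server rack already lands in the low tens of kilowatts; denser layouts scale linearly from there.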


At that scale, it’s a matter of physics more than engineering. Air simply can’t move that much thermal load efficiently: its low density and low heat capacity mean enormous volumes have to be pushed through every rack. Multiple dense servers per rack, heavy networking and storage gear, and inevitable power-conversion losses push total IT load into the dozens of kilowatts, and in AI or HPC setups, into the 70-150 kW range that once seemed unthinkable.
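The physics can be made concrete with the steady-state heat balance Q = ρ · V̇ · c_p · ΔT, solved for the volumetric flow V̇. The sketch below compares air and water for a 100 kW rack. The fluid properties are standard room-temperature approximations, and the 15 K coolant temperature rise is an assumed design value, not a figure from any operator.

```python
# Approximate properties near room temperature (textbook values).
AIR = {"density": 1.2, "cp": 1005.0}      # kg/m^3, J/(kg*K)
WATER = {"density": 997.0, "cp": 4180.0}  # kg/m^3, J/(kg*K)


def volumetric_flow_m3_s(heat_w: float, fluid: dict, delta_t_k: float) -> float:
    """Flow needed to carry heat_w watts with a coolant temperature rise
    of delta_t_k kelvin, from Q = rho * V * cp * dT."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (fluid["density"] * fluid["cp"] * delta_t_k)


if __name__ == "__main__":
    rack_heat_w = 100_000.0   # a 100 kW rack
    delta_t = 15.0            # assumed inlet-to-outlet rise, in kelvin

    air = volumetric_flow_m3_s(rack_heat_w, AIR, delta_t)
    water = volumetric_flow_m3_s(rack_heat_w, WATER, delta_t)

    print(f"air:   {air:.2f} m^3/s")
    print(f"water: {water * 1000:.2f} L/s")
    print(f"air needs about {air / water:,.0f}x the volume")
```

Under these assumptions, air has to move several cubic metres per second through a single rack, while water does the same job at a couple of litres per second: a volume ratio in the thousands.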

Evolution of Data Center Rack Power Density (kW)

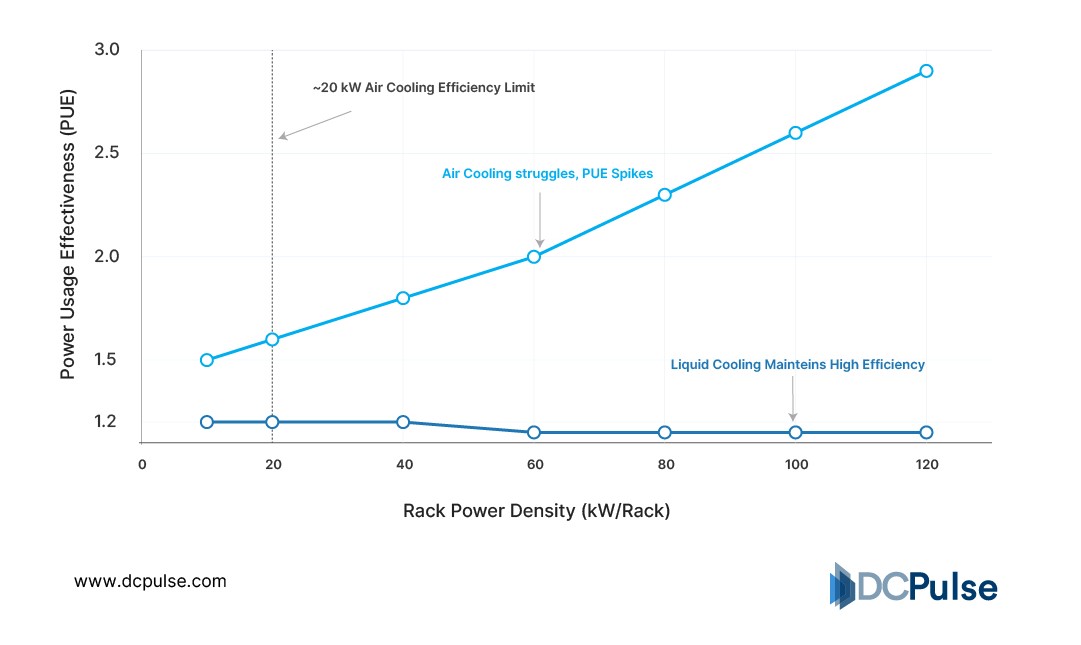

That shift reshapes the PUE (power usage effectiveness) equation as well. Air systems can still be efficient at moderate densities, but once you hit those extreme kW figures, the cost of moving conditioned air, and of the extra chillers and compressors that implies, spikes.
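PUE is defined as total facility energy divided by IT equipment energy, so every extra kilowatt spent on cooling raises it directly. A minimal sketch follows; the load figures are hypothetical round numbers picked only to show how the ratio moves, not measurements from any facility.

```python
def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float = 0.0) -> float:
    """Power usage effectiveness: total facility power / IT power.

    A value of 1.0 would mean every watt goes to IT equipment.
    """
    if it_kw <= 0:
        raise ValueError("it_kw must be positive")
    return (it_kw + cooling_kw + other_overhead_kw) / it_kw


if __name__ == "__main__":
    # Hypothetical 1 MW IT load with 100 kW of non-cooling overhead.
    print(f"heavy cooling load: PUE = {pue(1000.0, 400.0, 100.0):.2f}")
    print(f"light cooling load: PUE = {pue(1000.0, 100.0, 100.0):.2f}")
```

In this made-up comparison, cutting cooling power from 400 kW to 100 kW moves PUE from 1.50 to 1.20 for the same IT load.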


PUE vs. Rack Density

That’s the practical reason hyperscalers and large operators are testing cold plates, immersion, and other liquid approaches: they make it possible to run denser racks without steep jumps in cooling cost. Microsoft, Google, and other hyperscalers have published or piloted liquid approaches for precisely those reasons.

What new cooling ideas are rewriting the rules?

The shift toward liquid cooling isn’t just about pipes and pumps anymore; it’s about precision. The new generation of cooling technologies is being engineered for scale, efficiency, and sustainability, all at once.

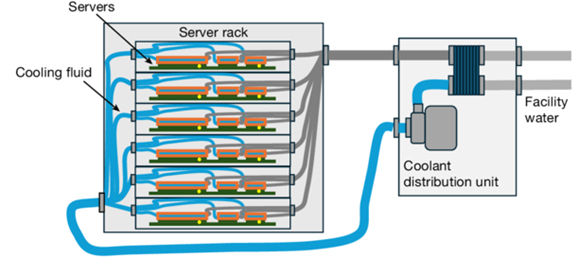

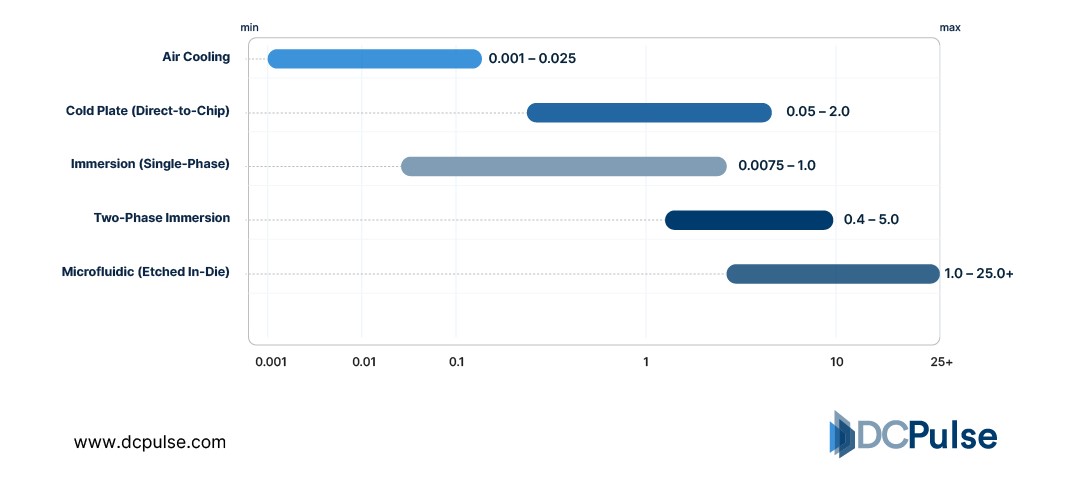

Direct-to-chip (D2C) is the most immediate bridge between air and full immersion. Vendors like LiquidStack are proving how far it can go. Earlier this year, the company launched its GigaModular CDU, a compact but industrial-grade coolant distribution unit that supports up to 10 MW of capacity for multi-megawatt AI rows, bringing the liquid closer to the rack with minimal footprint.

These systems don’t just move heat efficiently; they give operators the flexibility to retrofit air-cooled halls without tearing down the entire thermal loop.

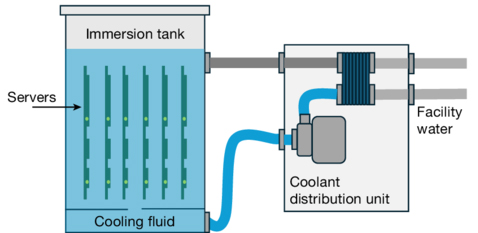

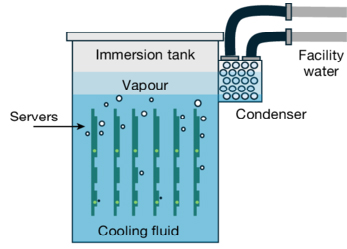

Then comes immersion cooling, where servers bathe directly in dielectric fluids. Single-phase systems circulate the liquid for continuous convection, while two-phase designs let the fluid boil and condense for higher heat transfer.
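Why boiling helps can be shown with two textbook relations: a single-phase loop carries sensible heat (ṁ · c_p · ΔT), while a two-phase system carries latent heat (ṁ · h_fg) as the fluid vaporizes. The sketch below compares the two per kilogram of fluid. The property values are round, illustrative numbers in the general range of engineered dielectric fluids; they are assumptions, not the data sheet of any product.

```python
def sensible_heat_w(mass_flow_kg_s: float, cp_j_per_kg_k: float,
                    delta_t_k: float) -> float:
    """Heat carried by a liquid that warms by delta_t_k without boiling."""
    return mass_flow_kg_s * cp_j_per_kg_k * delta_t_k


def latent_heat_w(evaporation_rate_kg_s: float, h_fg_j_per_kg: float) -> float:
    """Heat absorbed by a liquid that boils at the given mass rate."""
    return evaporation_rate_kg_s * h_fg_j_per_kg


if __name__ == "__main__":
    # Illustrative, assumed fluid properties (not a specific product).
    cp = 1100.0         # J/(kg*K)
    h_fg = 100_000.0    # J/kg, latent heat of vaporization
    delta_t = 10.0      # K, assumed single-phase temperature rise

    single = sensible_heat_w(1.0, cp, delta_t)
    two_phase = latent_heat_w(1.0, h_fg)
    print(f"single-phase: {single / 1000:.0f} kW per kg/s")
    print(f"two-phase:    {two_phase / 1000:.0f} kW per kg/s")
```

With these assumed numbers, each kilogram of boiling fluid absorbs roughly nine times the heat of a kilogram that merely warms by 10 K, which is the intuition behind the "higher heat transfer" claim. Real systems depend heavily on the fluid and hardware chosen.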

a. Cold-Plate Cooling / Direct-to-Chip

b. Single-Phase Immersion Cooling

c. Two-Phase Immersion Cooling

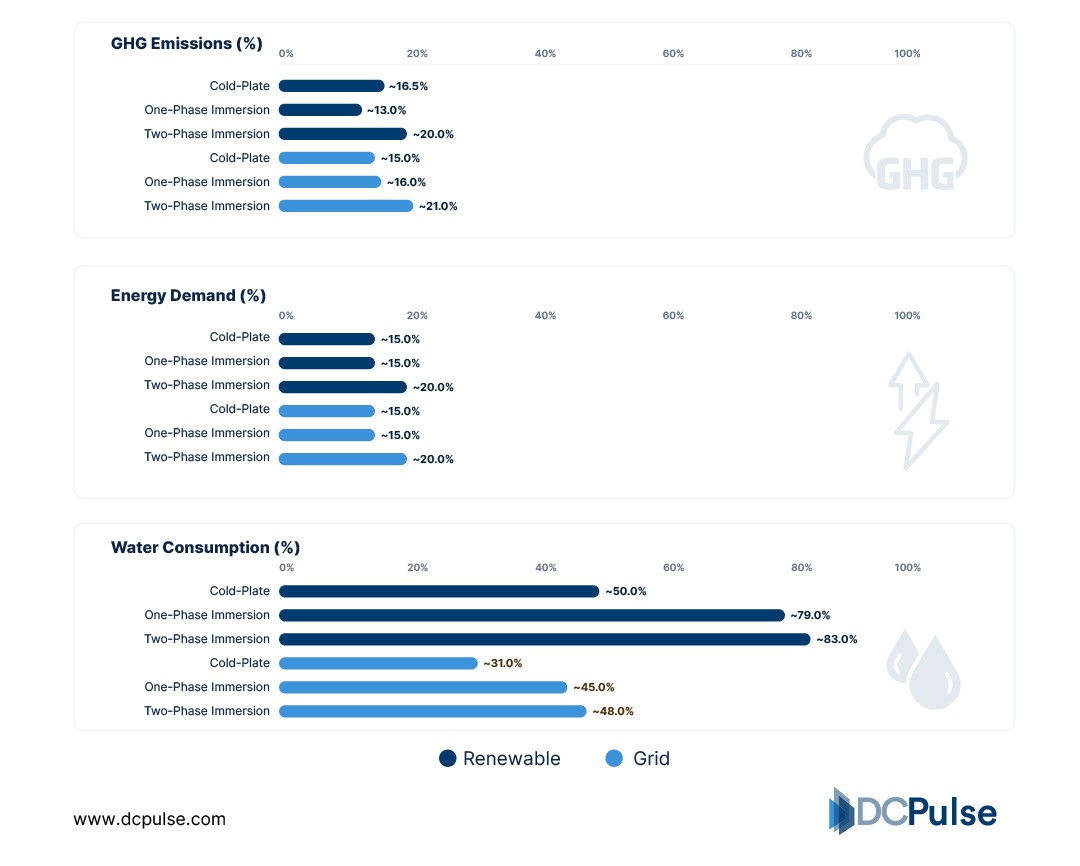

In Microsoft’s 2024 life-cycle study, cold-plate and immersion cooling both showed clear gains over air cooling: 15-21% lower emissions, 15-20% less energy use, and 31-52% less water consumption.

Life-Cycle Impact Reduction Compared to Air Cooling

Submer’s hyperscale immersion pods, meanwhile, are being pitched as drop-in AI bays for operators who want to scale quickly without waiting for new builds, a sign of how mainstream immersion is becoming.

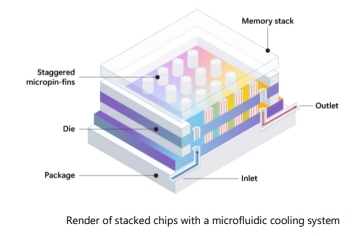

At the chip level, microfluidic cooling is redefining what “contact cooling” means. Microsoft’s partnership with Corintis, a Swiss startup, has yielded a bio-inspired microchannel system etched into silicon, removing heat directly at the transistor layer. The prototype achieved three times better heat removal than a standard cold plate and cut local temperature rise by 65%.


Microfluidics Cooling System

Finally, edge and modular deployments are proving that liquid cooling isn’t limited to hyperscalers. Companies like Iceotope and Submer are shipping sealed, immersion-ready containers for colocation and industrial edge workloads, fully assembled, fluid-filled, and factory-tested. These prefabricated pods give enterprises a shortcut to high-density AI computing without the construction delays of a conventional data hall.

Together, these technologies are transforming cooling from a back-end facility function into an integrated design discipline, one that determines not just performance, but sustainability and real estate economics.

Cooling Efficiency by Method

Who’s betting big on liquid cooling, and why now?

In the race to keep AI engines humming, big players are placing serious bets on cooling. These are not incremental tweaks; they’re strategic moves that show the industry knows the shift from air to liquid isn’t optional anymore.

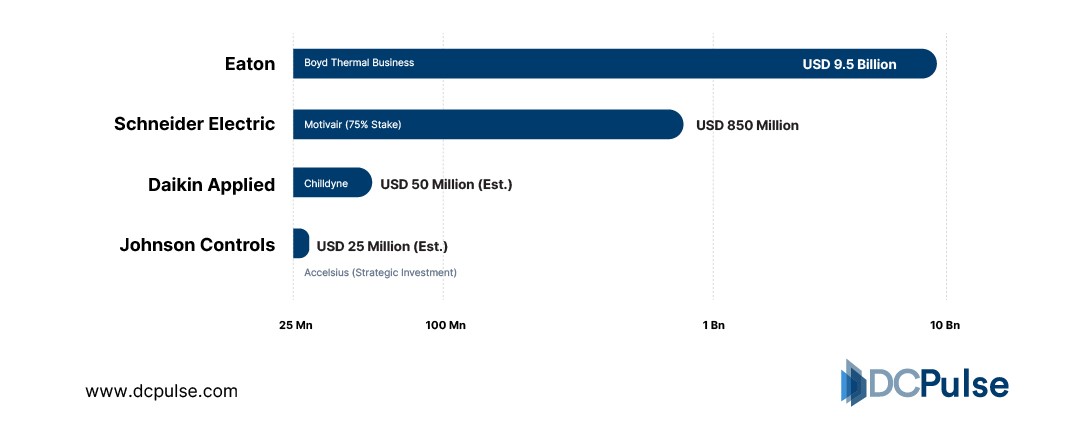

Major Cooling Investment Size

Eaton Corporation signed a monumental agreement to acquire Boyd Thermal for USD 9.5 billion, a deal explicitly aimed at marrying its electrical power competency with liquid-cooling capabilities for data centers. Boyd’s forecasts show USD 1.7 billion in liquid-cooling sales by 2026, making the scale of this move very clear.

Daikin Applied acquired liquid-cooling specialist Chilldyne, whose negative-pressure direct-to-chip distribution units address one of liquid cooling’s biggest operational fears: leaks.

Schneider Electric, combined with Motivair, is launching a full liquid-cooling portfolio certified for high-density AI racks (100+ kW per rack) and engineered with chip-vendor collaboration.

Johnson Controls made a strategic investment in two-phase direct-to-chip cooling specialist Accelsius, signalling belief in fluid-phase change tech as the next frontier.

Why all this heavy lifting now?

Because liquid cooling is no longer a “nice to have”: it’s becoming essential. With hyperscale racks shrinking in floor area and growing past 100 kW each, the infrastructure cost, energy burden, and sustainability risks of air-only cooling are now business-critical.

As one industry analyst put it, “You can’t build a data centre with a three- to four-year horizon any more without imagined liquid cooling capability.”

These moves also reflect vendor consolidation, supply-chain realignment and preparation for the next wave, AI factories, distributed cloud hubs and edge sites that demand compact, high-density, thermally efficient designs.

The acquisitions and portfolios above show where the industry thinks the value will be by 2030: liquid first, with air reserved for legacy or low-density loads.

By 2030, will liquid cooling dominate, or just divide the market?

If 2025 was the year liquid cooling went from pilot to plan, 2030 will be when it becomes policy. Every hyperscaler now treats liquid as standard in new AI builds. The only question is how far that dominance extends beyond the megawatt halls.

Research from Straits Research shows the liquid-cooling market growing at double-digit rates through 2030, driven by AI’s rising rack densities and energy limits. Microsoft’s 2024 life-cycle study found that cold-plate and immersion systems cut energy use by 15-20%, emissions by up to 21%, and water consumption by over 30% compared with top-tier air cooling. That efficiency makes the economic case hard to ignore.

Still, air cooling isn’t disappearing. For edge, enterprise, and retrofit sites below 30 kW per rack, improved air systems and free-air designs remain simpler and cheaper. The market will likely split: liquid for dense AI cores, air for the rest.

By 2030, liquid cooling will lead new construction, but the real win is balance: choosing the right medium for the right workload, not declaring a single victor. Air and liquid, together, complete the thermodynamic map of modern computing.